Elena Torta

Hybrid Decision Making for Scalable Multi-Agent Navigation: Integrating Semantic Maps, Discrete Coordination, and Model Predictive Control

Oct 16, 2024

Abstract: This paper presents a framework for multi-agent navigation in structured but dynamic environments, integrating three key components: a shared semantic map encoding metric and semantic environmental knowledge, a claim policy for coordinating access to areas within the environment, and a Model Predictive Controller for generating motion trajectories that respect environmental and coordination constraints. The main advantages of this approach include: (i) enforcing area occupancy constraints derived from specific task requirements; (ii) enhancing computational scalability by eliminating the need for collision avoidance constraints between robotic agents; and (iii) the ability to anticipate and avoid deadlocks between agents. The paper includes both simulations and physical experiments demonstrating the framework's effectiveness in various representative scenarios.

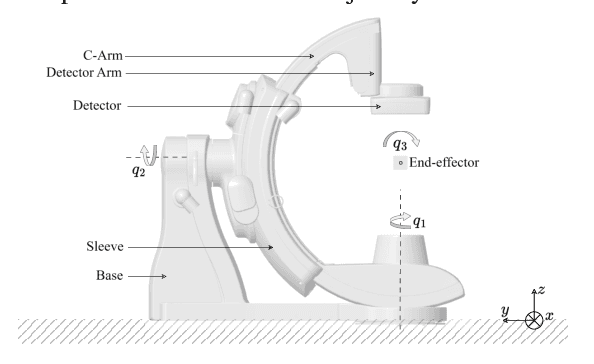

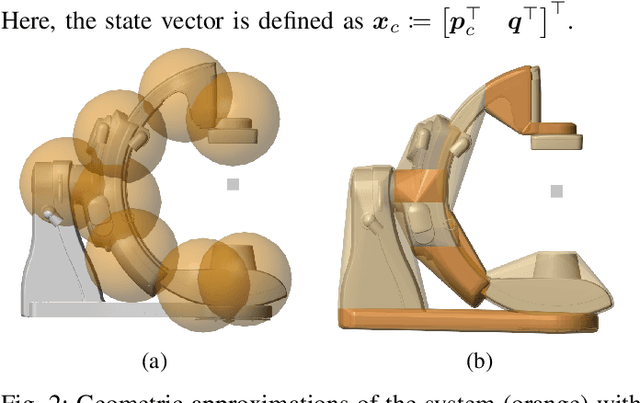

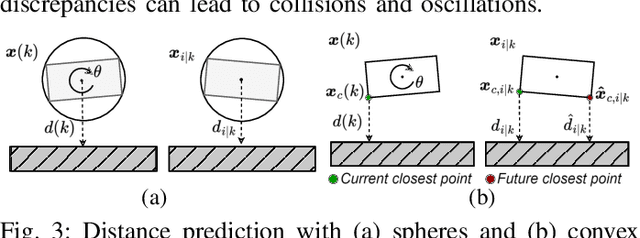

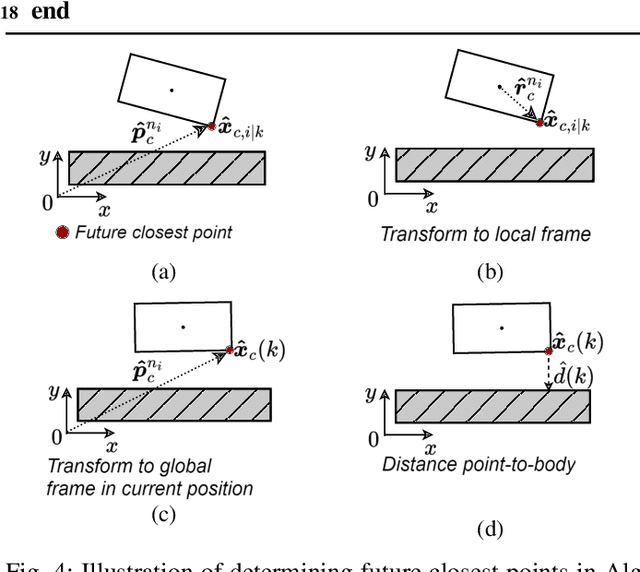

Non-Conservative Obstacle Avoidance for Multi-Body Systems Leveraging Convex Hulls and Predicted Closest Points

Oct 16, 2024

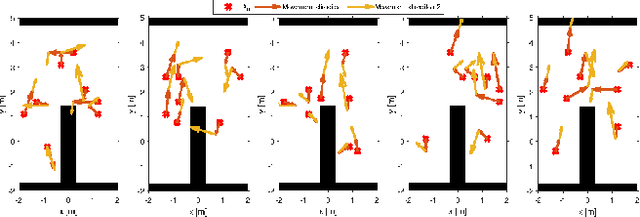

Abstract: This paper introduces a novel approach that integrates future closest point predictions into the distance constraints of a collision avoidance controller, leveraging convex hulls with closest point distance calculations. By addressing abrupt shifts in closest points, this method effectively reduces collision risks and enhances controller performance. Applied to an Image Guided Therapy robot and validated through simulations and user experiments, the framework demonstrates improved distance prediction accuracy, smoother trajectories, and safer navigation near obstacles.

Generation of skill-specific maps from graph world models for robotic systems

Feb 28, 2024

Abstract: With the increase in the availability of Building Information Models (BIM) and (semi-)automatic tools to generate BIM from point clouds, we propose a world model architecture and algorithms that use the semantic and geometric knowledge encoded within these models to generate maps for robot localization and navigation. When heterogeneous robots are deployed within an environment, maps obtained from classical SLAM approaches might not be shareable between all agents within a team of robots, e.g., due to a mismatch in sensor type or a difference in physical robot dimensions. Our approach extracts the 3D geometry and semantic description of building elements (e.g., material, element type, color) from BIM and represents this knowledge in a graph. Based on queries on the graph and knowledge of the skills of the robot, we can generate skill-specific maps that can be used during the execution of localization or navigation tasks. The approach is validated with data from complex built environments and integrated into existing navigation frameworks.

A Bayesian optimization framework for the automatic tuning of MPC-based shared controllers

Nov 02, 2023

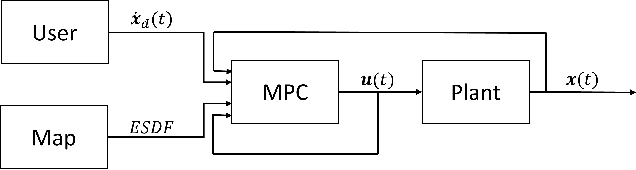

Abstract: This paper presents a Bayesian optimization framework for the automatic tuning of shared controllers that are formulated as Model Predictive Control (MPC) problems. The proposed framework includes the design of performance metrics as well as the representation of user inputs for simulation-based optimization. The framework is applied to the optimization of a shared controller for an Image Guided Therapy robot. VR-based user experiments confirm that the automatically tuned MPC shared controller outperforms a hand-tuned baseline version and generalizes well.
