Eisa Hedayati

An experimental system for detection and localization of hemorrhage using ultra-wideband microwaves with deep learning

Oct 03, 2023

Abstract: Stroke is a leading cause of mortality and disability. Emergent diagnosis and intervention are critical, and predicated upon initial brain imaging; however, existing clinical imaging modalities are generally costly, immobile, and demand highly specialized operation and interpretation. Low-energy microwaves have been explored as low-cost, small form factor, fast, and safe probes of tissue dielectric properties, with both imaging and diagnostic potential. Nevertheless, challenges inherent to microwave reconstruction have impeded progress, hence microwave imaging (MWI) remains an elusive scientific aim. Herein, we introduce a dedicated experimental framework comprising a robotic navigation system to translate blood-mimicking phantoms within an anatomically realistic human head model. An 8-element ultra-wideband (UWB) array of modified antipodal Vivaldi antennas was developed and driven by a two-port vector network analyzer spanning 0.6-9.0 GHz at an operating power of 1 mW. Complex scattering parameters were measured, and dielectric signatures of hemorrhage were learned using a dedicated deep neural network for prediction of hemorrhage classes and localization. Overall sensitivity and specificity for detection exceeded 0.99, with a Rayleigh mean localization error of 1.65 mm. The study establishes the feasibility of a robust experimental model and deep learning solution for UWB microwave stroke detection.
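The detection and localization metrics quoted in this abstract can be illustrated with a minimal Python sketch. It assumes one plausible reading of "Rayleigh mean localization error": the radial errors are modeled as Rayleigh distributed, the scale is fitted by maximum likelihood, and the distribution mean is reported. The function names and this interpretation are assumptions for illustration and are not taken from the paper.

```python
import math


def sensitivity_specificity(tp, fn, tn, fp):
    """Return (sensitivity, specificity) from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)  # fraction of true hemorrhages detected
    specificity = tn / (tn + fp)  # fraction of healthy cases correctly cleared
    return sensitivity, specificity


def rayleigh_mean_error(errors_mm):
    """Mean of a Rayleigh distribution fitted to radial errors (assumed reading).

    Maximum-likelihood scale: sigma^2 = sum(r^2) / (2 N).
    Rayleigh mean: sigma * sqrt(pi / 2).
    """
    n = len(errors_mm)
    sigma = math.sqrt(sum(r * r for r in errors_mm) / (2 * n))
    return sigma * math.sqrt(math.pi / 2)
```

Given per-sample distances between predicted and true phantom positions, `rayleigh_mean_error` returns a single summary value in the same units as its input.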

Deep Convolutional Autoencoder for Assessment of Anomalies in Multi-stream Sensor Data

Feb 15, 2022

Abstract: A fully convolutional autoencoder is developed for the detection of anomalies in multi-sensor vehicle drive-cycle data from the powertrain domain. Preliminary results collected on real-world powertrain data show that the reconstruction error of faulty drive cycles deviates significantly relative to the reconstruction of healthy drive cycles using the trained autoencoder. The results demonstrate applicability for identifying faulty drive-cycles, and for improving the accuracy of system prognosis and predictive maintenance in connected vehicles.
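The reconstruction-error idea in this abstract can be sketched in a few lines of Python. The autoencoder itself is not shown: the sketch assumes its reconstructions are already available as plain lists. The mean-plus-k-standard-deviations threshold and all function names are illustrative assumptions, not the decision rule used in the paper.

```python
import statistics


def reconstruction_error(original, reconstructed):
    """Mean squared error between a signal and its autoencoder reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)


def fit_threshold(healthy_errors, k=3.0):
    """Assumed rule: mean + k * std of the errors seen on healthy drive cycles."""
    return statistics.mean(healthy_errors) + k * statistics.pstdev(healthy_errors)


def flag_faulty(errors, threshold):
    """Mark a drive cycle as faulty when its error exceeds the threshold."""
    return [e > threshold for e in errors]
```

In use, the threshold would be fitted once on errors from healthy drive cycles and then applied to new cycles. The value of `k` trades missed faults against false alarms.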

Machine learning method for light field refocusing

Mar 30, 2021

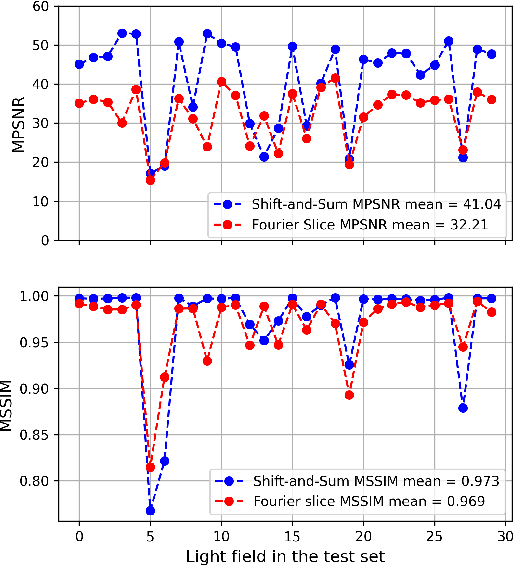

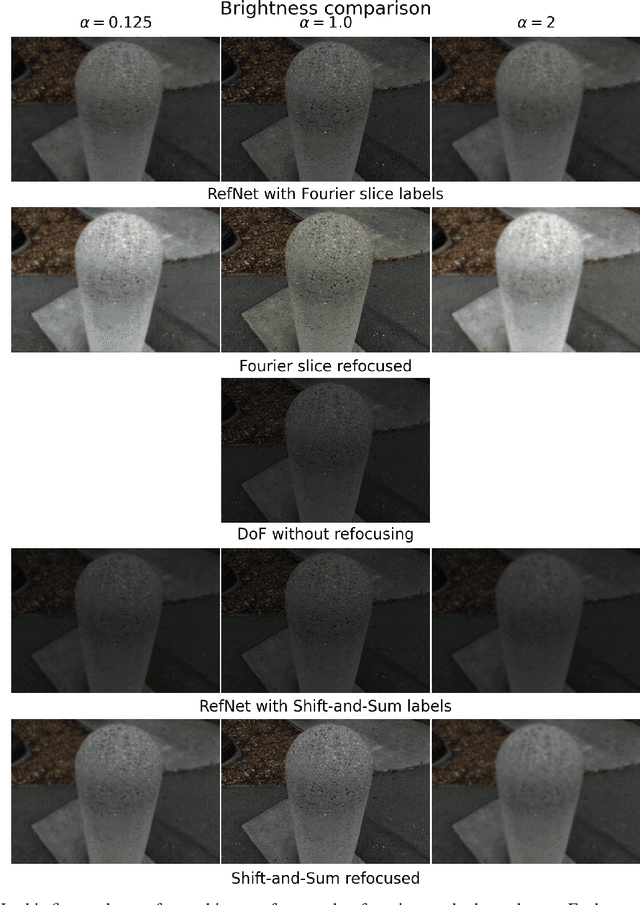

Abstract: Light field imaging introduced the capability to refocus an image after capture. Currently there are two popular methods for refocusing: the shift-and-sum and Fourier slice methods. Neither of these two methods can refocus the light field in real time without pre-processing. In this paper we introduce a machine learning based refocusing technique that is capable of extracting 16 refocused images with refocusing parameters of α = 0.125, 0.250, 0.375, ..., 2.0 in real time. We trained our network, called RefNet, in two experiments: once using the Fourier slice method as the training (i.e., "ground truth") data, and once using the shift-and-sum method as the training data. We showed that in both cases, not only is the RefNet method at least 134x faster than previous approaches, but also the color prediction of RefNet is superior to both Fourier slice and shift-and-sum methods while having similar depth of field and focus distance performance.
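For orientation, the shift-and-sum baseline named in this abstract can be sketched in pure Python on a toy light field with one angular and one spatial dimension. This is a simplified form under stated assumptions: it ignores the magnification term of the full refocusing integral, rounds shifts to whole pixels, and wraps at the borders. The `pixels_per_view` scale and the function names are hypothetical, and sign conventions differ between implementations. It is not RefNet and not the authors' code.

```python
def refocus_alphas(count=16, step=0.125):
    """The 16 refocusing parameters 0.125, 0.250, ..., 2.0 listed in the abstract."""
    return [step * k for k in range(1, count + 1)]


def shift_and_sum(light_field, view_offsets, alpha, pixels_per_view=1.0):
    """Refocus a toy light field (list of 1D views) by shifting and averaging.

    Each view at angular offset u is shifted by u * pixels_per_view * (1 - 1/alpha)
    pixels (rounded, with wrap-around), then all views are averaged.
    """
    width = len(light_field[0])
    slope = pixels_per_view * (1.0 - 1.0 / alpha)
    out = [0.0] * width
    for view, u in zip(light_field, view_offsets):
        shift = int(round(u * slope))
        for x in range(width):
            out[x] += view[(x + shift) % width]
    return [value / len(light_field) for value in out]
```

With `alpha = 1` no view is shifted, so the result is the plain average of the views. Other values of `alpha` bring scene points at other depths into alignment before averaging.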

Light Field Compression by Residual CNN Assisted JPEG

Sep 30, 2020

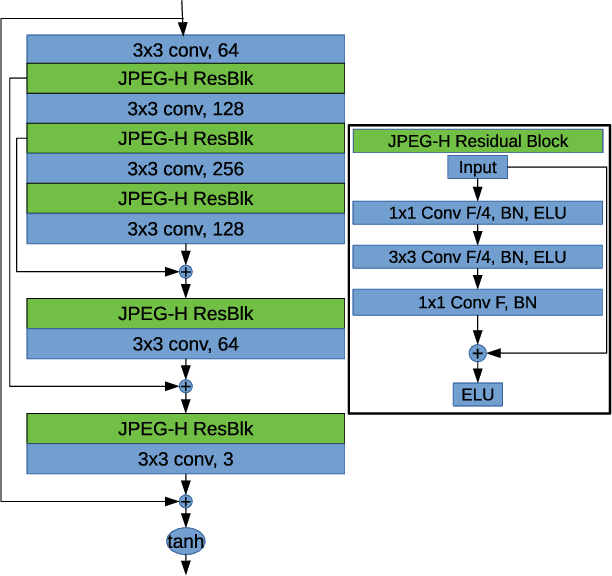

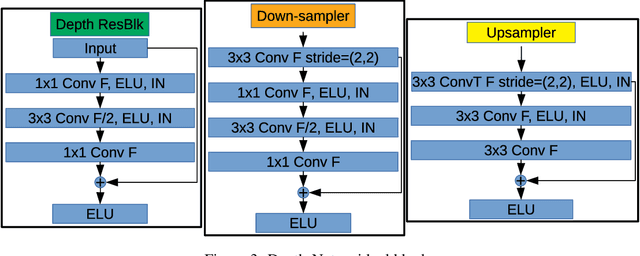

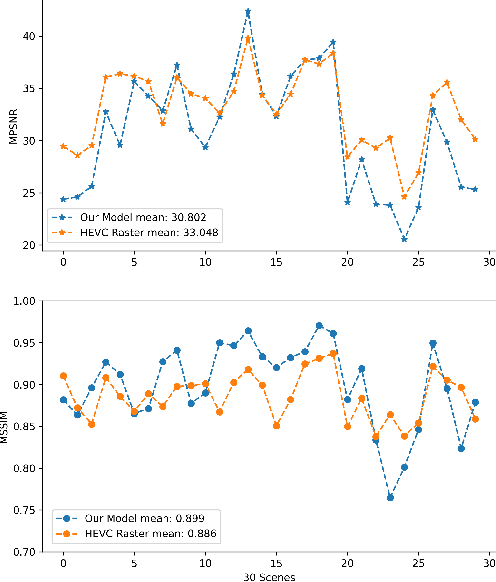

Abstract: Light field (LF) imaging has gained significant attention due to its recent success in 3-dimensional (3D) displaying and rendering as well as augmented and virtual reality usage. Nonetheless, because of the two extra dimensions, LFs are much larger than conventional images. We develop a JPEG-assisted learning-based technique to reconstruct an LF from a JPEG bitstream with a bits-per-pixel ratio of 0.0047 on average. For compression, we keep the LF's center view and use JPEG compression with 50% quality. Our reconstruction pipeline consists of a small JPEG enhancement network (JPEG-Hance), a depth estimation network (Depth-Net), followed by view synthesis by warping the enhanced center view. Our pipeline is significantly faster than using video compression on pseudo-sequences extracted from an LF, both in compression and decompression, while maintaining effective performance. We show that with a 1% compression time cost and 18x speedup for decompression, our method's reconstructed LFs have better structural similarity index metric (SSIM) and comparable peak signal-to-noise ratio (PSNR) compared to the state-of-the-art video compression techniques used to compress LFs.
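The very low bits-per-pixel figure follows from storing a single JPEG-compressed center view while counting every pixel of every view in the denominator. The Python sketch below shows that arithmetic. The function name and the light field dimensions in the usage note are hypothetical and are not taken from the paper.

```python
def bits_per_pixel(compressed_bytes, views_u, views_v, height, width):
    """Bits per pixel when one bitstream represents a whole light field.

    The compressed size is divided by the pixel count of all
    views_u * views_v views, not only the stored center view.
    """
    total_pixels = views_u * views_v * height * width
    return 8 * compressed_bytes / total_pixels
```

As a hypothetical example, a 1000-byte bitstream representing a 2 x 2 grid of 10 x 10 pixel views gives `bits_per_pixel(1000, 2, 2, 10, 10) == 20.0`. Increasing the number of views for the same bitstream lowers the ratio proportionally.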
