Egor Bulychev

Finetuning Large Language Models for Vulnerability Detection

Jan 30, 2024Abstract:This paper presents the results of finetuning large language models (LLMs) for the task of detecting vulnerabilities in source code. We leverage WizardCoder, a recent improvement of the state-of-the-art LLM StarCoder, and adapt it for vulnerability detection through further finetuning. To accelerate training, we modify WizardCoder's training procedure, also we investigate optimal training regimes. For the imbalanced dataset with many more negative examples than positive, we also explore different techniques to improve classification performance. The finetuned WizardCoder model achieves improvement in ROC AUC and F1 measures on balanced and imbalanced vulnerability datasets over CodeBERT-like model, demonstrating the effectiveness of adapting pretrained LLMs for vulnerability detection in source code. The key contributions are finetuning the state-of-the-art code LLM, WizardCoder, increasing its training speed without the performance harm, optimizing the training procedure and regimes, handling class imbalance, and improving performance on difficult vulnerability detection datasets. This demonstrates the potential for transfer learning by finetuning large pretrained language models for specialized source code analysis tasks.

Identifying collaborators in large codebases

May 07, 2019

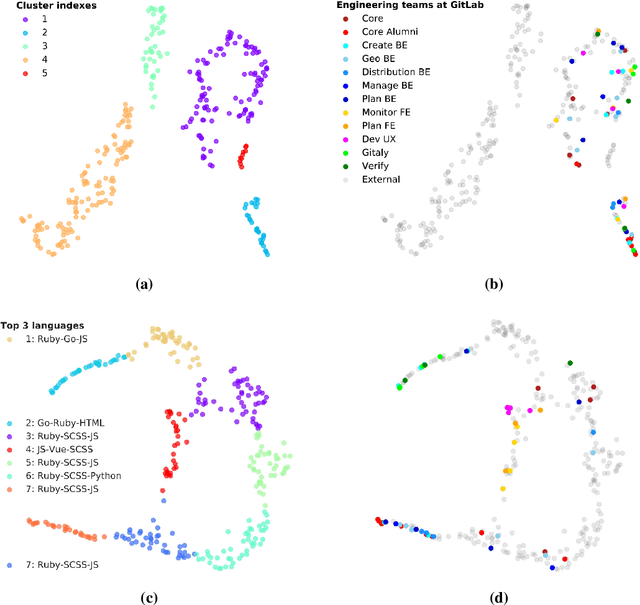

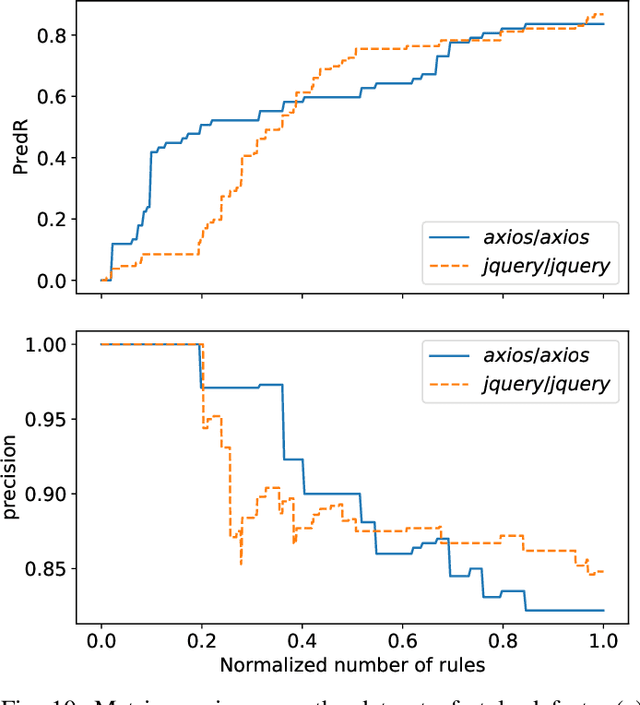

Abstract:The way developers collaborate inside and particularly across teams often escapes management's attention, despite a formal organization with designated teams being defined. Observability of the actual, organically formed engineering structure provides decision makers invaluable additional tools to manage their talent pool. To identify existing inter and intra-team interactions - and suggest relevant opportunities for suitable collaborations - this paper studies contributors' commit activity, usage of programming languages, and code identifier topics by embedding and clustering them. We evaluate our findings collaborating with the GitLab organization, analyzing 117 of their open source projects. We show that we are able to restore their engineering organization in broad strokes, and also reveal hidden coding collaborations as well as justify in-house technical decisions.

STYLE-ANALYZER: fixing code style inconsistencies with interpretable unsupervised algorithms

Apr 01, 2019

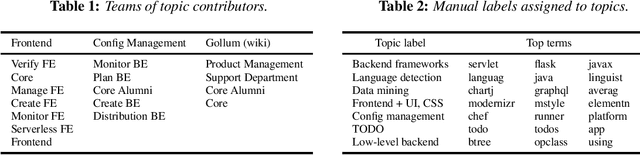

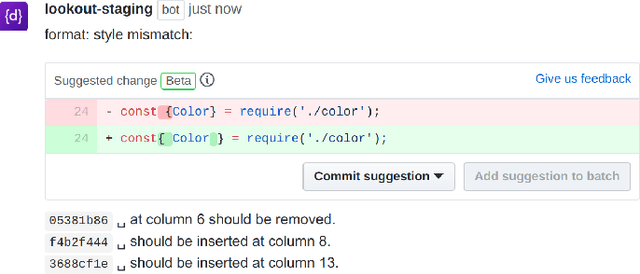

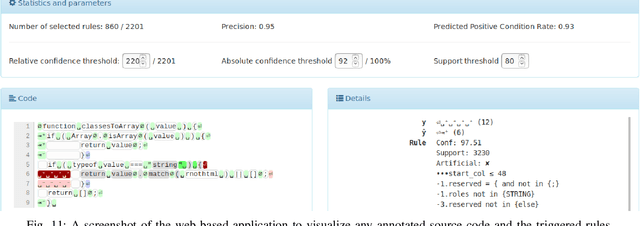

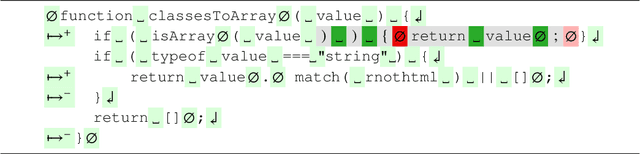

Abstract:Source code reviews are manual, time-consuming, and expensive. Human involvement should be focused on analyzing the most relevant aspects of the program, such as logic and maintainability, rather than amending style, syntax, or formatting defects. Some tools with linting capabilities can format code automatically and report various stylistic violations for supported programming languages. They are based on rules written by domain experts, hence, their configuration is often tedious, and it is impractical for the given set of rules to cover all possible corner cases. Some machine learning-based solutions exist, but they remain uninterpretable black boxes. This paper introduces STYLE-ANALYZER, a new open source tool to automatically fix code formatting violations using the decision tree forest model which adapts to each codebase and is fully unsupervised. STYLE-ANALYZER is built on top of our novel assisted code review framework, Lookout. It accurately mines the formatting style of each analyzed Git repository and expresses the found format patterns with compact human-readable rules. STYLE-ANALYZER can then suggest style inconsistency fixes in the form of code review comments. We evaluate the output quality and practical relevance of STYLE-ANALYZER by demonstrating that it can reproduce the original style with high precision, measured on 19 popular JavaScript projects, and by showing that it yields promising results in fixing real style mistakes. STYLE-ANALYZER includes a web application to visualize how the rules are triggered. We release STYLE-ANALYZER as a reusable and extendable open source software package on GitHub for the benefit of the community.

Splitting source code identifiers using Bidirectional LSTM Recurrent Neural Network

Jul 19, 2018

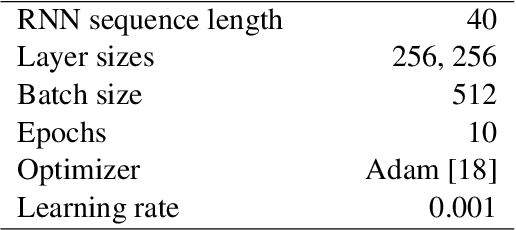

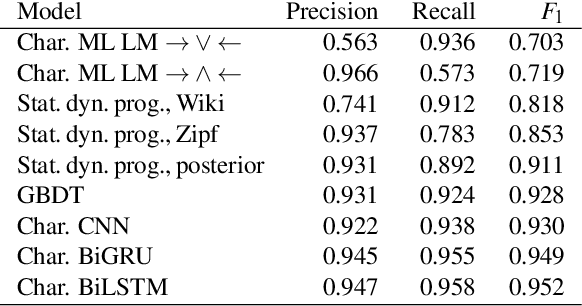

Abstract:Programmers make rich use of natural language in the source code they write through identifiers and comments. Source code identifiers are selected from a pool of tokens which are strongly related to the meaning, naming conventions, and context. These tokens are often combined to produce more precise and obvious designations. Such multi-part identifiers count for 97% of all naming tokens in the Public Git Archive - the largest dataset of Git repositories to date. We introduce a bidirectional LSTM recurrent neural network to detect subtokens in source code identifiers. We trained that network on 41.7 million distinct splittable identifiers collected from 182,014 open source projects in Public Git Archive, and show that it outperforms several other machine learning models. The proposed network can be used to improve the upstream models which are based on source code identifiers, as well as improving developer experience allowing writing code without switching the keyboard case.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge