Edward Rietman

Transient Dynamics in Lattices of Differentiating Ring Oscillators

Jun 08, 2025

Abstract: Recurrent neural networks (RNNs) are machine learning models widely used for learning temporal relationships. Current state-of-the-art RNNs use integrating or spiking neurons, two classes of computing units whose outputs depend directly on their internal states, and accordingly there is a wealth of literature characterizing the behavior of large networks built from these neurons. On the other hand, past research on differentiating neurons, whose outputs are computed from the derivatives of their internal states, remains limited to small hand-designed networks with fewer than one hundred neurons. Here we show via numerical simulation that large lattices of differentiating neuron rings exhibit the local neural synchronization behavior found in the Kuramoto model of interacting oscillators. We begin by characterizing the periodic orbits of uncoupled rings, herein called ring oscillators. We then show that when these rings are coupled together into lattices, local correlations emerge between oscillators and grow over time. As the correlation length grows, transient dynamics arise in which large regions of the lattice settle to the same periodic orbit, and thin domain boundaries separate adjacent, out-of-phase regions. The steady-state scale of these correlated regions depends on how the neurons are shared between adjacent rings, which suggests that lattices of differentiating ring oscillators might be tuned for use as reservoir computers. Combined with their simple circuit design and potential for low power consumption, this makes differentiating neural nets a promising substrate for neuromorphic computing that will enable low-power AI applications.
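The abstract compares the lattice dynamics to the Kuramoto model. As a point of reference only, the sketch below simulates identical Kuramoto phase oscillators on a small periodic square lattice with nearest-neighbor coupling and measures how the phase correlation between sites `r` apart grows from a random initial state. It does not model differentiating neurons or ring oscillators; the lattice size, coupling strength, time step, and the helper names `kuramoto_lattice` and `correlation` are illustrative assumptions, not parameters from the paper.

```python
import math
import random

def kuramoto_lattice(n=20, coupling=2.0, omega=1.0, dt=0.05, steps=600, seed=0):
    """Euler-integrate identical Kuramoto oscillators on an n x n periodic lattice.

    d(theta_i)/dt = omega + (coupling / 4) * sum_{j in 4 neighbors} sin(theta_j - theta_i)
    Returns (initial_phases, final_phases) as nested lists.
    """
    rng = random.Random(seed)
    theta = [[rng.uniform(0.0, 2.0 * math.pi) for _ in range(n)] for _ in range(n)]
    initial = [row[:] for row in theta]
    for _ in range(steps):
        new = [[0.0] * n for _ in range(n)]
        for i in range(n):
            for j in range(n):
                t = theta[i][j]
                # Periodic boundaries: Python's % maps -1 to n - 1.
                pull = (math.sin(theta[(i + 1) % n][j] - t)
                        + math.sin(theta[(i - 1) % n][j] - t)
                        + math.sin(theta[i][(j + 1) % n] - t)
                        + math.sin(theta[i][(j - 1) % n] - t))
                new[i][j] = t + dt * (omega + coupling / 4.0 * pull)
        theta = new
    return initial, theta

def correlation(theta, r):
    """Mean cos(phase difference) between sites r apart along both lattice axes."""
    n = len(theta)
    total = 0.0
    for i in range(n):
        for j in range(n):
            total += math.cos(theta[i][j] - theta[(i + r) % n][j])
            total += math.cos(theta[i][j] - theta[i][(j + r) % n])
    return total / (2 * n * n)

before, after = kuramoto_lattice()
for r in (1, 2, 4, 8):
    print(f"r={r}  before={correlation(before, r):+.3f}  after={correlation(after, r):+.3f}")
```

Because the oscillators are identical, these dynamics are a gradient flow that lowers the total coupling energy, so with a small enough Euler step the nearest-neighbor correlation can only increase; correlations at larger distances lag behind, which is the qualitative picture of a growing correlation length.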

On the Dynamics of Learning Time-Aware Behavior with Recurrent Neural Networks

Jun 12, 2023

Abstract: Recurrent Neural Networks (RNNs) have shown great success in modeling time-dependent patterns, but there is limited research on their learned representations of latent temporal features and on how these representations emerge during training. To address this gap, we use timed automata (TA) to introduce a family of supervised learning tasks, with directly controllable complexity, that model behavior dependent on hidden temporal variables. Building upon past studies from the perspective of dynamical systems, we train RNNs to emulate temporal flip-flops, a new collection of TA that emphasizes the need for time-awareness over long-term memory. We find that these RNNs learn in phases: they quickly perfect any time-independent behavior, but they initially struggle to discover the hidden time-dependent features. In the case of periodic "time-of-day" aware automata, we show that the RNNs learn to switch between periodic orbits that encode time modulo the period of the transition rules. We then apply fixed point stability analysis to monitor changes in the RNN dynamics during training, and we observe that the learning phases are separated by a bifurcation from which the periodic behavior emerges. In this way, we demonstrate how dynamical systems theory can provide insight into not only the learned representations of these models but also the dynamics of the learning process itself. We argue that this style of analysis may also shed light on the training pathologies of recurrent architectures in contexts beyond time-awareness.
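The abstract mentions fixed point stability analysis and a bifurcation from which periodic behavior emerges. Below is a minimal sketch of that kind of analysis on a hypothetical, hand-set 2-unit vanilla RNN `h' = tanh(W h + b)`, not on any trained model from the paper: Newton's method locates a fixed point, and the eigenvalues of the Jacobian there decide its stability (stable when every eigenvalue has magnitude below 1). With `W` set to a gain `g` times a rotation by `theta` and `b = 0`, the origin is a fixed point whose Jacobian eigenvalues are `g*exp(±i*theta)`, so a complex-conjugate pair crosses the unit circle at `g = 1`. That is one textbook route (a Neimark-Sacker bifurcation) by which a periodic or quasi-periodic orbit appears in a discrete-time map; the sketch does not claim this is the bifurcation observed in the paper, and all function names are illustrative.

```python
import cmath
import math

def rnn_step(W, b, h):
    """One update of a hypothetical 2-unit vanilla RNN: h' = tanh(W h + b)."""
    return [math.tanh(W[i][0] * h[0] + W[i][1] * h[1] + b[i]) for i in range(2)]

def jacobian(W, b, h):
    """Jacobian of rnn_step at h: diag(1 - tanh^2(W h + b)) @ W."""
    out = rnn_step(W, b, h)
    return [[(1.0 - out[i] ** 2) * W[i][j] for j in range(2)] for i in range(2)]

def find_fixed_point(W, b, h0, tol=1e-12, max_iter=100):
    """Newton's method on the residual F(h) = tanh(W h + b) - h."""
    h = list(h0)
    for _ in range(max_iter):
        f = [a - c for a, c in zip(rnn_step(W, b, h), h)]
        if max(abs(v) for v in f) < tol:
            return h
        J = jacobian(W, b, h)
        # The Jacobian of F is J - I; solve (J - I) dx = -f with Cramer's rule.
        a, bb = J[0][0] - 1.0, J[0][1]
        c, d = J[1][0], J[1][1] - 1.0
        det = a * d - bb * c
        dx0 = (-f[0] * d + bb * f[1]) / det
        dx1 = (-a * f[1] + c * f[0]) / det
        h = [h[0] + dx0, h[1] + dx1]
    raise RuntimeError("Newton's method did not converge")

def eigenvalues_2x2(M):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    tr = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = cmath.sqrt(tr * tr / 4.0 - det)
    return tr / 2.0 + disc, tr / 2.0 - disc

def spectral_radius_at_fixed_point(g, theta, b=(0.0, 0.0), h0=(0.0, 0.0)):
    """Largest |eigenvalue| of the Jacobian at the fixed point reached from h0."""
    W = [[g * math.cos(theta), -g * math.sin(theta)],
         [g * math.sin(theta), g * math.cos(theta)]]
    h_star = find_fixed_point(W, list(b), h0)
    return max(abs(lam) for lam in eigenvalues_2x2(jacobian(W, list(b), h_star)))

for g in (0.8, 0.95, 1.05, 1.2):
    rho = spectral_radius_at_fixed_point(g, math.pi / 6)
    print(f"g={g:.2f}  spectral radius={rho:.3f}  {'stable' if rho < 1 else 'unstable'}")
```

Tracking this spectral radius across training checkpoints, instead of across a hand-swept gain `g`, is the general idea behind monitoring RNN dynamics during training.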

Deep Learning Regression of VLSI Plasma Etch Metrology

Sep 10, 2019

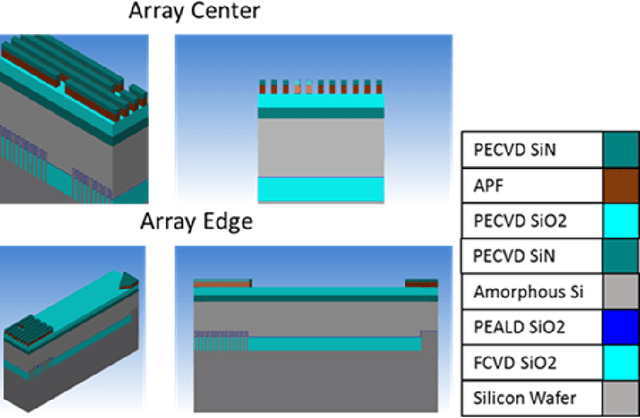

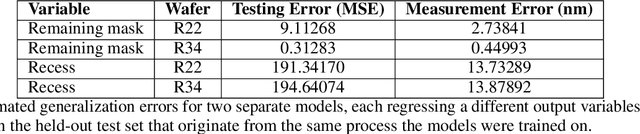

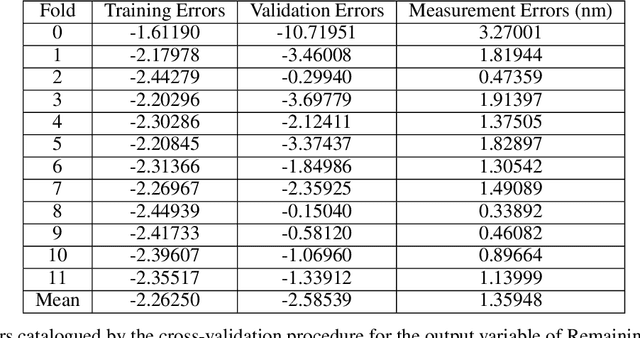

Abstract: In computer chip manufacturing, the study of etch patterns on silicon wafers, or metrology, occurs on the nanoscale and is therefore subject to large variation from small, yet significant, perturbations in the manufacturing environment. An enormous amount of information can be gathered from a single etch process, the sequence of actions taken to produce an etched wafer from a blank piece of silicon. Each final wafer, however, is costly to measure, which limits the number of examples available to train a predictive model. Part of the significance of this work is that the models performed well despite the limited number of examples. To accommodate the high-dimensional process signatures, we isolated important sensor variables and summarized the data using multiple domain-specific feature engineering techniques. We used a neural network architecture consisting of the summarized inputs, a single hidden layer of 4032 units, and an output layer of one unit. We learned two separate models, one for each metrology measurement in the dataset, Recess and Remaining Mask; because these outputs are only abstractly related and do not form a two-dimensional space, they were not modeled jointly. Our results approach the error tolerance of the microscopic imaging system. The model can make predictions for a class of etch recipes that have the correct number of etch steps and that run in plasma reactors (chambers containing an ionized gas that determine the manufacturing environment) equipped with the appropriate sensors. Notably, because of the summarization techniques used, this method is not restricted to any maximum process length, which allows it to be adapted to new processes that satisfy these requirements. To automate semiconductor manufacturing, models like these will be needed throughout the process to evaluate production quality.
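A minimal sketch of the pipeline described in this abstract, under explicit assumptions: each etch step's trace for each selected sensor is reduced to a few summary statistics (mean, population standard deviation, minimum, and maximum are stand-ins, since the abstract does not say which summaries were used), so processes of any duration map to a vector whose length depends only on the number of steps and sensors. That vector feeds a regressor with one hidden layer of 4032 units and one output unit, instantiated once per target (Recess and Remaining Mask). The ReLU activation, weight initialization, sensor names, and the helpers `summarize_trace`, `summarize_process`, and `OneHiddenLayerRegressor` are all hypothetical, and training is omitted, so the printed predictions come from untrained weights.

```python
import math
import random
import statistics

def summarize_trace(trace):
    """Reduce one sensor's readings for one etch step to 4 hypothetical statistics.

    The trace must be non-empty (fmean and pstdev raise on empty input).
    """
    return [statistics.fmean(trace), statistics.pstdev(trace), min(trace), max(trace)]

def summarize_process(steps):
    """steps: one dict per etch step, mapping sensor name -> list of readings.

    Assumes every step records the same sensors; the output length is
    n_steps * n_sensors * 4 no matter how long each step ran.
    """
    features = []
    for step in steps:
        for sensor in sorted(step):  # fixed sensor order keeps features aligned
            features.extend(summarize_trace(step[sensor]))
    return features

class OneHiddenLayerRegressor:
    """Summarized inputs -> one hidden layer (4032 units by default) -> one output."""

    def __init__(self, n_inputs, n_hidden=4032, seed=0):
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(n_inputs)
        self.W1 = [[rng.gauss(0.0, scale) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.gauss(0.0, 1.0 / math.sqrt(n_hidden)) for _ in range(n_hidden)]
        self.b2 = 0.0

    def predict(self, x):
        # ReLU hidden layer (assumed activation), then a single linear output unit.
        hidden = [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.W1, self.b1)]
        return sum(w * h for w, h in zip(self.w2, hidden)) + self.b2

# Two hypothetical two-step processes of very different durations.
short_run = [{"rf_power": [100.0, 101.0], "pressure": [5.0, 5.1]},
             {"rf_power": [80.0, 82.0, 81.0], "pressure": [4.0, 4.2, 4.1]}]
long_run = [{"rf_power": [100.0] * 50, "pressure": [5.0] * 50},
            {"rf_power": [80.0] * 120, "pressure": [4.0] * 120}]
x_short = summarize_process(short_run)
x_long = summarize_process(long_run)
print(len(x_short), len(x_long))  # same length despite different durations

# One separate model per metrology target, as in the abstract.
recess_model = OneHiddenLayerRegressor(len(x_short))
remaining_mask_model = OneHiddenLayerRegressor(len(x_short), seed=1)
print(recess_model.predict(x_short), remaining_mask_model.predict(x_short))
```

The fixed-length summary is what removes any dependence on maximum process length, while the requirement that a recipe have the correct number of etch steps shows up here as the number of entries in `steps`.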
