Eduardo Mojica-Nava

Event-Triggered Control for Weight-Unbalanced Directed Networks

Aug 22, 2021

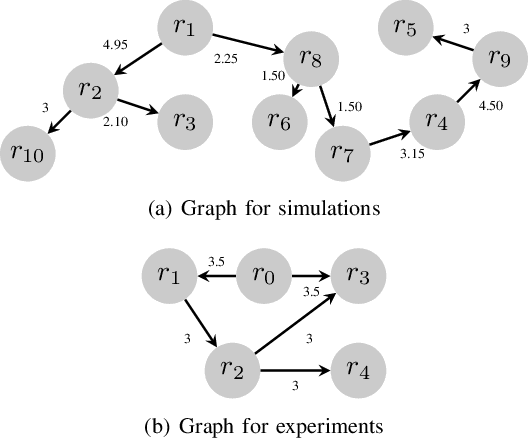

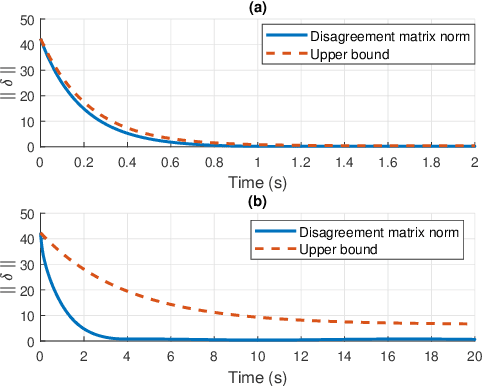

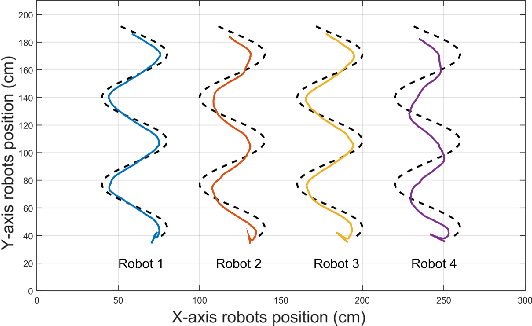

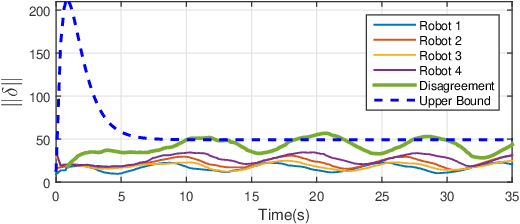

Abstract: We develop an event-triggered control strategy that drives a weight-unbalanced, directed, homogeneous robot network to dynamic consensus. We give guarantees for synchronizing the network both when all robots have access to the reference and when only a limited number of robots do. The proposed event-triggered control reduces signal updates and avoids periodic updating. Unlike some current control methods, we prove stability using a logarithmic norm, which allows the control law to be applied to a wide range of directed graphs, in contrast to other works where event-triggered control can be implemented only over strongly connected and weight-balanced digraphs. We test the performance of our algorithm in experiments both in simulation and on a real team of robots.

Robust Asynchronous and Network-Independent Cooperative Learning

Oct 20, 2020

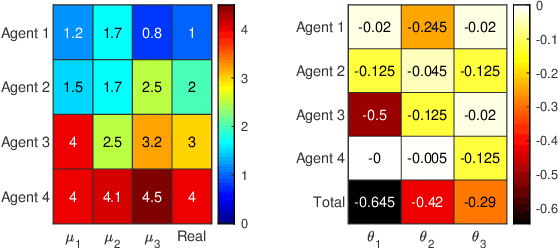

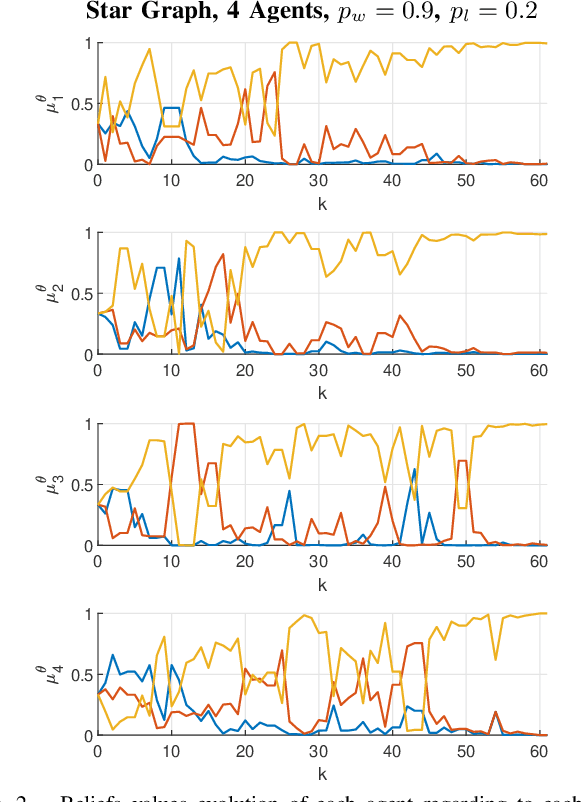

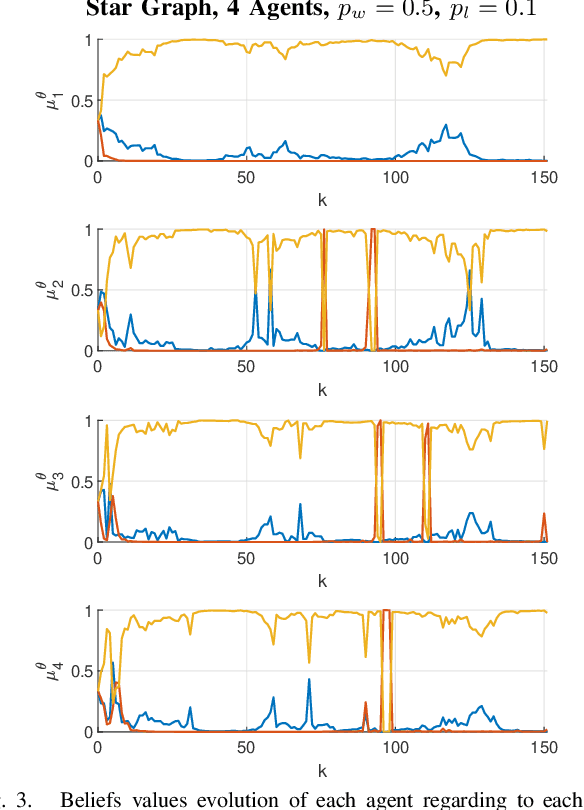

Abstract: We consider the model of cooperative learning via distributed non-Bayesian learning, where a network of agents tries to jointly agree on a hypothesis that best describes a sequence of locally available observations. Building upon recently proposed weak communication network models, we propose a robust cooperative learning rule that allows asynchronous communications, message delays, unpredictable message losses, and directed communication among nodes. We show that our proposed learning dynamics guarantee that all agents in the network will have an asymptotic exponential decay of their beliefs on the wrong hypothesis, indicating that the beliefs of all agents will concentrate on the optimal hypotheses. Numerical experiments provide supporting evidence across a number of network setups.
