Eduardo Izquierdo

Multifunctionality in embodied agents: Three levels of neural reuse

May 16, 2018

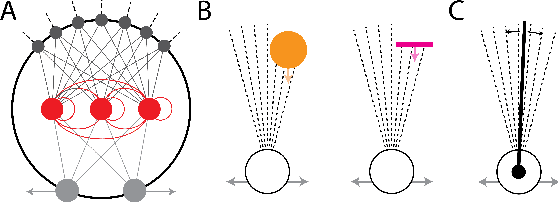

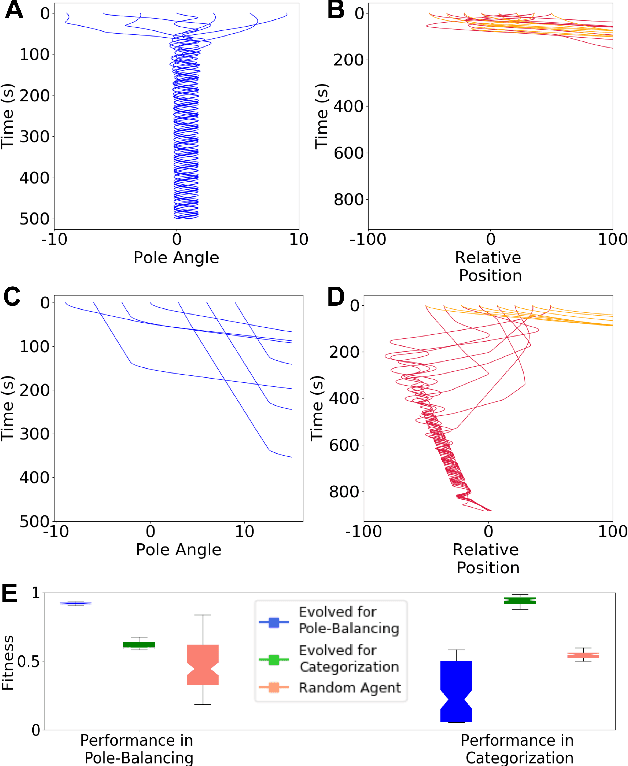

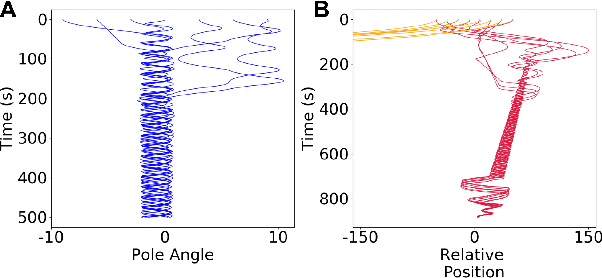

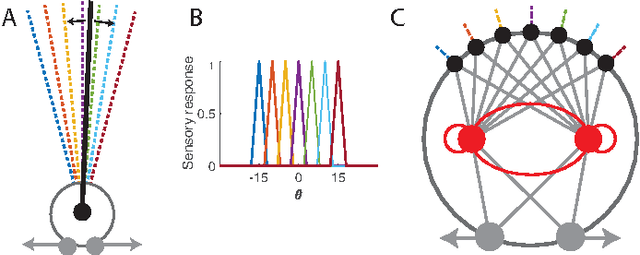

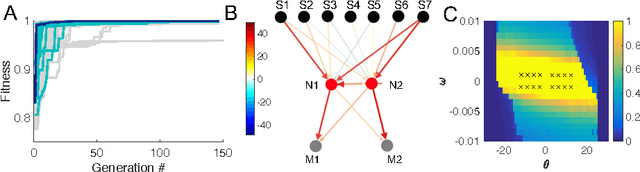

Abstract: The brain in conjunction with the body is able to adapt to new environments and perform multiple behaviors through reuse of neural resources and transfer of existing behavioral traits. Although mechanisms that underlie this ability are not well understood, they are largely attributed to neuromodulation. In this work, we demonstrate that an agent can be multifunctional using the same sensory and motor systems across behaviors, in the absence of modulatory mechanisms. Further, we lay out the different levels at which neural reuse can occur through a dynamical filtering of the brain-body-environment system's operation: structural network, autonomous dynamics, and transient dynamics. Notably, transient dynamics reuse could only be explained by studying the brain-body-environment system as a whole and not just the brain. The multifunctional agent we present here demonstrates neural reuse at all three levels.

Optimal modularity and memory capacity of neural networks

Oct 02, 2017

Abstract: The neural network is a powerful computing framework that has been exploited by biological evolution and by humans for solving diverse problems. Although the computational capabilities of neural networks are determined by their structure, the current understanding of the relationships between a neural network's architecture and function is still primitive. Here we reveal that a neural network's modular architecture plays a vital role in determining the neural dynamics and memory performance of the network. In particular, we demonstrate that there exists an optimal modularity for memory performance, where a balance between local cohesion and global connectivity is established, allowing optimally modular networks to remember longer. Our results suggest that insights from dynamical analysis of neural networks and information spreading processes can be leveraged to better design neural networks and may shed light on the brain's modular organization.

Information Bottleneck in Control Tasks with Recurrent Spiking Neural Networks

Jun 06, 2017

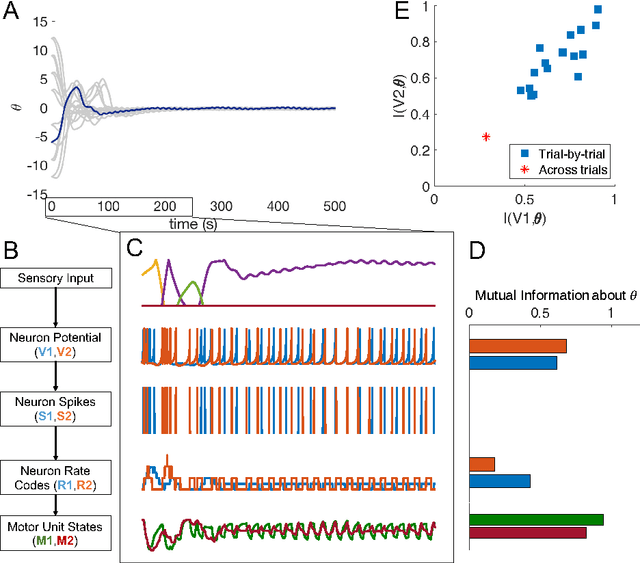

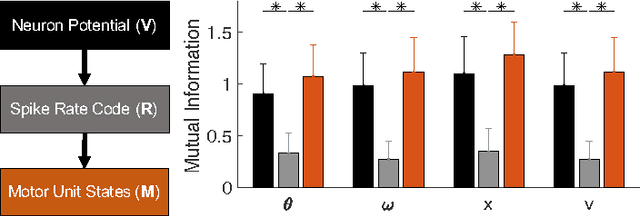

Abstract: The nervous system encodes continuous information from the environment in the form of discrete spikes, and then decodes these to produce smooth motor actions. Understanding how spikes integrate, represent, and process information to produce behavior is one of the greatest challenges in neuroscience. Information theory has the potential to help us address this challenge. Informational analyses of deep and feed-forward artificial neural networks solving static input-output tasks have led to the proposal of the Information Bottleneck principle, which states that deeper layers encode more relevant yet minimal information about the inputs. Such an analysis of networks that are recurrent, spiking, and perform control tasks remains relatively unexplored. Here, we present results from a Mutual Information analysis of a recurrent spiking neural network that was evolved to perform the classic pole-balancing task. Our results show that these networks deviate from the Information Bottleneck principle prescribed for feed-forward networks.

* Accepted at ICANN'17; to appear in Springer Lecture Notes in Computer Science
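
The abstract above does not say which estimator was used for the Mutual Information analysis. As an illustration only, here is a minimal sketch of the standard plug-in estimate of I(X;Y) for paired discrete samples, for example a binned sensor reading and a binned neuron firing rate. The function name `mutual_information` and the assumption that the variables have already been discretized are hypothetical choices for this sketch, not details taken from the paper.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in bits from paired discrete samples.

    Assumes xs and ys are equal-length sequences of hashable symbols
    (e.g. bin indices). This is an illustrative estimator, not
    necessarily the one used in the paper.
    """
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    if n == 0:
        raise ValueError("need at least one sample")

    joint = Counter(zip(xs, ys))  # counts of each (x, y) pair
    px = Counter(xs)              # marginal counts of x
    py = Counter(ys)              # marginal counts of y

    mi = 0.0
    for (x, y), c in joint.items():
        # p(x,y) * log2( p(x,y) / (p(x) * p(y)) ), written with counts
        mi += (c / n) * math.log2(c * n / (px[x] * py[y]))
    return mi


# A balanced binary variable carries 1 bit about itself.
identical = mutual_information([0, 1, 0, 1], [0, 1, 0, 1])
# Two variables whose joint counts factorize carry no shared information.
independent = mutual_information([0, 0, 1, 1], [0, 1, 0, 1])
```

The plug-in estimate is biased upward for small sample sizes, so in practice the bin count and the amount of data would need to be chosen with care.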
