Easop Lee

Learning Legged MPC with Smooth Neural Surrogates

Jan 17, 2026

Abstract:Deep learning and model predictive control (MPC) can play complementary roles in legged robotics. However, integrating learned models with online planning remains challenging. When dynamics are learned with neural networks, three key difficulties arise: (1) stiff transitions from contact events may be inherited from the data; (2) additional non-physical local nonsmoothness can occur; and (3) training datasets can induce non-Gaussian model errors due to rapid state changes. We address (1) and (2) by introducing the smooth neural surrogate, a neural network with tunable smoothness designed to provide informative predictions and derivatives for trajectory optimization through contact. To address (3), we train these models using a heavy-tailed likelihood that better matches the empirical error distributions observed in legged-robot dynamics. Together, these design choices substantially improve the reliability, scalability, and generalizability of learned legged MPC. Across zero-shot locomotion tasks of increasing difficulty, smooth neural surrogates with robust learning yield consistent reductions in cumulative cost on simple, well-conditioned behaviors (typically 10-50%), while providing substantially larger gains in regimes where standard neural dynamics often fail outright. In these regimes, smoothing enables reliable execution (from 0/5 to 5/5 success) and produces about 2-50x lower cumulative cost, reflecting orders-of-magnitude absolute improvements in robustness rather than incremental performance gains.
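
As a rough illustration of why a heavy-tailed likelihood can help with the non-Gaussian errors mentioned in this abstract, the sketch below compares a Gaussian negative log-likelihood with a Student-t one on a single scalar residual. The choice of a Student-t distribution, the function names, and the parameters `sigma` and `nu` are assumptions made for this example; the abstract does not state which heavy-tailed likelihood the authors use.

```python
import math


def gaussian_nll(residual, sigma=1.0):
    """Negative log-likelihood of a scalar residual under N(0, sigma^2)."""
    return 0.5 * math.log(2.0 * math.pi * sigma**2) + 0.5 * (residual / sigma) ** 2


def student_t_nll(residual, sigma=1.0, nu=3.0):
    """Negative log-likelihood under a zero-mean Student-t with scale sigma.

    Assumption: Student-t stands in for "a heavy-tailed likelihood";
    nu (degrees of freedom) controls how heavy the tails are.
    """
    z2 = (residual / sigma) ** 2
    # Log of the normalizing constant of the Student-t density.
    log_norm = (
        math.lgamma((nu + 1.0) / 2.0)
        - math.lgamma(nu / 2.0)
        - 0.5 * math.log(nu * math.pi)
        - math.log(sigma)
    )
    # The penalty grows logarithmically in the residual, not quadratically.
    return -log_norm + 0.5 * (nu + 1.0) * math.log1p(z2 / nu)


if __name__ == "__main__":
    # Hypothetical residuals: small ones are typical, large ones mimic
    # errors around rapid state changes such as contact events.
    for r in (0.1, 1.0, 5.0, 20.0):
        print(f"residual={r:5.1f}  gaussian={gaussian_nll(r):9.3f}  "
              f"student_t={student_t_nll(r):7.3f}")
```

Under these assumptions, a large residual is penalized far less by the Student-t loss than by the Gaussian one, so a few outlier transitions have less influence on the fitted model.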

Sym2Real: Symbolic Dynamics with Residual Learning for Data-Efficient Adaptive Control

Sep 18, 2025

Abstract:We present Sym2Real, a fully data-driven framework that provides a principled way to train low-level adaptive controllers in a highly data-efficient manner. Using only about 10 trajectories, we achieve robust control of both a quadrotor and a racecar in the real world, without expert knowledge or simulation tuning. Our approach achieves this data efficiency by bringing symbolic regression to real-world robotics while addressing key challenges that prevent its direct application, including noise sensitivity and model degradation that lead to unsafe control. Our key observation is that the underlying physics is often shared for a system regardless of internal or external changes. Hence, we strategically combine low-fidelity simulation data with targeted real-world residual learning. Through experimental validation on quadrotor and racecar platforms, we demonstrate consistent data-efficient adaptation across six out-of-distribution sim2sim scenarios and successful sim2real transfer across five real-world conditions. More information and videos can be found at http://generalroboticslab.com/Sym2Real

Perception Stitching: Zero-Shot Perception Encoder Transfer for Visuomotor Robot Policies

Jun 28, 2024

Abstract:Vision-based imitation learning has shown promising capabilities of endowing robots with various motion skills given visual observation. However, current visuomotor policies fail to adapt to drastic changes in their visual observations. We present Perception Stitching, which enables strong zero-shot adaptation to large visual changes by directly stitching novel combinations of visual encoders. Our key idea is to enforce modularity of visual encoders by aligning the latent visual features among different visuomotor policies. Our method disentangles the perceptual knowledge from the downstream motion skills and allows the reuse of the visual encoders by directly stitching them to a policy network trained with partially different visual conditions. We evaluate our method in various simulated and real-world manipulation tasks. While baseline methods failed at all attempts, our method achieved zero-shot success in real-world visuomotor tasks. Our quantitative and qualitative analysis of the learned features of the policy network provides more insights into the high performance of our proposed method.

Policy Stitching: Learning Transferable Robot Policies

Sep 24, 2023

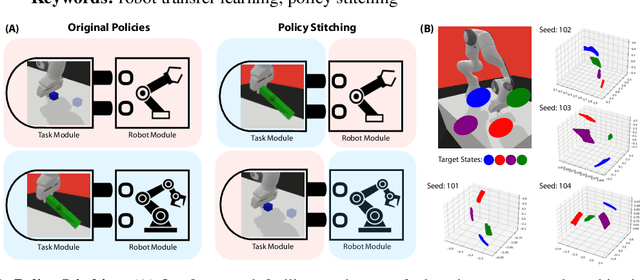

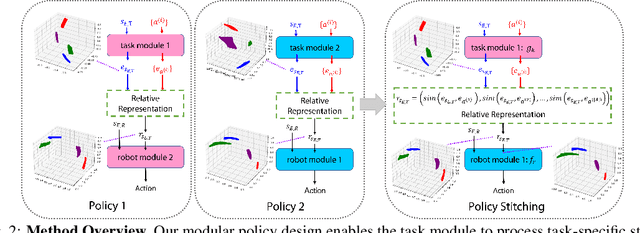

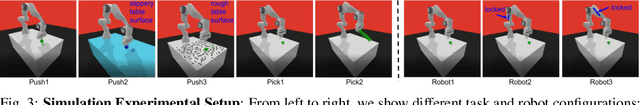

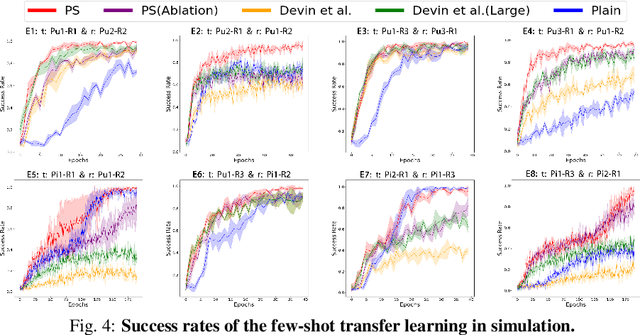

Abstract:Training robots with reinforcement learning (RL) typically involves heavy interactions with the environment, and the acquired skills are often sensitive to changes in task environments and robot kinematics. Transfer RL aims to leverage previous knowledge to accelerate learning of new tasks or new body configurations. However, existing methods struggle to generalize to novel robot-task combinations and scale to realistic tasks due to complex architecture design or strong regularization that limits the capacity of the learned policy. We propose Policy Stitching, a novel framework that facilitates robot transfer learning for novel combinations of robots and tasks. Our key idea is to apply modular policy design and align the latent representations between the modular interfaces. Our method allows direct stitching of the robot and task modules trained separately to form a new policy for fast adaptation. Our simulated and real-world experiments on various 3D manipulation tasks demonstrate the superior zero-shot and few-shot transfer learning performances of our method. Our project website is at: http://generalroboticslab.com/PolicyStitching/ .
