Dustin Dannenhauer

Anticipatory Thinking Challenges in Open Worlds: Risk Management

Jun 22, 2023

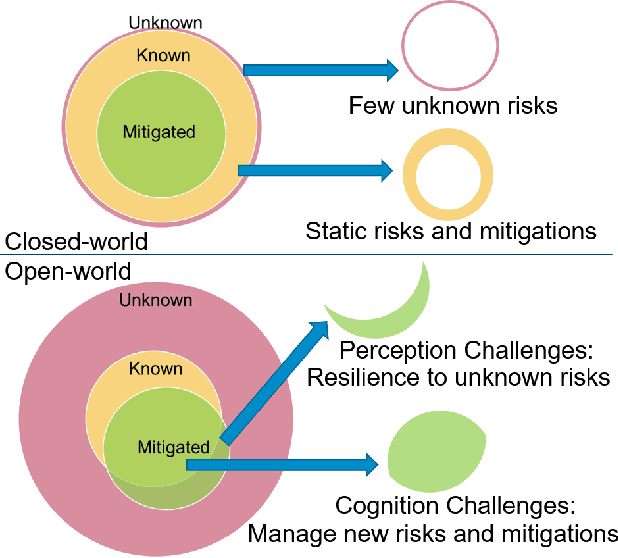

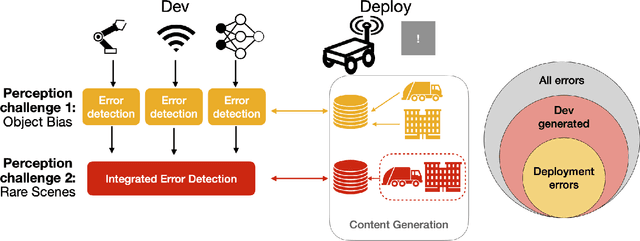

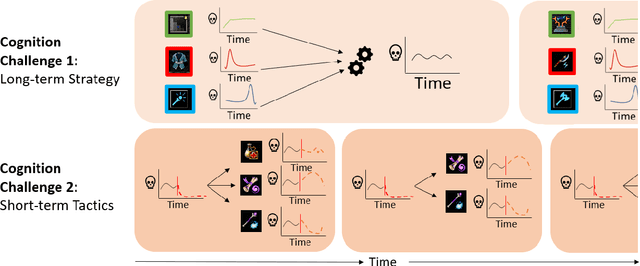

Abstract: Anticipatory thinking drives our ability to manage risk - identification and mitigation - in everyday life, from bringing an umbrella when it might rain to buying car insurance. As AI systems become part of everyday life, they too have begun to manage risk. Autonomous vehicles log millions of miles, and StarCraft and Go agents have capabilities similar to humans, implicitly managing risks presented by their opponents. To further increase performance in these tasks, out-of-distribution evaluation can characterize a model's bias, what we view as a type of risk management. However, learning to identify and mitigate low-frequency, high-impact risks is at odds with the observational bias required to train machine learning models. StarCraft and Go are closed-world domains whose risks are known and mitigations well documented, ideal for learning through repetition. Adversarial filtering datasets provide difficult examples but are laborious to curate and static, both barriers to real-world risk management. Adversarial robustness focuses on model poisoning under the assumption there is an adversary with malicious intent, without considering naturally occurring adversarial examples. These methods are all important steps towards improving risk management but do so without considering open worlds. We unify these open-world risk management challenges with two contributions. The first is our perception challenges, designed for agents with imperfect perceptions of their environment whose consequences have a high impact. The second is our cognition challenges, designed for agents that must dynamically adjust their risk exposure as they identify new risks and learn new mitigations. Our goal with these challenges is to spur research into solutions that assess and improve the anticipatory thinking required by AI agents to manage risk in open worlds and ultimately the real world.

Human in the Loop Novelty Generation

Jun 12, 2023

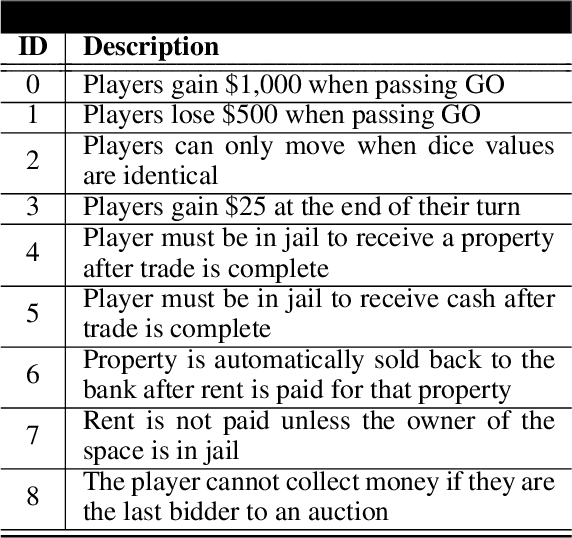

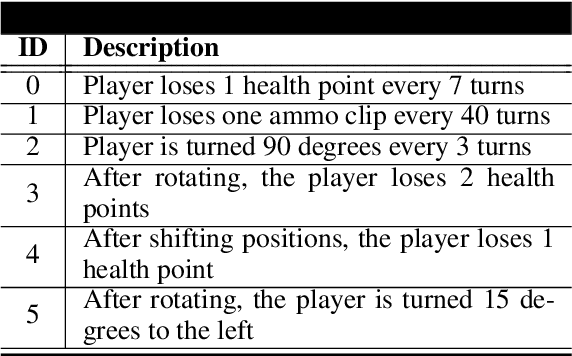

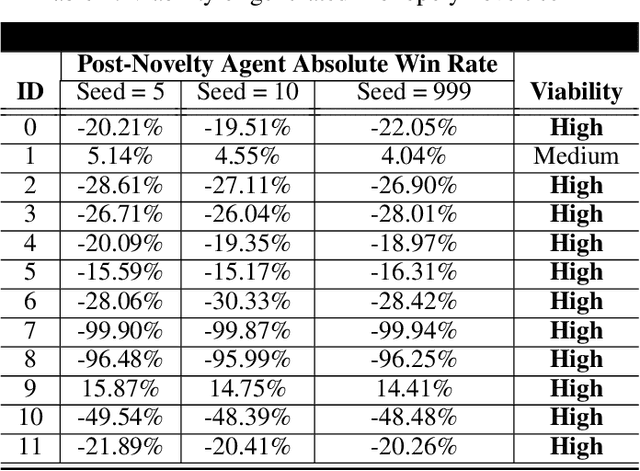

Abstract: Developing artificial intelligence approaches to overcome novel, unexpected circumstances is a difficult, unsolved problem. One challenge to advancing the state of the art in novelty accommodation is the availability of testing frameworks for evaluating performance against novel situations. Recent novelty generation approaches in domains such as Science Birds and Monopoly leverage human domain expertise during the search to discover new novelties. Such approaches introduce human guidance before novelty generation occurs and yield novelties that can be directly loaded into a simulated environment. We introduce a new approach to novelty generation that uses abstract models of environments (including simulation domains) that do not require domain-dependent human guidance to generate novelties. A key result is a larger, often infinite space of novelties capable of being generated, with the trade-off being a requirement to involve human guidance to select and filter novelties post generation. We describe our Human-in-the-Loop novelty generation process using our open-source novelty generation library to test baseline agents in two domains: Monopoly and VizDoom. Our results show the Human-in-the-Loop method enables users to develop, implement, test, and revise novelties within 4 hours for both Monopoly and VizDoom domains.

A Framework for Characterizing Novel Environment Transformations in General Environments

May 07, 2023

Abstract: To be robust to surprising developments, an intelligent agent must be able to respond to many different types of unexpected change in the world. To date, there are no general frameworks for defining and characterizing the types of environment changes that are possible. We introduce a formal and theoretical framework for defining and categorizing environment transformations, changes to the world an agent inhabits. We introduce two types of environment transformation: R-transformations which modify environment dynamics and T-transformations which modify the generation process that produces scenarios. We present a new language for describing domains, scenario generators, and transformations, called the Transformation and Simulator Abstraction Language (T-SAL), and a logical formalism that rigorously defines these concepts. Then, we offer the first formal and computational set of tests for eight categories of environment transformations. This domain-independent framework paves the way for describing unambiguous classes of novelty, constrained and domain-independent random generation of environment transformations, replication of environment transformation studies, and fair evaluation of agent robustness.

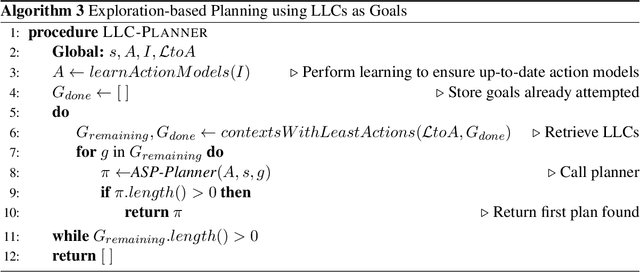

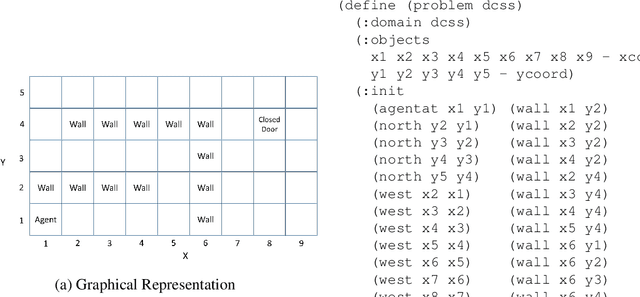

Self-directed Learning of Action Models using Exploratory Planning

Mar 07, 2022

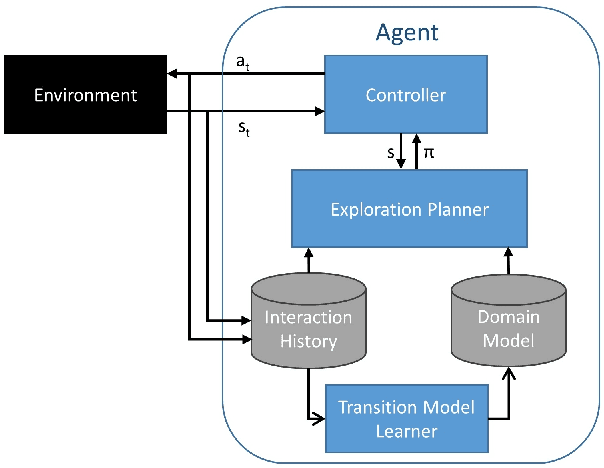

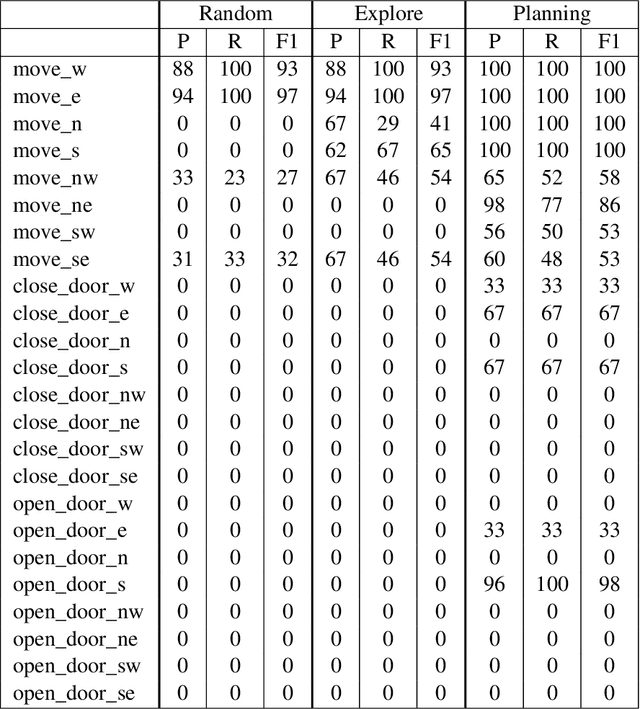

Abstract: Complex, real-world domains may not be fully modeled for an agent, especially if the agent has never operated in the domain before. The agent's ability to effectively plan and act in such a domain is influenced by its knowledge of when it can perform specific actions and the effects of those actions. We describe a novel exploratory planning agent that is capable of learning action preconditions and effects without expert traces or a given goal. The agent's architecture allows it to perform both exploratory actions as well as goal-directed actions, which opens up important considerations for how exploratory planning and goal planning should be controlled, as well as how the agent's behavior should be explained to any teammates it may have. The contributions of this work include a new representation for contexts called Lifted Linked Clauses, a novel exploration action selection approach using these clauses, an exploration planner that uses lifted linked clauses as goals in order to reach new states, and an empirical evaluation in a scenario from an exploration-focused video game demonstrating that lifted linked clauses improve exploration and action model learning against non-planning baseline agents.

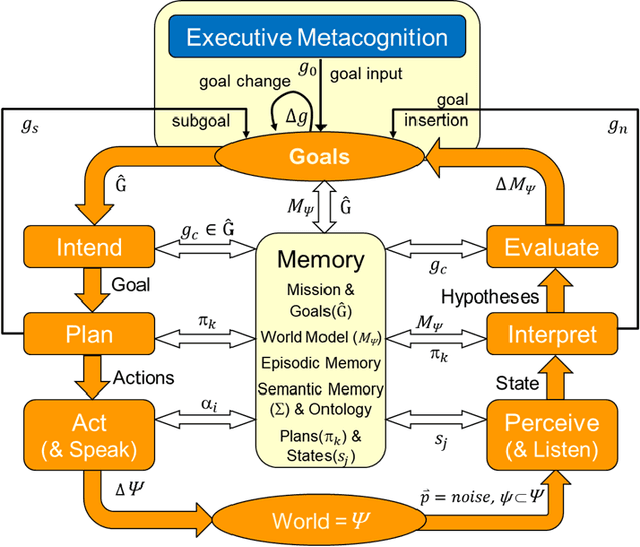

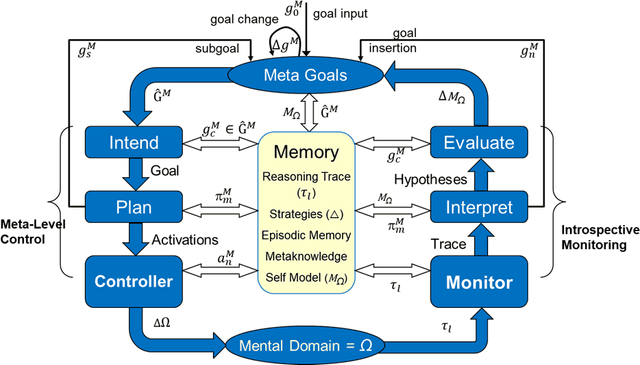

Computational Metacognition

Jan 30, 2022

Abstract: Computational metacognition represents a cognitive systems perspective on high-order reasoning in integrated artificial systems that seeks to leverage ideas from human metacognition and from metareasoning approaches in artificial intelligence. The key characteristic is to declaratively represent and then monitor traces of cognitive activity in an intelligent system in order to manage the performance of cognition itself. Improvements in cognition then lead to improvements in behavior and thus performance. We illustrate these concepts with an agent implementation in a cognitive architecture called MIDCA and show the value of metacognition in problem-solving. The results illustrate how computational metacognition improves performance by changing cognition through meta-level goal operations and learning.

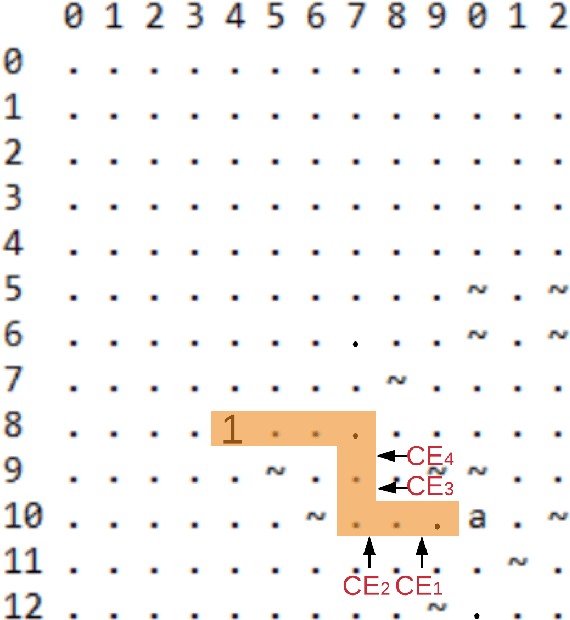

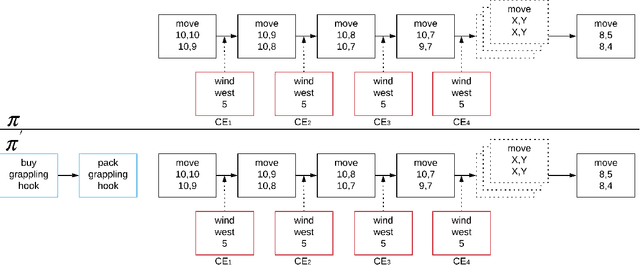

Anticipatory Thinking: A Metacognitive Capability

Jun 28, 2019

Abstract: Anticipatory thinking is a complex cognitive process for assessing and managing risk in many contexts. Humans use anticipatory thinking to identify potential future issues and proactively take actions to manage their risks. In this paper we define a cognitive systems approach to anticipatory thinking as a metacognitive goal reasoning mechanism. The contributions of this paper include (1) defining anticipatory thinking in the MIDCA cognitive architecture, (2) operationalizing anticipatory thinking as a three-step process for managing risk in plans, and (3) a numeric risk assessment calculating an expected cost-benefit ratio for modifying a plan with anticipatory actions.

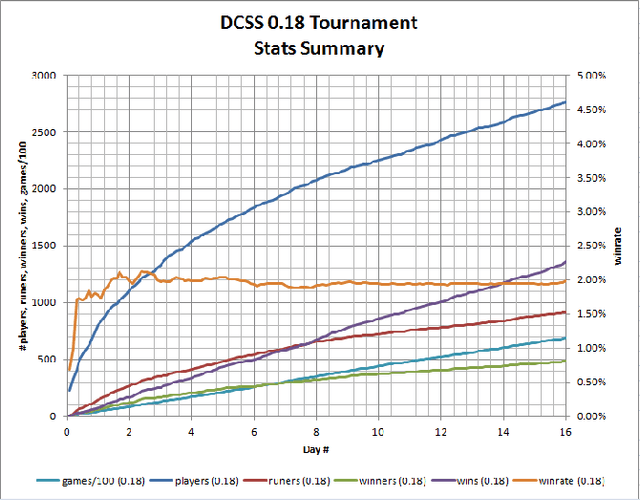

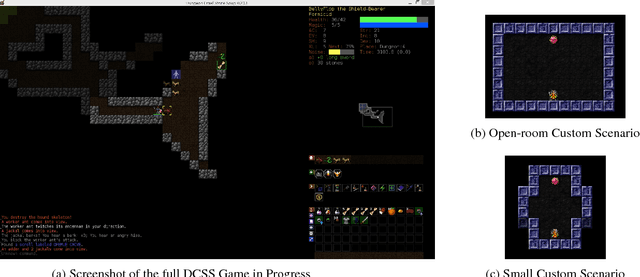

Dungeon Crawl Stone Soup as an Evaluation Domain for Artificial Intelligence

Feb 05, 2019

Abstract: Dungeon Crawl Stone Soup is a popular, single-player, free and open-source rogue-like video game with a sufficiently complex decision space that makes it an ideal testbed for research in cognitive systems and, more generally, artificial intelligence. This paper describes the properties of Dungeon Crawl Stone Soup that are conducive to evaluating new approaches of AI systems. We also highlight an ongoing effort to build an API for AI researchers in the spirit of recent game APIs such as MALMO, ELF, and the StarCraft II API. Dungeon Crawl Stone Soup's complexity offers significant opportunities for evaluating AI and cognitive systems, including human user studies. In this paper we provide (1) a description of the state space of Dungeon Crawl Stone Soup, (2) a description of the components for our API, and (3) the potential benefits of evaluating AI agents in the Dungeon Crawl Stone Soup video game.
