Anticipatory Thinking Challenges in Open Worlds: Risk Management

Jun 22, 2023

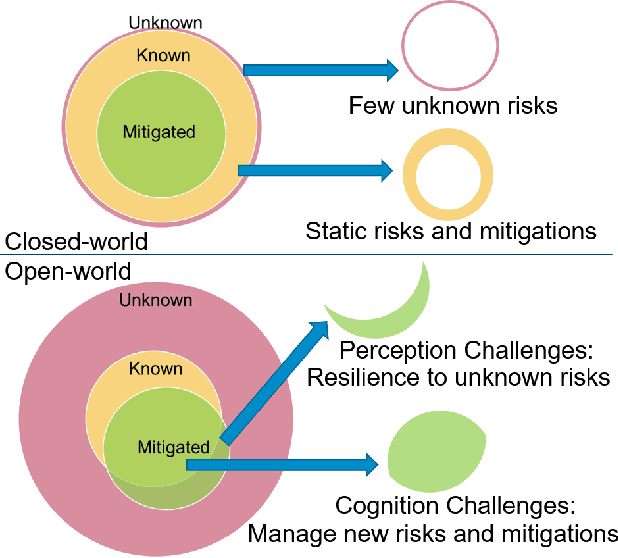

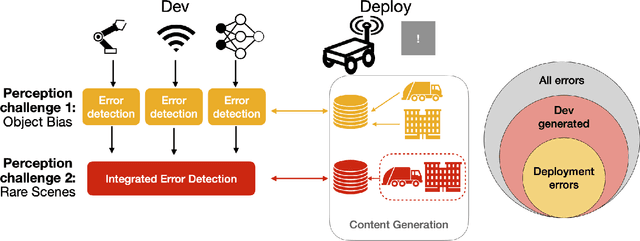

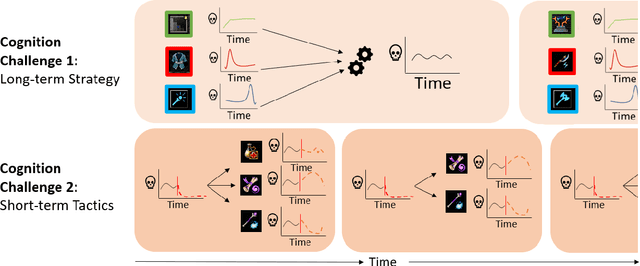

Anticipatory thinking drives our ability to manage risk (both identification and mitigation) in everyday life, from bringing an umbrella when it might rain to buying car insurance. As AI systems become part of everyday life, they too have begun to manage risk. Autonomous vehicles log millions of miles, and StarCraft and Go agents have capabilities similar to those of humans, implicitly managing the risks presented by their opponents. To further increase performance in these tasks, out-of-distribution evaluation can characterize a model's bias, which we view as a type of risk management. However, learning to identify and mitigate low-frequency, high-impact risks is at odds with the observational bias required to train machine learning models. StarCraft and Go are closed-world domains whose risks are known and whose mitigations are well documented, making them ideal for learning through repetition. Adversarial filtering datasets provide difficult examples but are laborious to curate and static, both of which are barriers to real-world risk management. Adversarial robustness focuses on model poisoning under the assumption that there is an adversary with malicious intent, without considering naturally occurring adversarial examples. These methods are all important steps towards improving risk management, but they do not consider open worlds. We unify these open-world risk management challenges with two contributions. The first is our perception challenges, designed for agents with imperfect perceptions of their environment whose consequences have a high impact. The second is our cognition challenges, designed for agents that must dynamically adjust their risk exposure as they identify new risks and learn new mitigations. Our goal with these challenges is to spur research into solutions that assess and improve the anticipatory thinking required by AI agents to manage risk in open worlds and, ultimately, the real world.
