Matthew Molineaux

A Framework for Characterizing Novel Environment Transformations in General Environments

May 07, 2023

Abstract: To be robust to surprising developments, an intelligent agent must be able to respond to many different types of unexpected change in the world. To date, there are no general frameworks for defining and characterizing the types of environment changes that are possible. We introduce a formal and theoretical framework for defining and categorizing environment transformations, that is, changes to the world an agent inhabits. We introduce two types of environment transformation: R-transformations, which modify environment dynamics, and T-transformations, which modify the generation process that produces scenarios. We present a new language for describing domains, scenario generators, and transformations, called the Transformation and Simulator Abstraction Language (T-SAL), and a logical formalism that rigorously defines these concepts. Then, we offer the first formal and computational set of tests for eight categories of environment transformations. This domain-independent framework paves the way for describing unambiguous classes of novelty, constrained and domain-independent random generation of environment transformations, replication of environment transformation studies, and fair evaluation of agent robustness.

Self-directed Learning of Action Models using Exploratory Planning

Mar 07, 2022

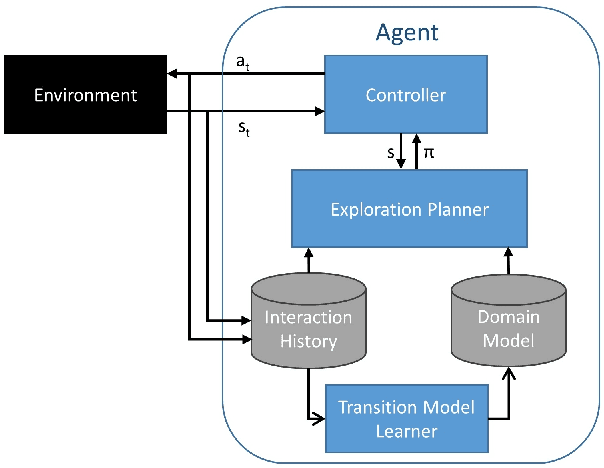

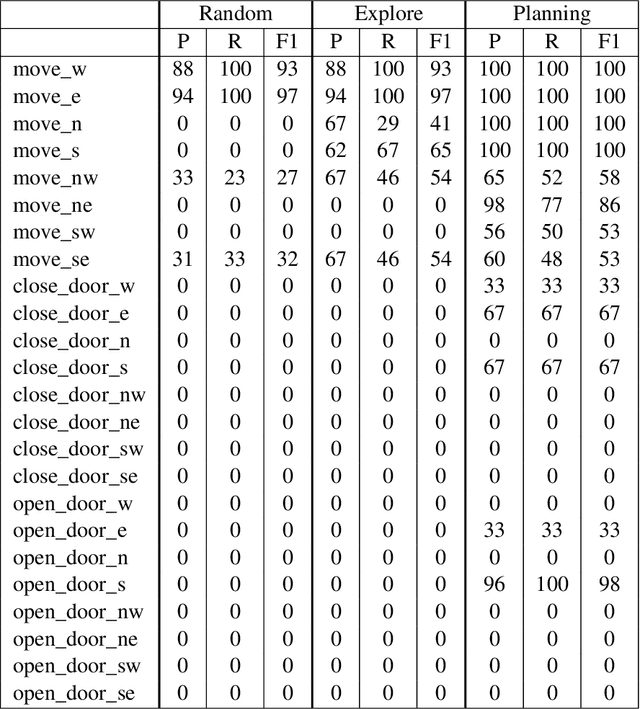

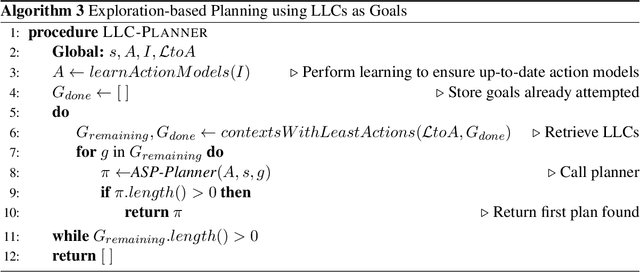

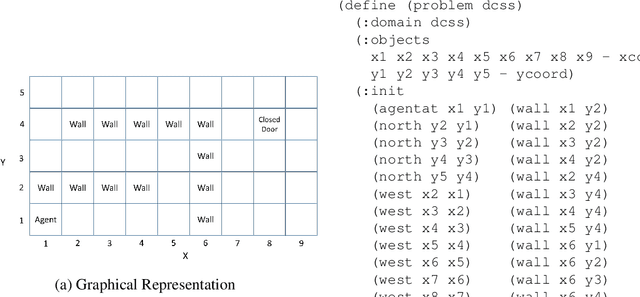

Abstract: Complex, real-world domains may not be fully modeled for an agent, especially if the agent has never operated in the domain before. The agent's ability to plan and act effectively in such a domain is influenced by its knowledge of when it can perform specific actions and of the effects of those actions. We describe a novel exploratory planning agent that is capable of learning action preconditions and effects without expert traces or a given goal. The agent's architecture allows it to perform both exploratory and goal-directed actions, which raises important considerations for how exploratory planning and goal planning should be controlled, as well as how the agent's behavior should be explained to any teammates it may have. The contributions of this work include a new representation for contexts called Lifted Linked Clauses, a novel exploration action selection approach using these clauses, an exploration planner that uses Lifted Linked Clauses as goals in order to reach new states, and an empirical evaluation in a scenario from an exploration-focused video game demonstrating that Lifted Linked Clauses improve exploration and action model learning against non-planning baseline agents.
