Duncan S. Callaway

Predicting Electricity Infrastructure Induced Wildfire Risk in California

Jun 06, 2022

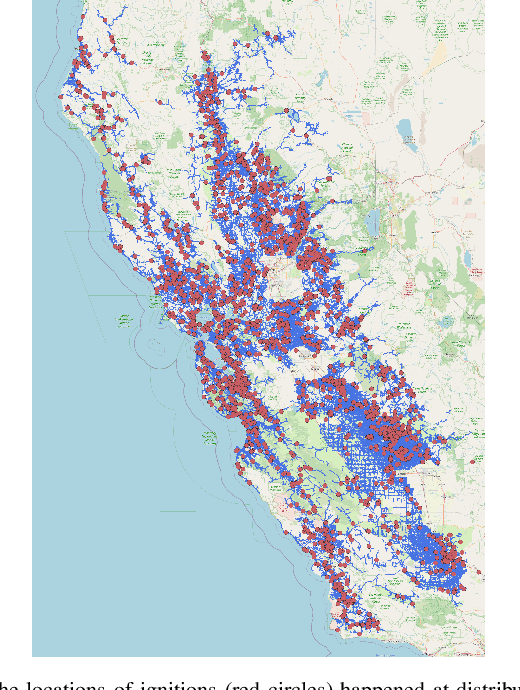

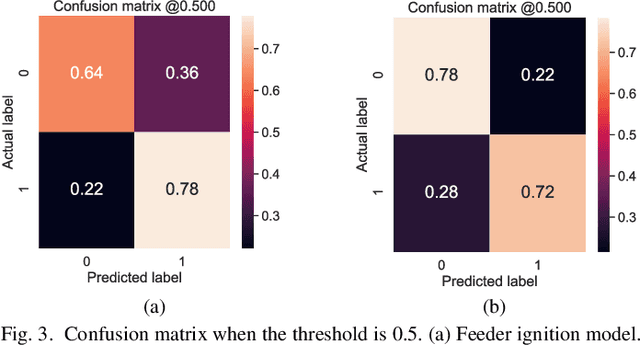

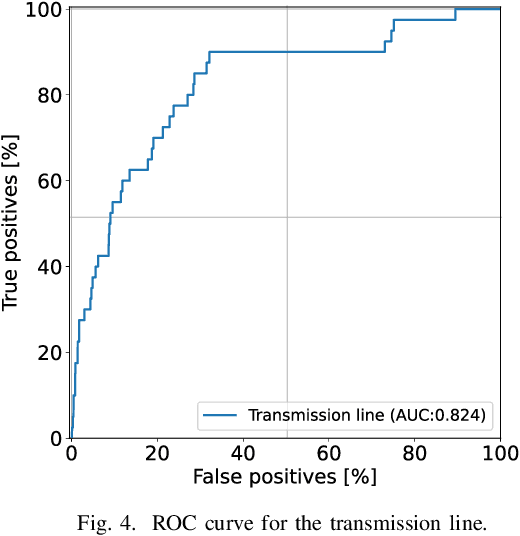

Abstract: This paper examines the use of risk models to predict the timing and location of wildfires caused by electricity infrastructure. Our data include historical ignition and wire-down points triggered by grid infrastructure, collected between 2015 and 2019 in Pacific Gas & Electric territory, along with weather and vegetation data and very high resolution data on grid infrastructure, including location, age, and materials. With these data we explore a range of machine learning methods and strategies to manage training data imbalance. The best area under the receiver operating characteristic curve (AUC) we obtain is 0.776 for distribution feeder ignitions and 0.824 for transmission line wire-down events, both using the histogram-based gradient boosting tree algorithm (HGB) with under-sampling. We then use these models to identify which information provides the most predictive value. After line length, we find that weather and vegetation features dominate the list of the most important features for ignition or wire-down risk. Distribution ignition models depend more on slow-varying vegetation variables such as burn index, energy release content, and tree height, whereas transmission wire-down models rely more on primary weather variables such as wind speed and precipitation. These results point to the importance of improved vegetation modeling for feeder ignition risk models, and of improved weather forecasting for transmission wire-down models. We observe that infrastructure features make small but meaningful improvements to risk model predictive power.
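The two evaluation ingredients named in the abstract, under-sampling of the majority class and the area under the ROC curve, can be sketched in a few lines of standard-library Python. This is only an illustration under stated assumptions: the function names `undersample` and `roc_auc` are hypothetical, labels are assumed to be 0/1, and the classifier itself (HGB in the paper) is deliberately left out.

```python
import random

def undersample(rows, labels, seed=0):
    """Randomly drop majority-class rows until both classes have equal counts.

    Assumes binary labels (0 = no event, 1 = ignition or wire-down event).
    """
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    minority, majority = (pos, neg) if len(pos) <= len(neg) else (neg, pos)
    # Keep every minority row and an equally sized random subset of the majority.
    keep = minority + rng.sample(majority, len(minority))
    rng.shuffle(keep)
    return [rows[i] for i in keep], [labels[i] for i in keep]

def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random negative.

    Ties between a positive and a negative score count as one half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

In practice, under-sampling would be applied to the training split only, so that the AUC is still measured on data with the original class balance.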

Approximate Multi-Agent Fitted Q Iteration

Apr 19, 2021

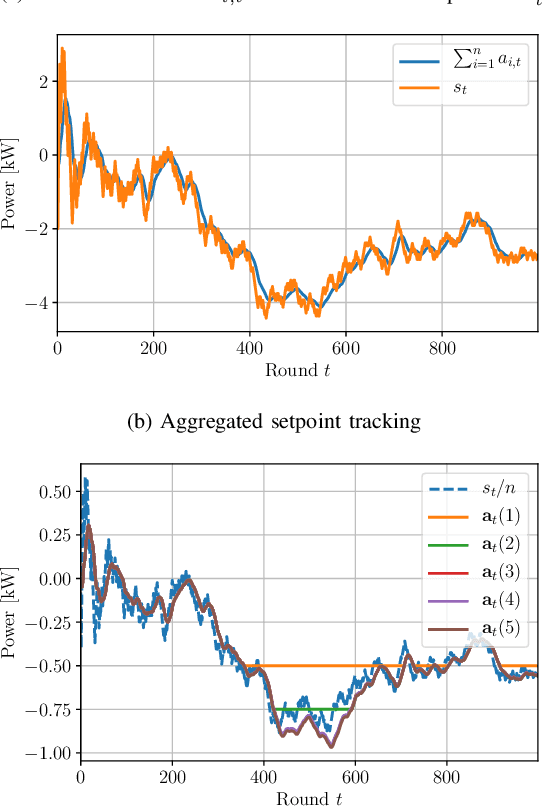

Abstract: We formulate an efficient approximation for multi-agent batch reinforcement learning, the approximate multi-agent fitted Q iteration (AMAFQI). We present a detailed derivation of our approach. We propose an iterative policy search and show that it yields a greedy policy with respect to multiple approximations of the centralized, standard Q-function. In each iteration and policy evaluation, AMAFQI requires a number of computations that scales linearly with the number of agents, whereas the analogous number of computations increases exponentially for the fitted Q iteration (FQI), one of the most commonly used approaches in batch reinforcement learning. This property of AMAFQI is fundamental for the design of a tractable multi-agent approach. We evaluate the performance of AMAFQI and compare it to FQI in numerical simulations. Numerical examples illustrate the significant computation time reduction when using AMAFQI instead of FQI in multi-agent problems and corroborate the similar decision-making performance of both approaches.
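The linear-versus-exponential gap can be made concrete with simple counting. The sketch below assumes every agent has the same number of discrete actions and that a centralized method must enumerate joint actions while a per-agent method looks at each agent's actions in turn. It does not reproduce the paper's operation counts, and both function names are hypothetical.

```python
def joint_action_count(actions_per_agent: int, num_agents: int) -> int:
    """Number of joint actions a centralized maximization must enumerate."""
    return actions_per_agent ** num_agents

def per_agent_action_count(actions_per_agent: int, num_agents: int) -> int:
    """Number of actions examined when each agent is handled separately."""
    return actions_per_agent * num_agents

if __name__ == "__main__":
    # Example: 3 actions per agent, growing number of agents.
    for m in (2, 5, 10):
        print(m, joint_action_count(3, m), per_agent_action_count(3, m))
```

With 3 actions per agent and 10 agents, the joint count is 59049 while the per-agent count is 30.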

Online Convex Optimization with Binary Constraints

May 05, 2020

Abstract: We consider online optimization with binary decision variables and convex loss functions. We design a new algorithm, binary online gradient descent (bOGD), and bound its expected dynamic regret. The bound is sublinear in time and linear in the cumulative variation of the relaxed, continuous round optima. We apply bOGD to demand response with thermostatically controlled loads, in which binary constraints model discrete on/off settings. We also model uncertainty and varying load availability, which depend on temperature deadbands, lock-out of cooling units, and manual overrides. We test the performance of bOGD in several simulations based on demand response.
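One plausible way to combine a continuous relaxation with binary decisions is a projected gradient step on the unit box followed by randomized rounding. The sketch below illustrates that generic idea only: the abstract does not specify the update or rounding rule of bOGD, so the scheme and the names `project_unit_box`, `relaxed_ogd_step`, and `randomized_round` are all assumptions.

```python
import random

def project_unit_box(x):
    """Project each coordinate onto [0, 1], the relaxation of {0, 1}."""
    return [min(1.0, max(0.0, xi)) for xi in x]

def relaxed_ogd_step(x, grad, step):
    """One projected online gradient descent step on the relaxed variable."""
    return project_unit_box([xi - step * gi for xi, gi in zip(x, grad)])

def randomized_round(x, rng):
    """Sample a binary decision whose expectation equals the relaxed point."""
    return [1 if rng.random() < xi else 0 for xi in x]

if __name__ == "__main__":
    # Hypothetical demand response round: track a power setpoint with on/off loads.
    rng = random.Random(0)
    power = [1.0, 2.0, 1.5]          # assumed load ratings
    x = [0.5, 0.5, 0.5]              # relaxed on/off state
    for setpoint in (2.0, 2.5, 3.0):
        error = sum(p * xi for p, xi in zip(power, x)) - setpoint
        grad = [2.0 * error * p for p in power]  # gradient of squared tracking error
        x = relaxed_ogd_step(x, grad, step=0.05)
        print(randomized_round(x, rng), x)
```

Because each rounded coordinate is a Bernoulli draw with mean equal to the relaxed value, performance statements for such a scheme are naturally made in expectation.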

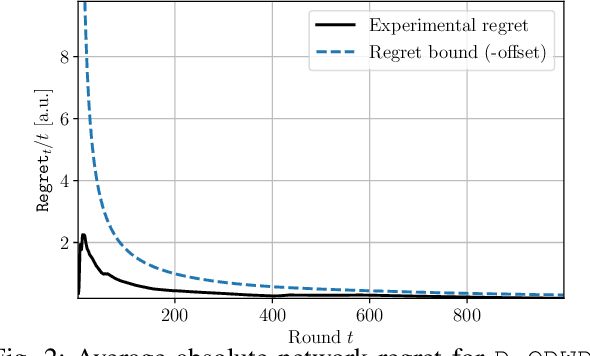

Dynamic and Distributed Online Convex Optimization for Demand Response of Commercial Buildings

Jan 31, 2020

Abstract: We extend the regret analysis of the online distributed weighted dual averaging (DWDA) algorithm [1] to the dynamic setting and provide the tightest dynamic regret bound known to date for a distributed online convex optimization (OCO) algorithm. Our bound is linear in the cumulative difference between consecutive optima and does not depend explicitly on the time horizon. We use dynamic-online DWDA (D-ODWDA) to formulate a performance-guaranteed distributed online demand response approach for heating, ventilation, and air-conditioning (HVAC) systems of commercial buildings. We demonstrate the performance of our approach for fast timescale demand response in numerical simulations and obtain demand response decisions that closely reproduce the centralized optimal ones.
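For readers unfamiliar with the terminology, the quantities in this abstract can be written out using the standard definitions from the OCO literature. The notation here is assumed rather than taken from the paper: $f_t$ is the round-$t$ loss, $x_t$ the decision played, $\mathcal{X}$ the feasible set, and $a$, $b$ are placeholder constants.

```latex
% Dynamic regret: cumulative loss compared with the per-round minimizers.
\[
  \mathrm{Reg}^{d}_{T} \;=\; \sum_{t=1}^{T} f_t(x_t) \;-\; \sum_{t=1}^{T} f_t(x_t^{*}),
  \qquad x_t^{*} \in \arg\min_{x \in \mathcal{X}} f_t(x).
\]
% Cumulative difference between consecutive optima (path length).
\[
  V_T \;=\; \sum_{t=2}^{T} \bigl\lVert x_t^{*} - x_{t-1}^{*} \bigr\rVert .
\]
% A bound that is linear in V_T with no explicit dependence on T has the form
\[
  \mathrm{Reg}^{d}_{T} \;\le\; a + b\, V_T ,
  \qquad a,\, b \text{ independent of } T .
\]
```

Under a bound of this form, the regret stays bounded whenever the optima drift by a bounded total amount, regardless of how long the algorithm runs.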
