Duncan C. McElfresh

Human Comprehension of Fairness in Machine Learning

Dec 17, 2019

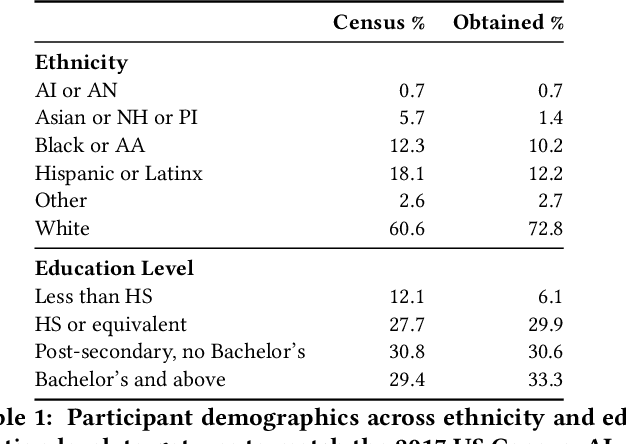

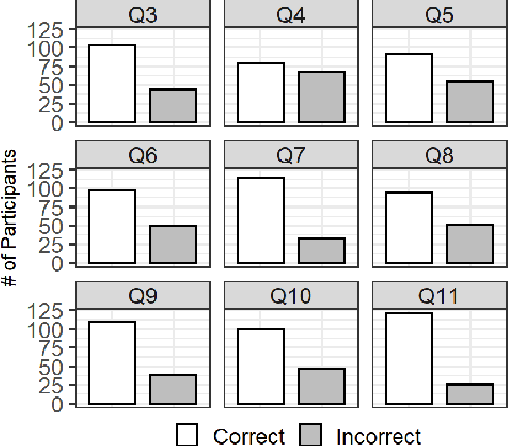

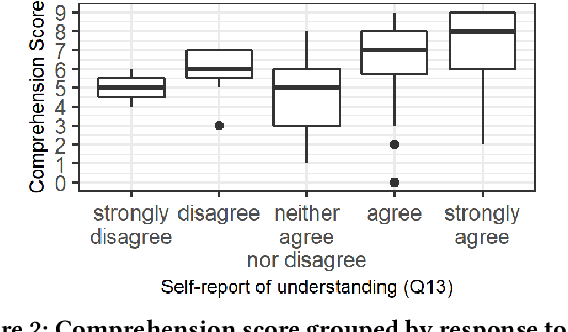

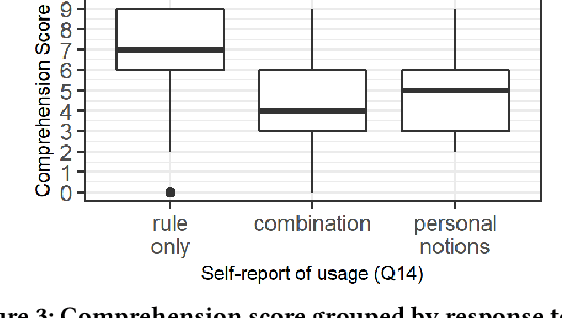

Abstract: Bias in machine learning has manifested injustice in several areas, such as medicine, hiring, and criminal justice. In response, computer scientists have developed myriad definitions of fairness to correct this bias in fielded algorithms. While some definitions are based on established legal and ethical norms, others are largely mathematical. It is unclear whether the general public agrees with these fairness definitions, and perhaps more importantly, whether they understand these definitions. We take initial steps toward bridging this gap between ML researchers and the public, by addressing the question: does a non-technical audience understand a basic definition of ML fairness? We develop a metric to measure comprehension of one such definition: demographic parity. We validate this metric using online surveys, and study the relationship between comprehension and sentiment, demographics, and the application at hand.
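For readers unfamiliar with the term, demographic parity is commonly stated as requiring that the rate of positive decisions be equal across groups. The sketch below illustrates that standard definition only; it is not the comprehension metric developed in the paper. The function names and the toy data are assumptions made for illustration.

```python
from collections import defaultdict


def positive_rates(decisions, groups):
    """Return the fraction of positive decisions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in positive-decision rate between any two groups.

    A gap of 0 means demographic parity holds exactly on this data.
    """
    rates = positive_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: 1 = favorable decision, 0 = unfavorable.
decisions = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = positive_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

In this toy data, group "a" receives a favorable decision three times out of four and group "b" once out of four, so the decisions are far from satisfying demographic parity.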

Balancing Lexicographic Fairness and a Utilitarian Objective with Application to Kidney Exchange

Sep 07, 2017

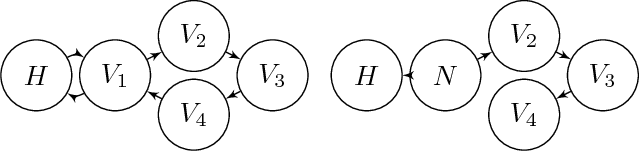

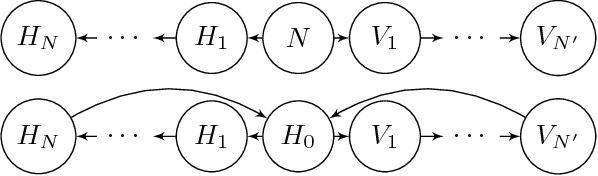

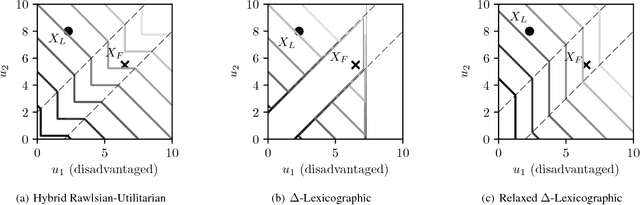

Abstract: Balancing fairness and efficiency in resource allocation is a classical economic and computational problem. The price of fairness measures the worst-case loss of economic efficiency when using an inefficient but fair allocation rule; for indivisible goods in many settings, this price is unacceptably high. One such setting is kidney exchange, where needy patients swap willing but incompatible kidney donors. In this work, we close an open problem regarding the theoretical price of fairness in modern kidney exchanges. We then propose a general hybrid fairness rule that balances a strict lexicographic preference ordering over classes of agents and a utilitarian objective that maximizes economic efficiency. We develop a utility function for this rule that favors disadvantaged groups lexicographically, but switches to a utilitarian objective if the cost to overall efficiency becomes too high. This rule has only one parameter, which is proportional to a bound on the price of fairness and can be adjusted by policymakers. We apply this rule to real data from a large kidney exchange and show that our hybrid rule produces more reliable outcomes than other fairness rules.
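The switching behavior described in the abstract can be illustrated with a toy selection rule. This is a loose sketch under stated assumptions, not the utility function from the paper: the candidate allocations, the `alpha` tolerance (a hypothetical bound on the fraction of total utility that may be given up), and the function name are all invented for illustration.

```python
def choose_allocation(candidates, alpha):
    """Pick an allocation from (name, total_utility, disadvantaged_utility) tuples.

    Assumed rule: prefer the allocation that is best for the disadvantaged
    group (ties broken by total utility), unless choosing it loses more than
    a fraction `alpha` of the best achievable total utility. In that case,
    fall back to the utilitarian (maximum total utility) allocation.
    """
    utilitarian = max(candidates, key=lambda c: c[1])
    lexicographic = max(candidates, key=lambda c: (c[2], c[1]))
    best_total = utilitarian[1]
    # Fractional efficiency loss of the lexicographically fair choice.
    loss = (best_total - lexicographic[1]) / best_total if best_total > 0 else 0.0
    return lexicographic if loss <= alpha else utilitarian


# Hypothetical candidates: the fair option gives up 20% of total utility.
candidates = [("efficient", 10, 2), ("fair", 8, 5)]

tolerant = choose_allocation(candidates, alpha=0.25)
strict = choose_allocation(candidates, alpha=0.10)
print(tolerant[0], strict[0])
```

With a tolerance of 0.25 the fair allocation is kept, because its 20% efficiency loss is within the bound; with a tolerance of 0.10 the rule falls back to the efficient allocation.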
