Dor Bank

Autoencoders

Mar 12, 2020

Abstract: An autoencoder is a specific type of neural network that is mainly designed to encode the input into a compressed and meaningful representation and then decode it back such that the reconstructed input is as similar as possible to the original one. This chapter surveys the different types of autoencoders that are mainly used today. It also describes various applications and use cases of autoencoders.
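As a rough illustration of the encode/decode idea in this abstract, the sketch below uses a tied-weight linear map with a one-dimensional bottleneck. It is not taken from the chapter: the weight vector `w`, the sample inputs, and all function names are assumptions chosen for illustration, and real autoencoders learn their weights and usually include nonlinearities.

```python
def encode(x, w):
    """Compress the input vector x to a single scalar code (its projection onto w)."""
    return sum(wi * xi for wi, xi in zip(w, x))


def decode(z, w):
    """Map the scalar code z back to input space using the same (tied) weights."""
    return [z * wi for wi in w]


def reconstruction_error(x, x_hat):
    """Squared error between the input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


# Hypothetical unit-norm weight vector; in practice it would be learned.
w = [0.6, 0.8]

x_on = [3.0, 4.0]    # lies along w, so the bottleneck loses nothing
x_off = [4.0, -3.0]  # orthogonal to w, so the bottleneck loses everything

err_on = reconstruction_error(x_on, decode(encode(x_on, w), w))
err_off = reconstruction_error(x_off, decode(encode(x_off, w), w))
```

Inputs aligned with the direction the code captures are reconstructed almost exactly, while inputs orthogonal to it are not, which is the sense in which the compressed representation has to be "meaningful" for the data at hand.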

On the relationship between Dropout and Equiangular Tight Frames

Oct 14, 2018

Abstract: Dropout is a popular regularization technique in neural networks. Yet, the reason for its success is still not fully understood. This paper provides a new interpretation of Dropout from a frame theory perspective. This leads to a novel regularization technique for neural networks that minimizes the cross-correlation between filters in the network. We demonstrate its applicability in convolutional and fully connected layers in both feed-forward and recurrent networks.
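The abstract does not give the exact form of the regularizer, so the following is only a generic sketch of what a penalty on cross-correlation between filters could look like: the sum of squared inner products between distinct, L2-normalized filters. The function name and this particular formula are assumptions, not the paper's definition.

```python
import math


def cross_correlation_penalty(filters):
    """Sum of squared correlations between every pair of distinct filters.

    Each filter is a flat list of weights. Filters are L2-normalized first,
    so the penalty depends only on their directions, not their scales.
    Assumes no filter is the all-zero vector.
    """
    unit = []
    for f in filters:
        norm = math.sqrt(sum(x * x for x in f))
        unit.append([x / norm for x in f])

    penalty = 0.0
    for i in range(len(unit)):
        for j in range(i + 1, len(unit)):
            dot = sum(a * b for a, b in zip(unit[i], unit[j]))
            penalty += dot * dot
    return penalty


orthogonal = cross_correlation_penalty([[1.0, 0.0], [0.0, 1.0]])
redundant = cross_correlation_penalty([[1.0, 0.0], [2.0, 0.0]])
```

The penalty is zero for mutually orthogonal filters and grows as filters become redundant; in training it would presumably be added to the task loss with a weighting coefficient.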

Improved Training for Self-Training by Confidence Assessments

Apr 05, 2018

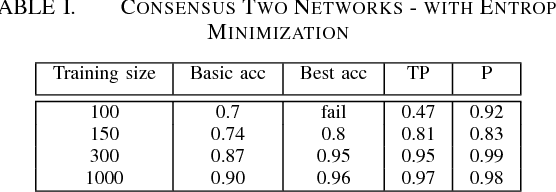

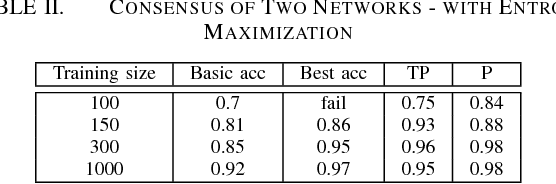

Abstract: It is well known that for some tasks, labeled data sets may be hard to gather. Therefore, we tackle here the problem of having insufficient training data. We examine methods for learning from unlabeled data after an initial training on a limited labeled data set. The suggested approach can be used as an online learning method on the unlabeled test set. In the general classification task, whenever we predict a label with high enough confidence, we treat it as a true label and train on the sample accordingly. For the semantic segmentation task, a classic example of an expensive data labeling process, we do so pixel-wise. Our suggested approaches were applied to the MNIST data set as a proof of concept for a vision classification task and to the ADE20K data set in order to tackle the semi-supervised semantic segmentation problem.
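The confidence-based selection step described in the abstract can be sketched as follows. This is a minimal illustration under assumptions: the function name, the 0.95 threshold, and the probability values are hypothetical, and the paper's actual confidence assessment may differ from a plain threshold on the top class probability.

```python
def select_confident(probabilities, threshold=0.95):
    """Return (sample_index, predicted_label) pairs for confident predictions.

    `probabilities` holds one list of class probabilities per unlabeled sample.
    A prediction is kept as a pseudo-label only if its top probability
    reaches `threshold`; all other samples stay unlabeled.
    """
    selected = []
    for index, probs in enumerate(probabilities):
        label = max(range(len(probs)), key=probs.__getitem__)
        if probs[label] >= threshold:
            selected.append((index, label))
    return selected


# Hypothetical model outputs for four unlabeled samples over three classes.
probs = [
    [0.98, 0.01, 0.01],
    [0.40, 0.35, 0.25],
    [0.02, 0.97, 0.01],
    [0.10, 0.10, 0.80],
]

pseudo_labels = select_confident(probs, threshold=0.95)
```

The selected pairs would then be added to the training set as if they were ground truth. For segmentation, the same rule would be applied per pixel rather than per image.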
