Dongjia Zhao

A Novel Cross-Perturbation for Single Domain Generalization

Aug 02, 2023

Abstract:Single domain generalization aims to enhance the ability of the model to generalize to unknown domains when trained on a single source domain. However, the limited diversity in the training data hampers the learning of domain-invariant features, resulting in compromised generalization performance. To address this, data perturbation (augmentation) has emerged as a crucial method to increase data diversity. Nevertheless, existing perturbation methods often focus on either image-level or feature-level perturbations independently, neglecting their synergistic effects. To overcome these limitations, we propose CPerb, a simple yet effective cross-perturbation method. Specifically, CPerb utilizes both horizontal and vertical operations. Horizontally, it applies image-level and feature-level perturbations to enhance the diversity of the training data, mitigating the issue of limited diversity in single-source domains. Vertically, it introduces multi-route perturbation to learn domain-invariant features from different perspectives of samples with the same semantic category, thereby enhancing the generalization capability of the model. Additionally, we propose MixPatch, a novel feature-level perturbation method that exploits local image style information to further diversify the training data. Extensive experiments on various benchmark datasets validate the effectiveness of our method.

Patch-aware Batch Normalization for Improving Cross-domain Robustness

Apr 06, 2023

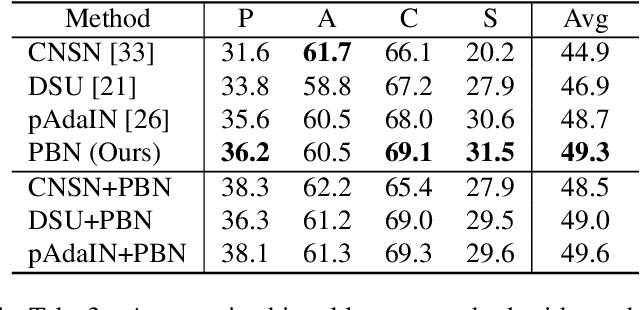

Abstract:Despite the significant success of deep learning in computer vision tasks, cross-domain tasks still present a challenge in which the model's performance will degrade when the training set and the test set follow different distributions. Most existing methods employ adversarial learning or instance normalization for achieving data augmentation to solve this task. In contrast, considering that the batch normalization (BN) layer may not be robust for unseen domains and there exist the differences between local patches of an image, we propose a novel method called patch-aware batch normalization (PBN). To be specific, we first split feature maps of a batch into non-overlapping patches along the spatial dimension, and then independently normalize each patch to jointly optimize the shared BN parameter at each iteration. By exploiting the differences between local patches of an image, our proposed PBN can effectively enhance the robustness of the model's parameters. Besides, considering the statistics from each patch may be inaccurate due to their smaller size compared to the global feature maps, we incorporate the globally accumulated statistics with the statistics from each batch to obtain the final statistics for normalizing each patch. Since the proposed PBN can replace the typical BN, it can be integrated into most existing state-of-the-art methods. Extensive experiments and analysis demonstrate the effectiveness of our PBN in multiple computer vision tasks, including classification, object detection, instance retrieval, and semantic segmentation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge