Dong In Lee

EditSplat: Multi-View Fusion and Attention-Guided Optimization for View-Consistent 3D Scene Editing with 3D Gaussian Splatting

Dec 16, 2024

Abstract: Recent advancements in 3D editing have highlighted the potential of text-driven methods in real-time, user-friendly AR/VR applications. However, current methods rely on 2D diffusion models without adequately considering multi-view information, resulting in multi-view inconsistency. While 3D Gaussian Splatting (3DGS) significantly improves rendering quality and speed, its 3D editing process encounters difficulties with inefficient optimization, as pre-trained Gaussians retain excessive source information, hindering optimization. To address these limitations, we propose EditSplat, a novel 3D editing framework that integrates Multi-view Fusion Guidance (MFG) and Attention-Guided Trimming (AGT). Our MFG ensures multi-view consistency by incorporating essential multi-view information into the diffusion process, leveraging classifier-free guidance from the text-to-image diffusion model and the geometric properties of 3DGS. Additionally, our AGT leverages the explicit representation of 3DGS to selectively prune and optimize 3D Gaussians, enhancing optimization efficiency and enabling precise, semantically rich local edits. Through extensive qualitative and quantitative evaluations, EditSplat achieves superior multi-view consistency and editing quality over existing methods, significantly enhancing overall efficiency.
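The abstract mentions classifier-free guidance without giving a formula. As a rough illustration, the sketch below shows only the standard classifier-free guidance combination of an unconditional and a text-conditioned noise prediction. It is not the paper's MFG formulation, which additionally fuses multi-view information. The function name, the flat-list representation of the noise predictions, and the `scale` parameter are assumptions made for this example.

```python
def classifier_free_guidance(eps_uncond, eps_cond, scale):
    """Standard classifier-free guidance (not the paper's MFG).

    eps_uncond: noise prediction without the text condition (flat list).
    eps_cond:   noise prediction with the text condition (flat list).
    scale:      guidance scale; 0 returns eps_uncond, 1 returns eps_cond.
    """
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("noise predictions must have the same length")
    # Move from the unconditional prediction toward (and past) the conditional one.
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


if __name__ == "__main__":
    # Toy values only; real predictions are image-shaped tensors.
    print(classifier_free_guidance([0.0, 1.0], [1.0, 1.0], 2.0))
```

A scale above 1 extrapolates beyond the conditional prediction, which is how text conditioning is typically strengthened in diffusion-based editing.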

WateRF: Robust Watermarks in Radiance Fields for Protection of Copyrights

May 03, 2024

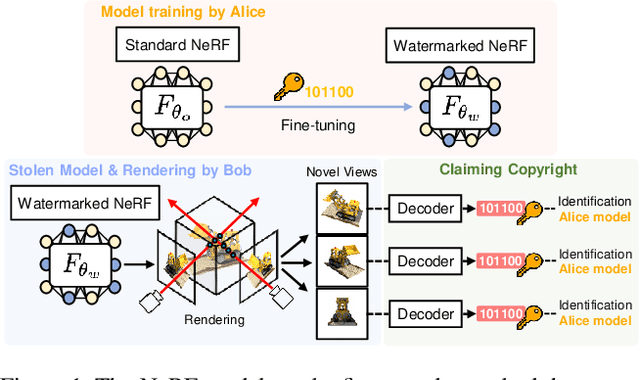

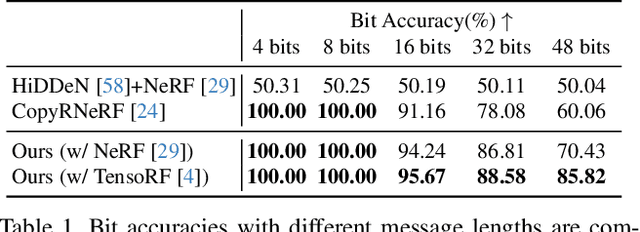

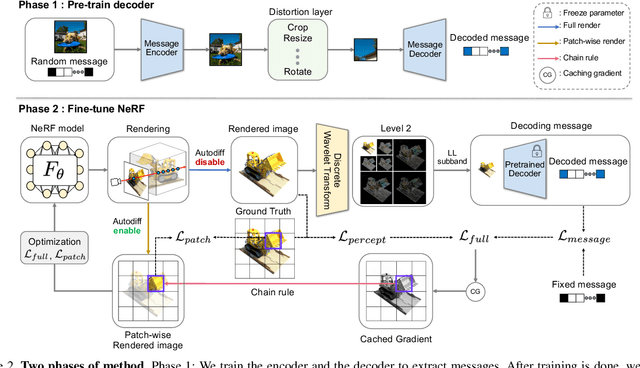

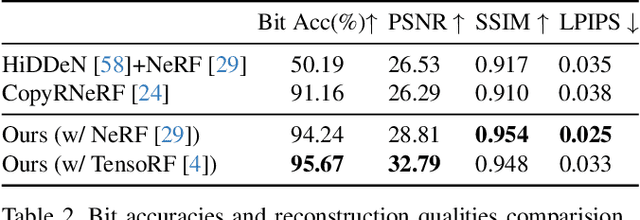

Abstract: Advances in Neural Radiance Fields (NeRF) research offer extensive applications in diverse domains, but protecting their copyrights has not yet been researched in depth. Recently, NeRF watermarking has been considered one of the pivotal solutions for safely deploying NeRF-based 3D representations. However, existing methods are designed to apply to either implicit or explicit NeRF representations, but not both. In this work, we introduce an innovative watermarking method that can be employed in both representations of NeRF. This is achieved by fine-tuning NeRF to embed binary messages in the rendering process. In detail, we propose utilizing the discrete wavelet transform in the NeRF space for watermarking. Furthermore, we adopt a deferred back-propagation technique and introduce a combination with the patch-wise loss to improve rendering quality and bit accuracy with minimal trade-offs. We evaluate our method in three different aspects: capacity, invisibility, and robustness of the embedded watermarks in the 2D-rendered images. Our method achieves state-of-the-art performance with faster training speed over the compared state-of-the-art methods.
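To make the discrete wavelet transform step more concrete, here is a minimal sketch of one level of a 2D DWT applied to a small grayscale image. The abstract does not say which wavelet family is used or which subband carries the message, so the Haar wavelet, the function name `haar_dwt2`, and the list-of-rows image format are all assumptions for illustration, not the authors' implementation.

```python
def haar_dwt2(image):
    """One level of the 2D orthonormal Haar transform (illustrative assumption).

    image: list of rows of numbers, with even height and width.
    Returns a dict of four half-resolution subbands. Subband naming
    conventions vary between libraries; here the first letter refers to
    the vertical filter and the second to the horizontal filter.
    """
    if not image or not image[0]:
        raise ValueError("image must be non-empty")
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("height and width must both be even")

    bands = {name: [] for name in ("LL", "LH", "HL", "HH")}
    for i in range(0, rows, 2):
        out = {name: [] for name in bands}
        for j in range(0, cols, 2):
            # Each 2x2 block [[a, b], [c, d]] yields one coefficient per subband.
            a, b = image[i][j], image[i][j + 1]
            c, d = image[i + 1][j], image[i + 1][j + 1]
            out["LL"].append((a + b + c + d) / 2)  # local average
            out["LH"].append((a - b + c - d) / 2)  # left/right difference
            out["HL"].append((a + b - c - d) / 2)  # top/bottom difference
            out["HH"].append((a - b - c + d) / 2)  # diagonal difference
        for name in bands:
            bands[name].append(out[name])
    return bands


if __name__ == "__main__":
    print(haar_dwt2([[1, 2], [3, 4]]))
```

Because this transform is orthonormal, the sum of squared coefficients across the four subbands equals the sum of squared pixel values of the input.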

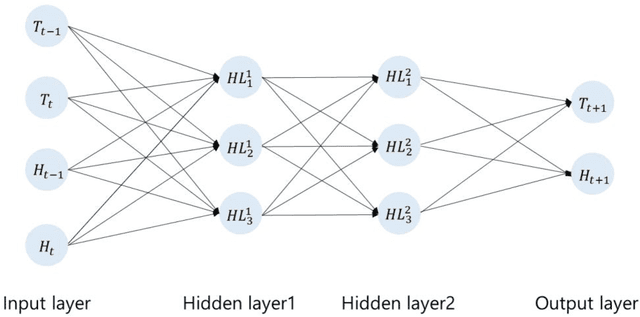

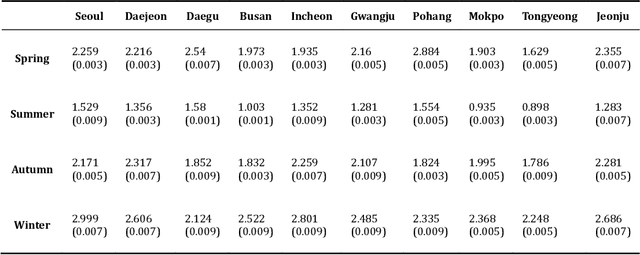

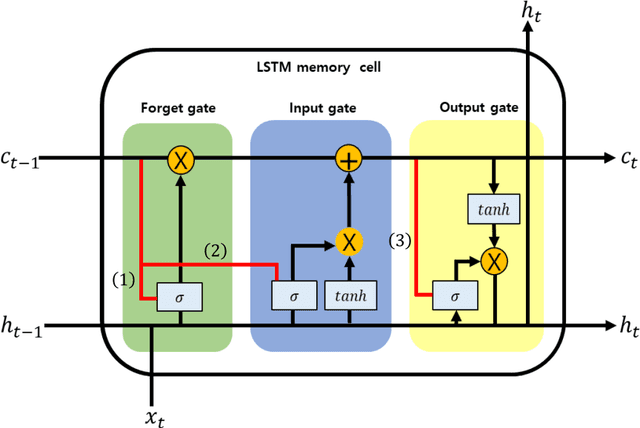

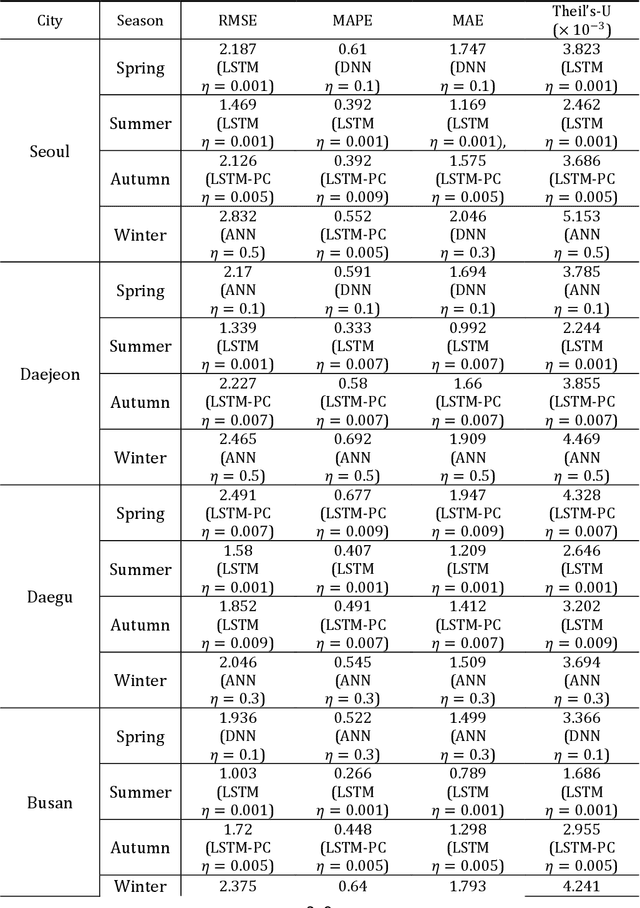

Dynamical prediction of two meteorological factors using the deep neural network and the long short term memory

Jan 16, 2021

Abstract: Calculating and analyzing the accuracy of temperature and humidity predictions is an important part of quantitative meteorological forecasting. This study adapts existing neural network methods with the aim of improving predictive accuracy. To this end, we analyze the predictive accuracy and performance of neural networks using two combined meteorological factors (temperature and humidity). Simulation studies are performed with the artificial neural network (ANN), deep neural network (DNN), extreme learning machine (ELM), long short-term memory (LSTM), and long short-term memory with peephole connections (LSTM-PC) machine learning methods, and the prediction accuracies obtained from each method are compared with one another. To validate our observations, data are extracted from low-frequency time series of ten metropolitan cities in South Korea from March 2014 to February 2020. In robustness tests, LSTM is found to outperform the other four methods in predictive accuracy. In particular, in the test results, the LSTM temperature prediction for summer in Tongyeong has a root mean squared error (RMSE) of 0.866, lower than that of the other neural network methods, while the mean absolute percentage error (MAPE) of LSTM for humidity prediction is 5.525 in summer in Mokpo, significantly better than in the other metropolitan cities.
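For readers unfamiliar with the two error measures reported above, the following sketch computes RMSE and MAPE using their standard definitions. The function names and the sample values are illustrative assumptions; the numbers are not data from the study.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between two equal-length sequences."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(squared))


def mape(actual, predicted):
    """Mean absolute percentage error, in percent.

    Undefined when any actual value is zero, so that case is rejected.
    """
    if len(actual) != len(predicted) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    ratios = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100.0 * sum(ratios) / len(ratios)


if __name__ == "__main__":
    # Made-up observations and forecasts, for illustration only.
    observed = [100.0, 200.0]
    forecast = [110.0, 180.0]
    print(rmse(observed, forecast), mape(observed, forecast))
```

RMSE is expressed in the units of the predicted quantity, while MAPE is a relative measure in percent, so the two figures quoted in the abstract are not directly comparable with each other.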
