Dominick Hambrick

Enhancing Satellite Imagery using Deep Learning for the Sensor To Shooter Timeline

Mar 30, 2022

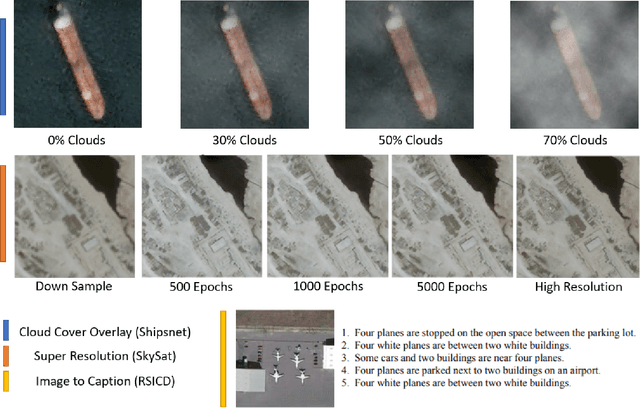

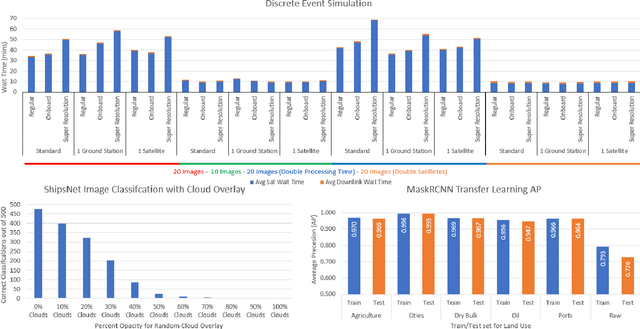

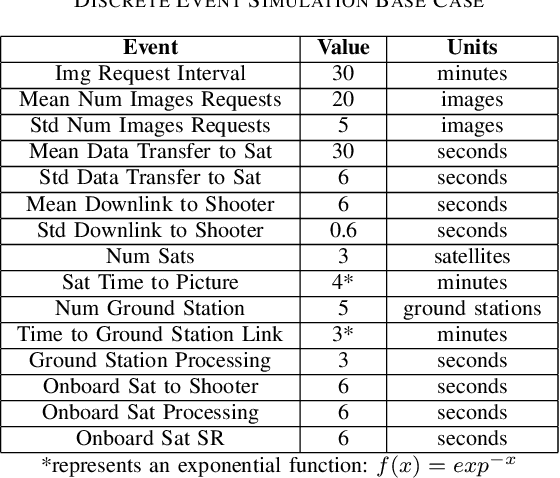

Abstract: The sensor to shooter timeline is affected by two main variables: satellite positioning and asset positioning. Speeding up satellite positioning by adding more sensors or by decreasing processing time is important only if there is a prepared shooter; otherwise, the main source of delay is getting the shooter into position. However, the intelligence community should work towards exploiting sensors at the highest speed and effectiveness possible. Achieving high effectiveness while keeping speed high is a tradeoff that must be considered in the sensor to shooter timeline. In this paper we investigate two main ideas: increasing the effectiveness of satellite imagery through image manipulation, and how on-board image manipulation would affect the sensor to shooter timeline. We cover these ideas in four scenarios: Discrete Event Simulation of onboard processing versus ground station processing, quality of information with cloud cover removal, information improvement with super resolution, and data reduction with image to caption. This paper will show how image manipulation techniques such as Super Resolution, Cloud Removal, and Image to Caption improve the quality of delivered information, and how those processes affect the sensor to shooter timeline.
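The tradeoff described in this abstract can be illustrated with a toy deterministic model. This is only a sketch and not the paper's Discrete Event Simulation: it assumes the sensor chain (collection, processing, downlink) and shooter positioning run in parallel, so the timeline is set by whichever finishes last. The function name, parameters, and the minute values are all hypothetical.

```python
def timeline_minutes(collect, process, downlink, shooter_positioning):
    """Toy model: total time is the slower of two parallel tracks.

    All arguments are durations in minutes. The parallel-track
    assumption is ours, made for illustration only.
    """
    sensor_chain = collect + process + downlink
    return max(sensor_chain, shooter_positioning)


# Shooter already prepared (15 min): faster processing shortens the timeline.
prepared_slow = timeline_minutes(30, 20, 10, shooter_positioning=15)
prepared_fast = timeline_minutes(30, 5, 10, shooter_positioning=15)

# Shooter far from position (120 min): faster processing changes nothing.
unprepared_slow = timeline_minutes(30, 20, 10, shooter_positioning=120)
unprepared_fast = timeline_minutes(30, 5, 10, shooter_positioning=120)
```

Under these assumed numbers, reducing processing time only helps in the prepared-shooter case, which is the qualitative point the abstract makes.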

Automating Defense Against Adversarial Attacks: Discovery of Vulnerabilities and Application of Multi-INT Imagery to Protect Deployed Models

Mar 29, 2021

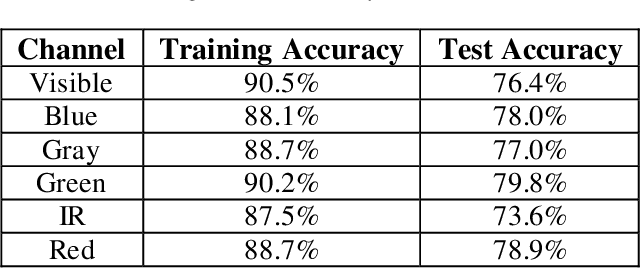

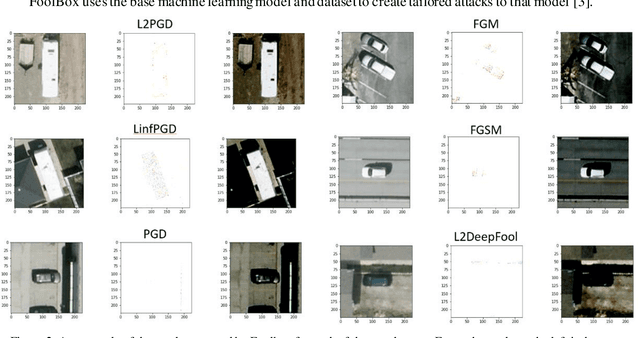

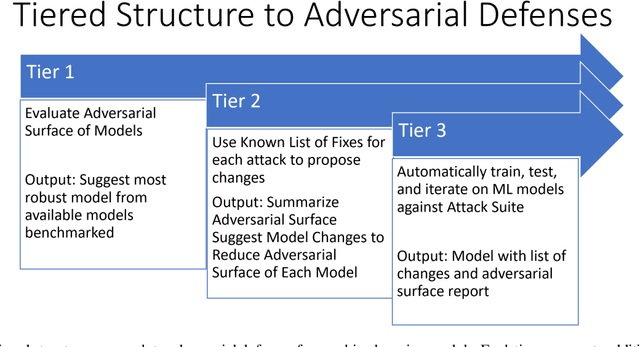

Abstract: Image classification is a common step in image recognition for machine learning in overhead applications. When applying popular model architectures like MobileNetV2, known vulnerabilities expose the model to counter-attacks, either mislabeling a known class or altering box location. This work proposes an automated approach to defend these models. We evaluate the use of multi-spectral image arrays and ensemble learners to combat adversarial attacks. The original contribution demonstrates the attack, proposes a remedy, and automates some key outcomes for protecting the model's predictions against adversaries. In rough analogy to defending cyber-networks, we combine techniques from both offensive ("red team") and defensive ("blue team") approaches, thus generating a hybrid protective outcome ("green team"). For machine learning, we demonstrate these methods on vehicles using three color channels plus infrared. The outcome uncovers vulnerabilities and corrects them with supplemental data inputs that are commonly available, particularly in overhead cases.

Training Set Effect on Super Resolution for Automated Target Recognition

Dec 04, 2019

Abstract: Single Image Super Resolution (SISR) is the process of mapping a low resolution image to a high resolution image. This inherently has applications in remote sensing as a way to increase the spatial resolution of satellite imagery, which suggests a possible improvement to automated target recognition in image classification and object detection. We explore the effect that different training sets have on SISR using the Super Resolution Generative Adversarial Network (SRGAN). We train five SRGANs on different land-use classes (e.g. agriculture, cities, ports) and test them on the same unseen data set. We attempt to find the qualitative and quantitative differences in SISR, binary classification, and object detection performance. We find that curated training sets that contain objects in the test ontology perform better in the computer vision tasks. However, Super Resolution (SR) might not be beneficial to certain problems and will see diminishing returns for data sets that are closer to being solved.
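To make the SISR setup concrete, the sketch below builds a low resolution image from a high resolution one, upsamples it with a naive nearest-neighbor baseline, and scores the result with PSNR. It is an illustration only: it does not use SRGAN, the abstract does not say which quantitative metrics the paper reports, and the random test image, the scale factor of 4, and all function names are assumptions.

```python
import numpy as np


def downsample(img, factor):
    """Block-average a 2D image by an integer factor (crops any remainder)."""
    h, w = img.shape
    h2, w2 = h - h % factor, w - w % factor
    img = img[:h2, :w2]
    return img.reshape(h2 // factor, factor, w2 // factor, factor).mean(axis=(1, 3))


def upsample_nearest(img, factor):
    """Nearest-neighbor upsampling: repeat each pixel along both axes."""
    return np.repeat(np.repeat(img, factor, axis=0), factor, axis=1)


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(max_value ** 2 / mse)


rng = np.random.default_rng(0)
high_res = rng.random((64, 64))          # stand-in for a real image tile
low_res = downsample(high_res, 4)        # simulated low resolution input
baseline = upsample_nearest(low_res, 4)  # naive reconstruction
score = psnr(high_res, baseline)
```

A learned SISR model would take the place of `upsample_nearest`, and its output could be scored against `high_res` in the same way to compare models trained on different data.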
