Dogan Altan

Physics-guided Neural Network-based Shaft Power Prediction for Vessels

Dec 23, 2025

Abstract: Optimizing maritime operations, particularly vessel fuel consumption, is crucial given the significant share of maritime transport in global trade. Because fuel consumption is closely related to a vessel's shaft power, accurate shaft power prediction is an important problem for minimizing costs and emissions. Traditional approaches, which rely on empirical formulas, often struggle to model dynamic conditions such as sea state or hull fouling. In this paper, we present a hybrid, physics-guided neural network-based approach that uses empirical formulas within the network to combine the advantages of neural networks and traditional techniques. We evaluate the presented method using data obtained from four similar-sized cargo vessels and compare the results with those of a baseline neural network and a traditional approach that employs empirical formulas. The experimental results demonstrate that the physics-guided neural network approach achieves lower mean absolute error, root mean square error, and mean absolute percentage error for all tested vessels than both the empirical formula-based method and the baseline neural network.
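The three error measures named in the abstract have standard definitions, which can be sketched as follows. This is an illustrative sketch only: the function names and the shaft power values (`measured_kw`, `predicted_kw`) are hypothetical and are not taken from the paper.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average magnitude of the prediction errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error: penalizes large errors more strongly than MAE."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent. Assumes no true value is zero."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


# Hypothetical shaft power readings in kW (made up for illustration).
measured_kw = [1000.0, 2000.0, 4000.0]
predicted_kw = [1100.0, 1900.0, 4200.0]

print(f"MAE:  {mae(measured_kw, predicted_kw):.2f} kW")
print(f"RMSE: {rmse(measured_kw, predicted_kw):.2f} kW")
print(f"MAPE: {mape(measured_kw, predicted_kw):.2f} %")
```

MAE and RMSE are in the units of the target (here kW), while MAPE is scale-free, which makes it easier to compare across vessels with different power ranges.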

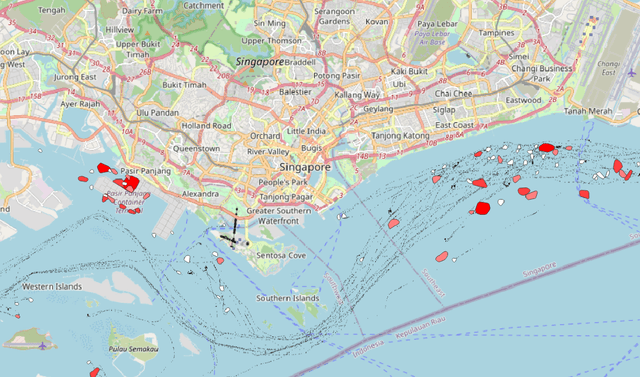

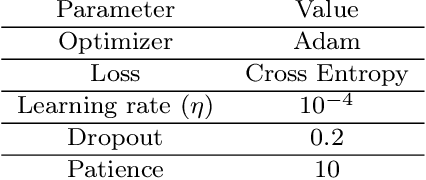

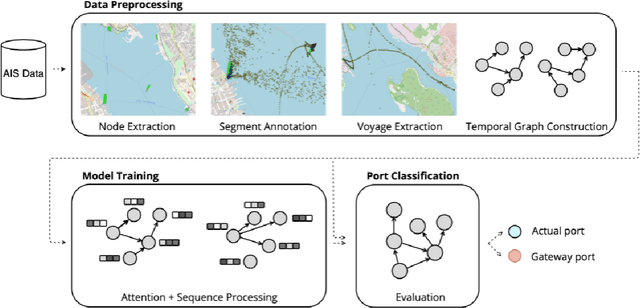

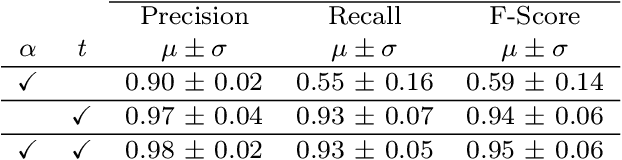

Discovering Gateway Ports in Maritime Using Temporal Graph Neural Network Port Classification

Apr 25, 2022

Abstract: Vessel navigation is influenced by various factors, including dynamic environmental factors that change over time and static features such as vessel type or ocean depth. These dynamic and static navigational factors impose limitations on vessels, such as long waiting times in regions outside the actual ports; we call these waiting regions gateway ports. Identifying gateway ports and their associated features, such as congestion and available utilities, can enhance vessel navigation by supporting planning for fuel optimization or time savings in cargo operations. In this paper, we propose a novel temporal graph neural network (TGNN) based port classification method that enables vessels to discover gateway ports efficiently, thus optimizing their operations. The proposed method processes vessel trajectory data to build dynamic graphs capturing spatio-temporal dependencies between a set of static and dynamic navigational features in the data. It is evaluated in terms of port classification accuracy on a real-world data set collected from ten vessels operating in Halifax, NS, Canada. The experimental results indicate that our TGNN-based port classification method provides an f-score of 95% in classifying ports.
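For reference, an f-score combines precision and recall as their harmonic mean. The sketch below computes it for a single positive class. The abstract does not state which averaging scheme was used, and the labels (`"gateway"`, `"port"`) and predictions here are hypothetical.

```python
def f_score(true_labels, predicted_labels, positive):
    """F1 score for one class: harmonic mean of precision and recall."""
    pairs = list(zip(true_labels, predicted_labels))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        # No correct positive predictions: precision and recall are zero.
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical labels for five regions (made up for illustration).
true_labels = ["gateway", "gateway", "port", "port", "gateway"]
predicted_labels = ["gateway", "port", "port", "gateway", "gateway"]

score = f_score(true_labels, predicted_labels, positive="gateway")
print(f"F-score for 'gateway': {score:.3f}")
```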

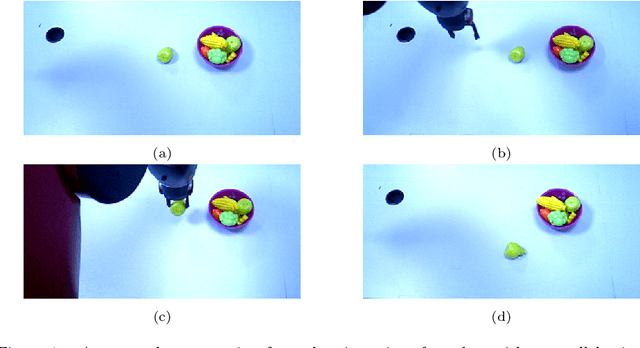

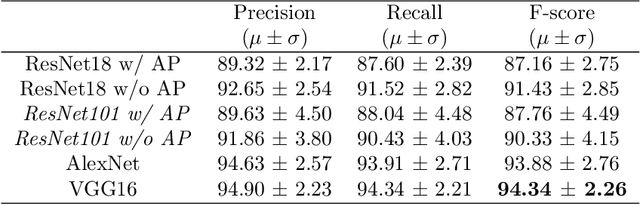

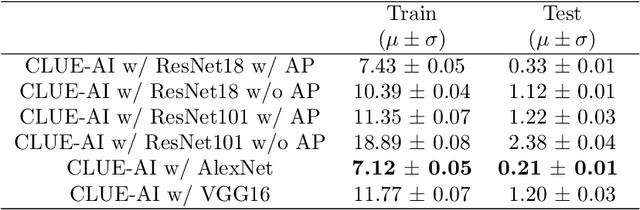

CLUE-AI: A Convolutional Three-stream Anomaly Identification Framework for Robot Manipulation

Mar 16, 2022

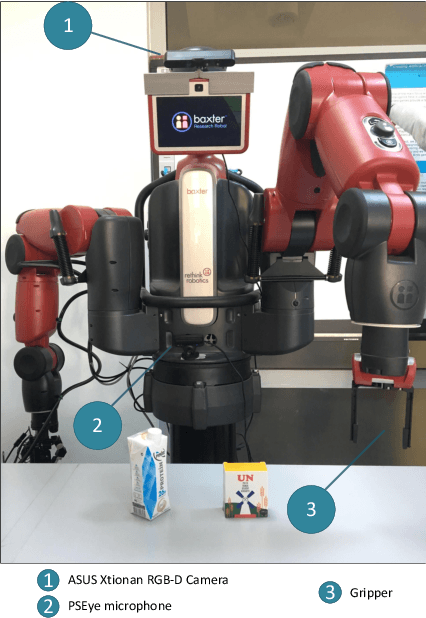

Abstract: Robot safety has been a prominent research topic in recent years as robots become more involved in daily tasks. It is crucial to devise the required safety mechanisms to enable service robots to be aware of and react to anomalies (i.e., unexpected deviations from intended outcomes) that arise during the execution of these tasks. Detection and identification of these anomalies is an essential step towards fulfilling these requirements. Although several architectures have been proposed for anomaly detection, identification has not yet been thoroughly investigated. This task is challenging since indicators may appear long before anomalies are detected. In this paper, we propose a ConvoLUtional threE-stream Anomaly Identification (CLUE-AI) framework to address this problem. The framework fuses visual, auditory and proprioceptive data streams to identify everyday object manipulation anomalies. A stream of 2D images gathered through an RGB-D camera placed on the head of the robot is processed within a self-attention enabled visual stage to capture visual anomaly indicators. The auditory modality provided by the microphone placed on the robot's lower torso is processed within a purpose-designed convolutional neural network (CNN) in the auditory stage. Last, the force applied by the gripper and the gripper state are processed within a CNN to obtain proprioceptive features. These outputs are then combined with a late fusion scheme. Our novel three-stream framework design is analyzed on everyday object manipulation tasks with a Baxter humanoid robot in a semi-structured setting. The results indicate that the framework achieves an f-score of 94%, outperforming the other baselines in classifying anomalies that arise during runtime.
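The general idea of late fusion can be illustrated with a minimal sketch. The abstract does not specify the fusion scheme used in CLUE-AI, so the weighted averaging of per-stream class probabilities below is an assumption chosen for illustration, and the function name `late_fusion` and all probability values are hypothetical.

```python
def late_fusion(stream_probs, weights=None):
    """Combine per-stream class probabilities by weighted averaging.

    Assumption: each stream has already produced a probability vector over
    the same set of classes. This is one common late fusion variant, not
    necessarily the scheme used in the paper.
    """
    n_streams = len(stream_probs)
    n_classes = len(stream_probs[0])
    if weights is None:
        weights = [1.0 / n_streams] * n_streams
    fused = [
        sum(w * probs[c] for w, probs in zip(weights, stream_probs))
        for c in range(n_classes)
    ]
    total = sum(fused)
    return [value / total for value in fused]  # renormalize to sum to 1


# Hypothetical outputs of the three streams over three anomaly classes.
visual = [0.7, 0.2, 0.1]
auditory = [0.5, 0.4, 0.1]
proprioceptive = [0.6, 0.1, 0.3]

fused = late_fusion([visual, auditory, proprioceptive])
predicted_class = max(range(len(fused)), key=fused.__getitem__)
print(fused, predicted_class)
```

Because each stream is processed independently up to the fusion step, a stream can in principle be retrained or replaced without changing the others, which is a typical motivation for fusing late rather than at the input level.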

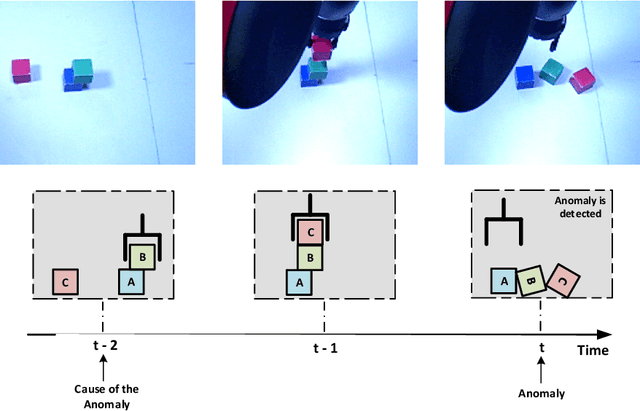

What went wrong?: Identification of Everyday Object Manipulation Anomalies

Jan 24, 2020

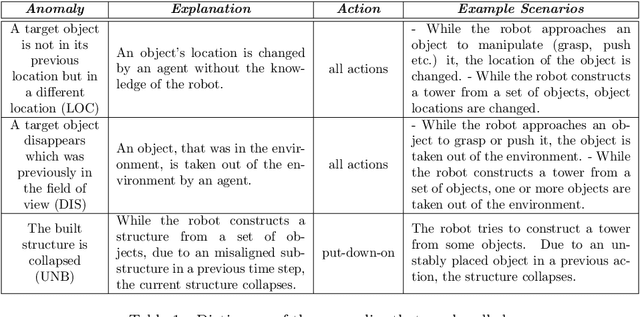

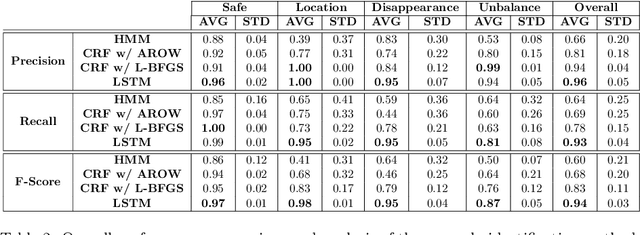

Abstract: Extending the abilities of service robots is important for expanding what they can achieve in everyday manipulation tasks. It is equally essential to ensure that they can determine what they cannot achieve in certain cases, due to either anomalies or permanent failures during task execution. Robots need to identify these situations and reveal the reasons behind them in order to overcome and recover from them. In this paper, we propose and analyze a Long Short-Term Memory-based (LSTM-based) awareness approach to reveal the reasons behind an anomaly case that occurs during a manipulation episode in an unstructured environment. The proposed method takes into account the real-time observations of the robot by fusing visual, auditory and proprioceptive sensory modalities to achieve this task. We also provide a comparative analysis of our method with Hidden Markov Models (HMMs) and Conditional Random Fields (CRFs). The symptoms of anomalies are first learned from a given training set; they can then be classified in real time based on the learned models. The approaches are evaluated on a Baxter robot executing object manipulation scenarios. The results indicate that the LSTM-based method outperforms the other methods, with a 0.94 classification rate in revealing the causes of anomalies in case of an unexpected deviation.
