Do-kyum Kim

Applying Embedding-Based Retrieval to Airbnb Search

Jan 11, 2026

Abstract: The goal of Airbnb search is to match guests with the ideal accommodation that fits their travel needs. This is a challenging problem, as popular search locations can have around a hundred thousand available homes, and guests themselves have a wide variety of preferences. Furthermore, the launch of new product features, such as flexible date search, significantly increased the number of eligible homes per search query. As such, there is a need for a sophisticated retrieval system that can provide high-quality candidates with low latency in a way that integrates with the overall ranking stack. This paper details our journey to build an efficient and high-quality retrieval system for Airbnb search. We describe the key unique challenges we encountered when implementing an Embedding-Based Retrieval (EBR) system for a two-sided marketplace like Airbnb, such as the dynamic nature of the inventory, a lengthy user funnel with multiple stages, and a variety of product surfaces. We cover unique insights from modeling the retrieval problem, how to build robust evaluation systems, and design choices for online serving. The EBR system was launched to production and powers several use cases such as regular search, flexible date search, and promotional emails for marketing campaigns. The system demonstrated statistically significant improvements in key metrics, such as booking conversion, via A/B testing.

Predicting Potential Customer Support Needs and Optimizing Search Ranking in a Two-Sided Marketplace

Mar 21, 2025

Abstract: Airbnb is an online marketplace that connects hosts and guests to unique stays and experiences. When guests stay at homes booked on Airbnb, a small fraction of stays require support from Airbnb's Customer Support (CS), which may inconvenience guests and hosts and require Airbnb resources to resolve. In this work, we show that instances where CS support is needed can be predicted from host and guest behavior. We build a model to predict the likelihood of CS support needs for each guest-host match. The model score is incorporated into Airbnb's search ranking algorithm as one of many factors. The change promotes more reliable matches in search results and significantly reduces bookings that require CS support.

Topic Modeling of Hierarchical Corpora

Apr 13, 2015

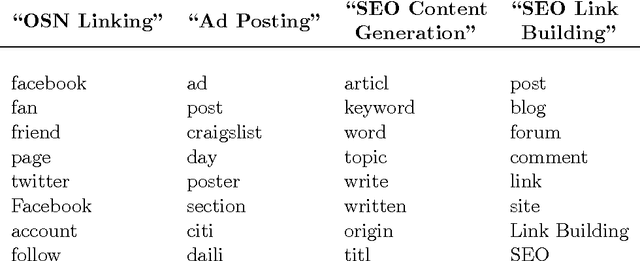

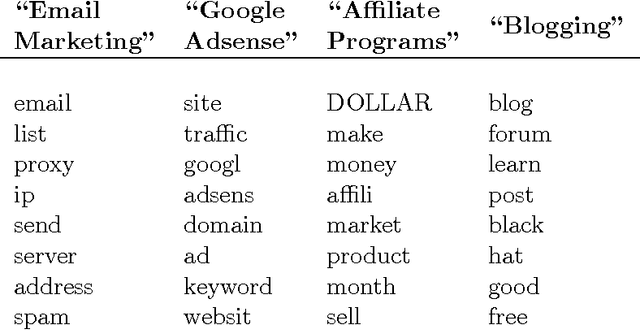

Abstract: We study the problem of topic modeling in corpora whose documents are organized in a multi-level hierarchy. We explore a parametric approach to this problem, assuming that the number of topics is known or can be estimated by cross-validation. The models we consider can be viewed as special (finite-dimensional) instances of hierarchical Dirichlet processes (HDPs). For these models we show that there exists a simple variational approximation for probabilistic inference. The approximation relies on a previously unexploited inequality that handles the conditional dependence between Dirichlet latent variables in adjacent levels of the model's hierarchy. We compare our approach to existing implementations of nonparametric HDPs. On several benchmarks we find that our approach is faster than Gibbs sampling and able to learn more predictive models than existing variational methods. Finally, we demonstrate the large-scale viability of our approach on two newly available corpora from researchers in computer security: one with 350,000 documents and over 6,000 internal subcategories, the other with a five-level deep hierarchy.
