Dingyi Zhang

Persuasion Should be Double-Blind: A Multi-Domain Dialogue Dataset With Faithfulness Based on Causal Theory of Mind

Feb 28, 2025

Abstract: Persuasive dialogue plays a pivotal role in human communication, influencing various domains. Recent persuasive dialogue datasets often fail to align with real-world interpersonal interactions, leading to unfaithful representations. For instance, unrealistic scenarios may arise, such as when the persuadee explicitly instructs the persuader on which persuasion strategies to employ, with each of the persuadee's questions corresponding to a specific strategy for the persuader to follow. This issue can be attributed to a violation of the "Double Blind" condition, where critical information is fully shared between participants. In actual human interactions, however, key information such as the mental state of the persuadee and the persuasion strategies of the persuader is not directly accessible. The persuader must infer the persuadee's mental state using Theory of Mind capabilities and construct arguments that align with the persuadee's motivations. To address this gap, we introduce ToMMA, a novel multi-agent framework for dialogue generation that is guided by causal Theory of Mind. This framework ensures that information remains undisclosed between agents, preserving "double-blind" conditions, while causal ToM directs the persuader's reasoning, enhancing alignment with human-like persuasion dynamics. Consequently, we present CToMPersu, a multi-domain, multi-turn persuasive dialogue dataset that tackles both double-blind and logical coherence issues, demonstrating superior performance across multiple metrics and achieving better alignment with real human dialogues. Our dataset and prompts are available at https://github.com/DingyiZhang/ToMMA-CToMPersu .

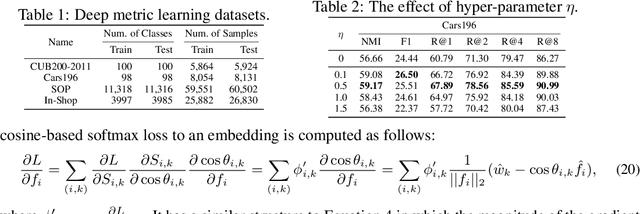

Deep Metric Learning with Spherical Embedding

Nov 05, 2020

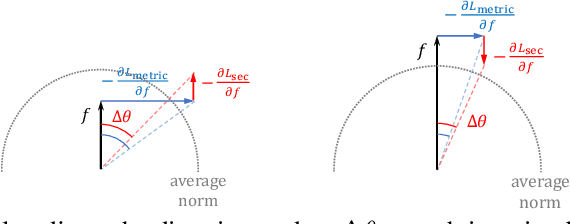

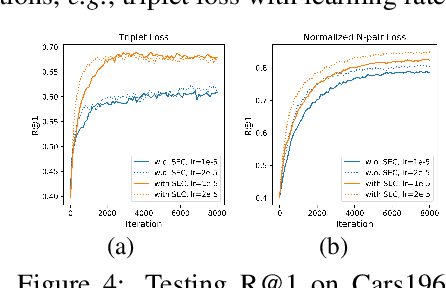

Abstract: Deep metric learning has attracted much attention in recent years because it seamlessly combines distance metric learning with deep neural networks. Many endeavors are devoted to designing different pair-based angular loss functions, which decouple the magnitude and direction information of embedding vectors and ensure consistency between the training and testing measures. However, these traditional angular losses cannot guarantee that all the sample embeddings lie on the surface of the same hypersphere during the training stage, which results in unstable gradients in batch optimization and may hinder fast convergence of the embedding learning. In this paper, we first investigate the effect of the embedding norm for deep metric learning with angular distance, and then propose a spherical embedding constraint (SEC) to regularize the distribution of the norms. SEC adaptively adjusts the embeddings to fall on the same hypersphere and performs more balanced direction updates. Extensive experiments on deep metric learning, face recognition, and contrastive self-supervised learning show that the SEC-based angular space learning strategy significantly improves the performance of the state-of-the-art.
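As a rough illustration of what "regularizing the distribution of the norms" could look like, the sketch below penalizes the spread of embedding norms within a batch. The abstract does not give the exact formula, so the penalty form (mean squared deviation from the batch-average norm) and the function names `embedding_norms` and `norm_spread_penalty` are assumptions made for illustration, not the authors' implementation.

```python
import math


def embedding_norms(embeddings):
    """Return the L2 norm of each embedding vector in the batch."""
    return [math.sqrt(sum(x * x for x in vec)) for vec in embeddings]


def norm_spread_penalty(embeddings):
    """Mean squared deviation of the norms from their batch average.

    Assumed form of a norm regularizer (hypothetical, for illustration):
    it is zero exactly when every embedding in the batch has the same
    norm, i.e. when all embeddings lie on one hypersphere.
    """
    norms = embedding_norms(embeddings)
    mean_norm = sum(norms) / len(norms)
    return sum((n - mean_norm) ** 2 for n in norms) / len(norms)


# Two embeddings with equal norm (both 5): no penalty.
same_sphere = [[3.0, 4.0], [0.0, 5.0]]
# Same directions as a scaled pair, but norms 5 and 10: penalized.
mixed_norms = [[3.0, 4.0], [6.0, 8.0]]

print(norm_spread_penalty(same_sphere))
print(norm_spread_penalty(mixed_norms))
```

In a training loop, a term like this would presumably be added to the angular loss with a small weight, so that the angular loss shapes the directions while the penalty pulls the norms toward a common value.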
