Diego Rybski

Probing the statistical properties of unknown texts: application to the Voynich Manuscript

Mar 02, 2013

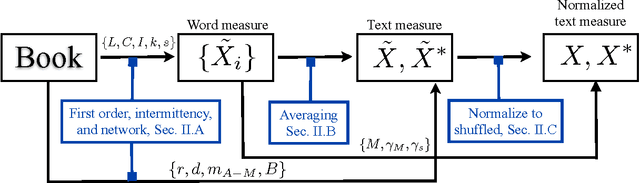

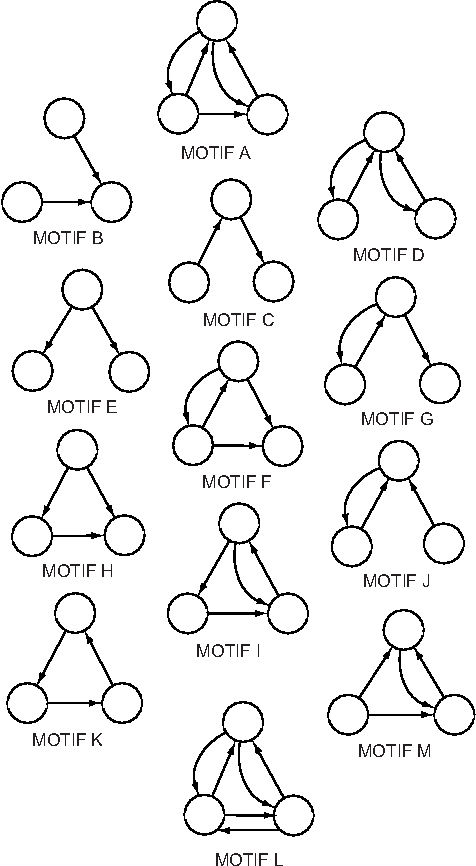

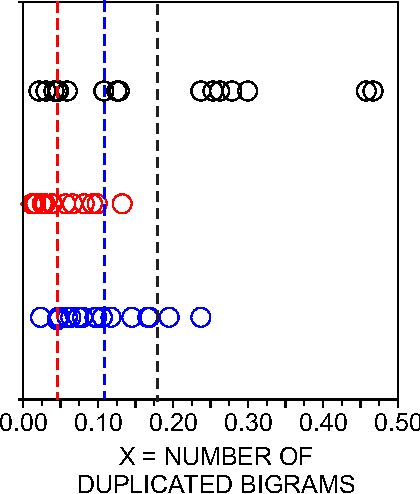

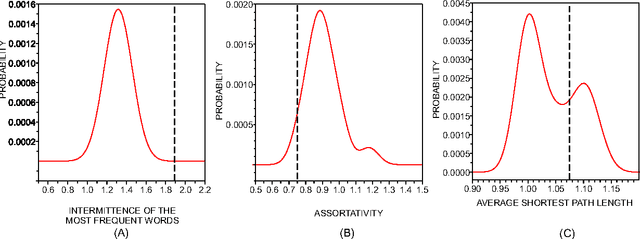

Abstract:While the use of statistical physics methods to analyze large corpora has been useful to unveil many patterns in texts, no comprehensive investigation has been performed investigating the properties of statistical measurements across different languages and texts. In this study we propose a framework that aims at determining if a text is compatible with a natural language and which languages are closest to it, without any knowledge of the meaning of the words. The approach is based on three types of statistical measurements, i.e. obtained from first-order statistics of word properties in a text, from the topology of complex networks representing text, and from intermittency concepts where text is treated as a time series. Comparative experiments were performed with the New Testament in 15 different languages and with distinct books in English and Portuguese in order to quantify the dependency of the different measurements on the language and on the story being told in the book. The metrics found to be informative in distinguishing real texts from their shuffled versions include assortativity, degree and selectivity of words. As an illustration, we analyze an undeciphered medieval manuscript known as the Voynich Manuscript. We show that it is mostly compatible with natural languages and incompatible with random texts. We also obtain candidates for key-words of the Voynich Manuscript which could be helpful in the effort of deciphering it. Because we were able to identify statistical measurements that are more dependent on the syntax than on the semantics, the framework may also serve for text analysis in language-dependent applications.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge