Diana Kim

Factor(U,T): Controlling Untrusted AI by Monitoring their Plans

Dec 12, 2025

Abstract: As AI capabilities advance, we increasingly rely on powerful models to decompose complex tasks, but what if the decomposer itself is malicious? Factored cognition protocols decompose complex tasks into simpler child tasks: one model creates the decomposition, while other models implement the child tasks in isolation. Prior work uses trusted (weaker but reliable) models for decomposition, which limits usefulness for tasks where decomposition itself is challenging. We introduce Factor($U$,$T$), in which an untrusted (stronger but potentially malicious) model decomposes while trusted models implement child tasks. Can monitors detect malicious activity when observing only natural language task instructions, rather than complete solutions? We baseline and red team Factor($U$,$T$) in control evaluations on BigCodeBench, a dataset of Python coding tasks. Monitors distinguishing malicious from honest decompositions perform poorly (AUROC 0.52) compared to monitors evaluating complete Python solutions (AUROC 0.96). Furthermore, Factor($D$,$U$), which uses a trusted decomposer and monitors concrete child solutions, achieves excellent discrimination (AUROC 0.96) and strong safety (1.2% ASR), demonstrating that implementation-context monitoring succeeds where decomposition-only monitoring fails.
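The AUROC figures quoted in this abstract measure how well a monitor's suspicion scores separate malicious from honest samples. As a rough illustration only, the sketch below computes AUROC from first principles: the probability that a randomly chosen attack sample receives a higher score than a randomly chosen honest sample, with ties counted as half. The function name `auroc` and all score lists are hypothetical toy values, not data or code from the paper.

```python
def auroc(honest_scores, attack_scores):
    """Fraction of (attack, honest) pairs where the attack scores higher.

    Ties count as half a win. 0.5 means the monitor is no better than
    chance; 1.0 means perfect separation.
    """
    wins = 0.0
    for a in attack_scores:
        for h in honest_scores:
            if a > h:
                wins += 1.0
            elif a == h:
                wins += 0.5
    return wins / (len(attack_scores) * len(honest_scores))


# Hypothetical suspicion scores (0-9 scale) for illustration only.
# A monitor whose scores for attacks and honest samples overlap heavily:
overlapping = auroc(honest_scores=[1, 4, 6, 9], attack_scores=[2, 3, 7, 8])

# A monitor whose attack scores sit mostly above the honest ones:
separated = auroc(honest_scores=[1, 2, 3, 4], attack_scores=[3, 7, 8, 9])

print(f"overlapping monitor AUROC: {overlapping:.3f}")
print(f"separated monitor AUROC:   {separated:.3f}")
```

An AUROC near 0.5, such as the 0.52 reported for decomposition-only monitoring, corresponds to the first case: the monitor's ranking of samples is close to random.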

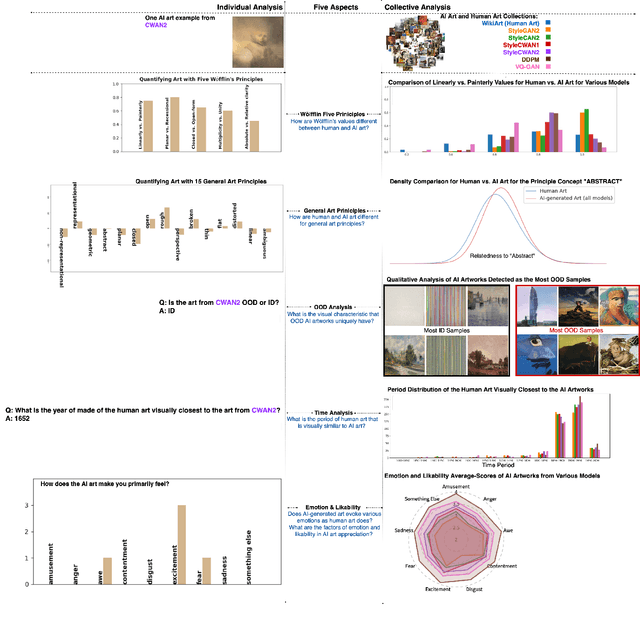

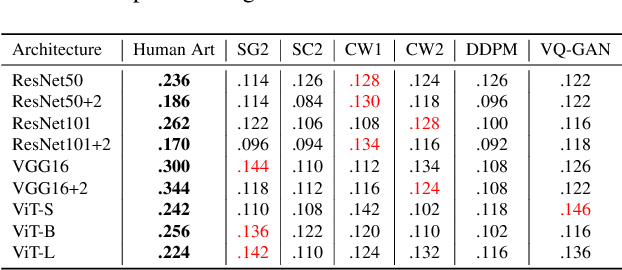

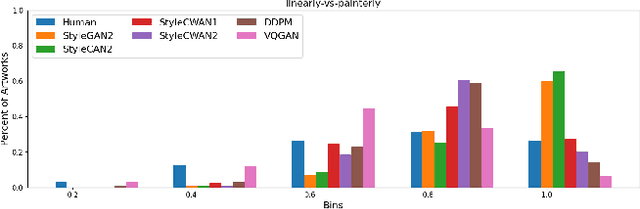

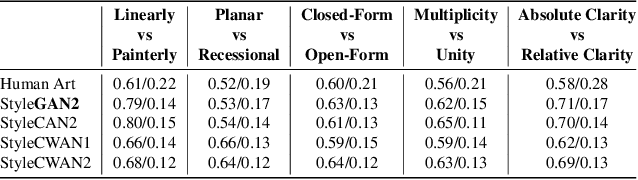

AI Art Neural Constellation: Revealing the Collective and Contrastive State of AI-Generated and Human Art

Feb 04, 2024

Abstract: Discovering the creative potential of a random signal for varied artistic expression, rich in both aesthetics and concept, underlies the recent success of generative machine learning as a way of creating art. To understand the new artistic medium better, we conduct a comprehensive analysis to position AI-generated art within the context of human art heritage. Our comparative analysis is based on an extensive dataset, dubbed "ArtConstellation," consisting of annotations about art principles, likability, and emotions for 6,000 WikiArt and 3,200 AI-generated artworks. After training various state-of-the-art generative models, art samples are produced and compared with WikiArt data on the last hidden layer of a deep-CNN trained for style classification. We actively examined the various art principles to interpret the neural representations and used them to drive the comparative knowledge about human and AI-generated art. A key finding in the semantic analysis is that AI-generated artworks are visually related to the principle concepts for modern period art made in 1800-2000. In addition, through Out-Of-Distribution (OOD) and In-Distribution (ID) detection in CLIP space, we find that AI-generated artworks are ID to human art when they depict landscapes and geometric abstract figures, while they are detected as OOD when the machine art consists of deformed and twisted figures. We observe that machine-generated art is uniquely characterized by incomplete and reduced figuration. Lastly, we conducted a human survey about emotional experience. Color composition and familiar subjects are the key factors of likability and emotions in art appreciation. We propose our full methodology and collected dataset as an analytical framework to contrast human and AI-generated art, which we refer to as "ArtNeuralConstellation". Code is available at: https://github.com/faixan-khan/ArtNeuralConstellation

Formal Analysis of Art: Proxy Learning of Visual Concepts from Style Through Language Models

Jan 05, 2022

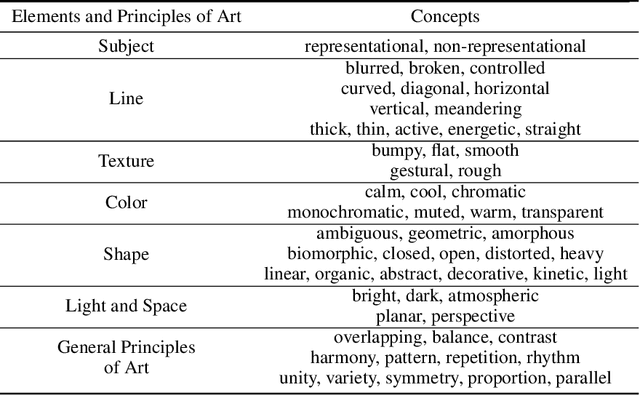

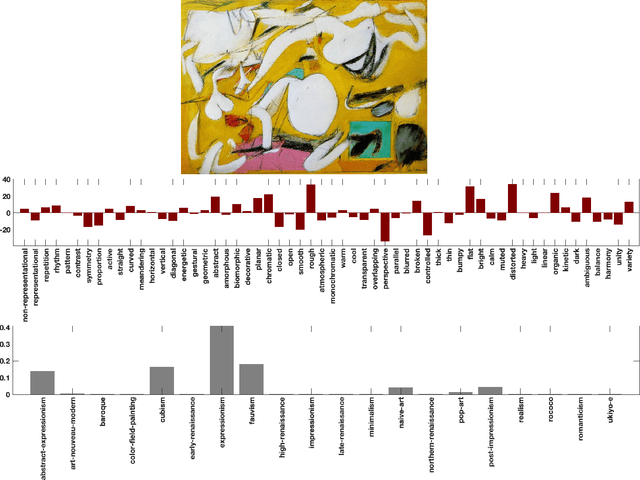

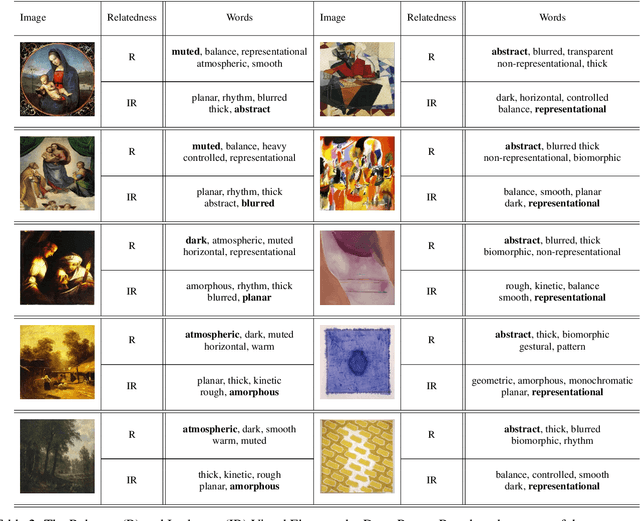

Abstract: We present a machine learning system that can quantify fine art paintings with a set of visual elements and principles of art. This formal analysis is fundamental for understanding art, but developing such a system is challenging. Paintings have high visual complexity, and it is also difficult to collect enough training data with direct labels. To resolve these practical limitations, we introduce a novel mechanism, called proxy learning, which learns visual concepts in paintings through their general relation to styles. This framework does not require any visual annotation, but only uses style labels and a general relationship between visual concepts and style. In this paper, we propose a novel proxy model and reformulate four pre-existing methods in the context of proxy learning. Through quantitative and qualitative comparison, we evaluate these methods and compare their effectiveness in quantifying the artistic visual concepts, where the general relationship is estimated by language models, GloVe or BERT. The language modeling is a practical and scalable solution requiring no labeling, but it is inevitably imperfect. We demonstrate how the new proxy model is robust to the imperfection, while the other models are sensitively affected by it.

The Shape of Art History in the Eyes of the Machine

Feb 12, 2018

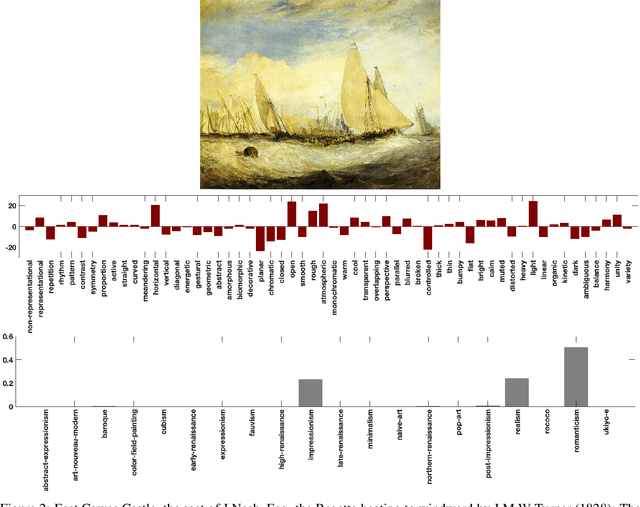

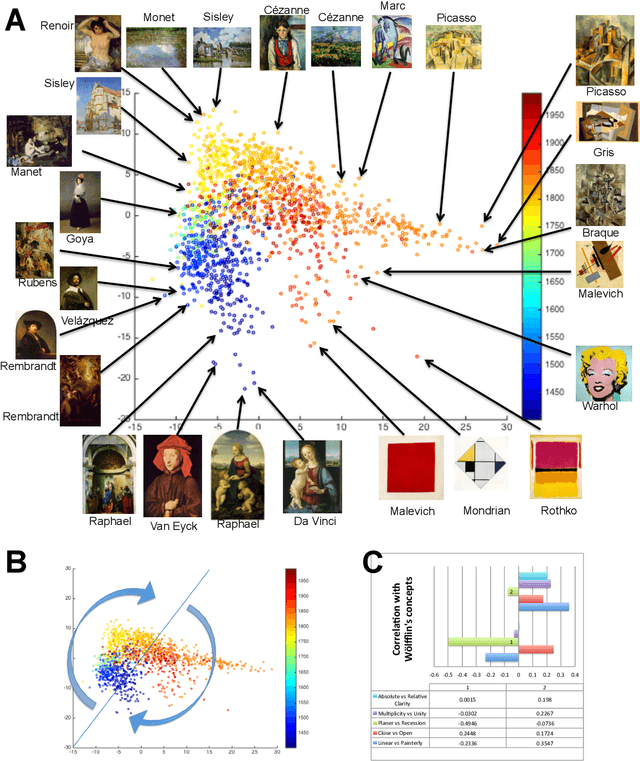

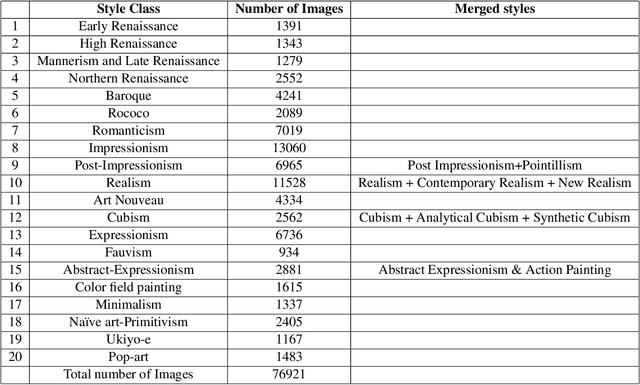

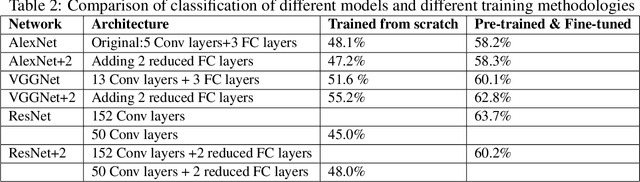

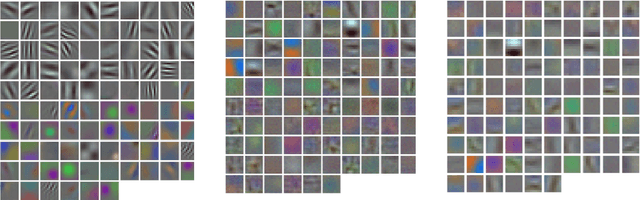

Abstract: How does the machine classify styles in art? And how does it relate to art historians' methods for analyzing style? Several studies have shown the ability of the machine to learn and predict style categories, such as Renaissance, Baroque, Impressionism, etc., from images of paintings. This implies that the machine can learn an internal representation encoding discriminative features through its visual analysis. However, such a representation is not necessarily interpretable. We conducted a comprehensive study of several state-of-the-art convolutional neural networks applied to the task of style classification on 77K images of paintings, and analyzed the learned representation through correlation analysis with concepts derived from art history. Surprisingly, the networks could place the works of art in a smooth temporal arrangement mainly based on learning style labels, without any a priori knowledge of time of creation, the historical time and context of styles, or relations between styles. The learned representations showed that there are few underlying factors that explain the visual variations of style in art. Some of these factors were found to correlate with style patterns suggested by Heinrich Wölfflin (1864-1945). The learned representations also consistently highlighted certain artists as the most distinctive representatives of their styles, which quantitatively confirms art historians' observations.
