Dhruv Kapur

Diverse Community Data for Benchmarking Data Privacy Algorithms

Jun 20, 2023

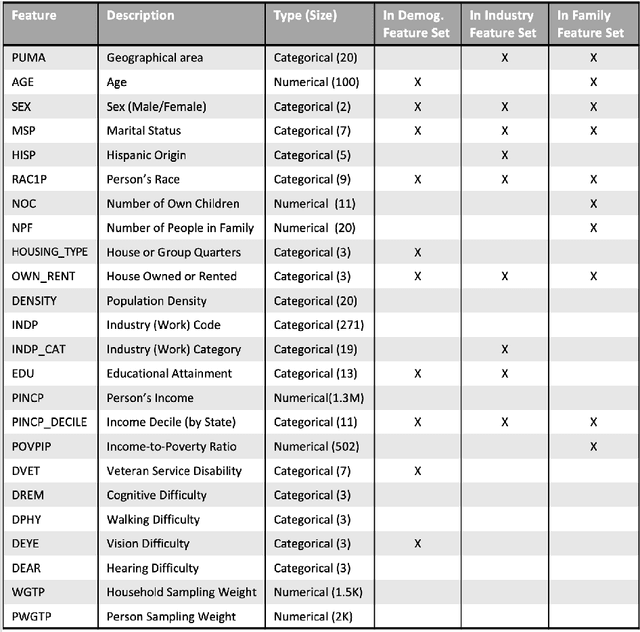

Abstract: The Diverse Communities Data Excerpts are the core of a National Institute of Standards and Technology (NIST) program to strengthen understanding of tabular data deidentification technologies such as synthetic data. Synthetic data is an ambitious attempt to democratize the benefits of big data; it uses generative models to recreate sensitive personal data with new records for public release. However, it is vulnerable to the same bias and privacy issues that impact other machine learning applications, and can even amplify those issues. When deidentified data distributions introduce bias or artifacts, or leak sensitive information, they propagate these problems to downstream applications. Furthermore, real-world survey conditions such as diverse subpopulations, heterogeneous non-ordinal data spaces, and complex dependencies between features pose specific challenges for synthetic data algorithms. These observations motivate the need for real, diverse, and complex benchmark data to support a robust understanding of algorithm behavior. This paper introduces four contributions: new theoretical work on the relationship between diverse populations and challenges for equitable deidentification; public benchmark data focused on diverse populations and challenging features curated from the American Community Survey; an open source suite of evaluation metrology for deidentified datasets; and an archive of evaluation results on a broad collection of deidentification techniques. The initial set of evaluation results demonstrates the suitability of these tools for investigations in this field.

Sim-to-Real Deep Reinforcement Learning based Obstacle Avoidance for UAVs under Measurement Uncertainty

Mar 13, 2023

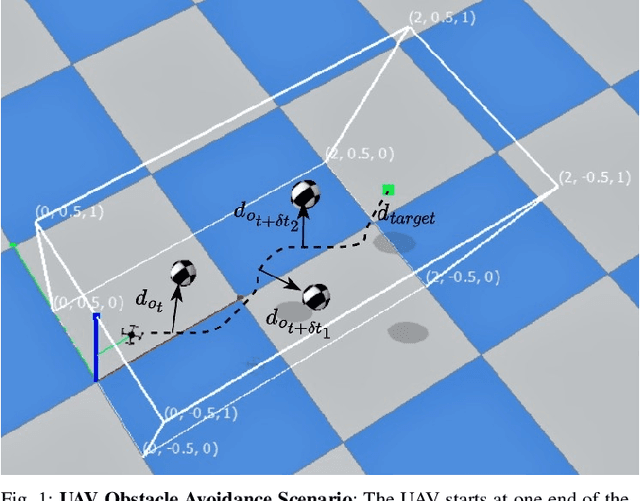

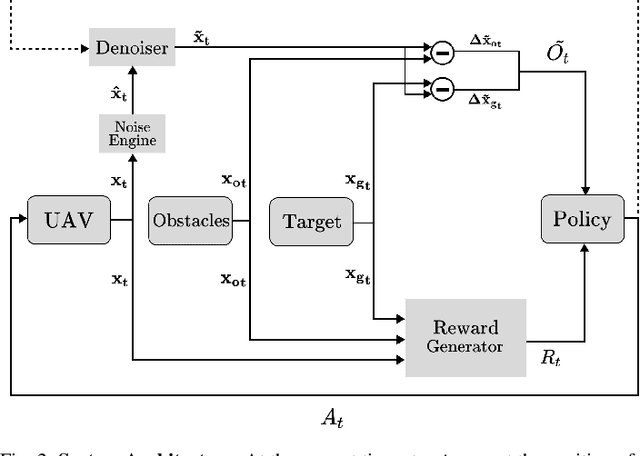

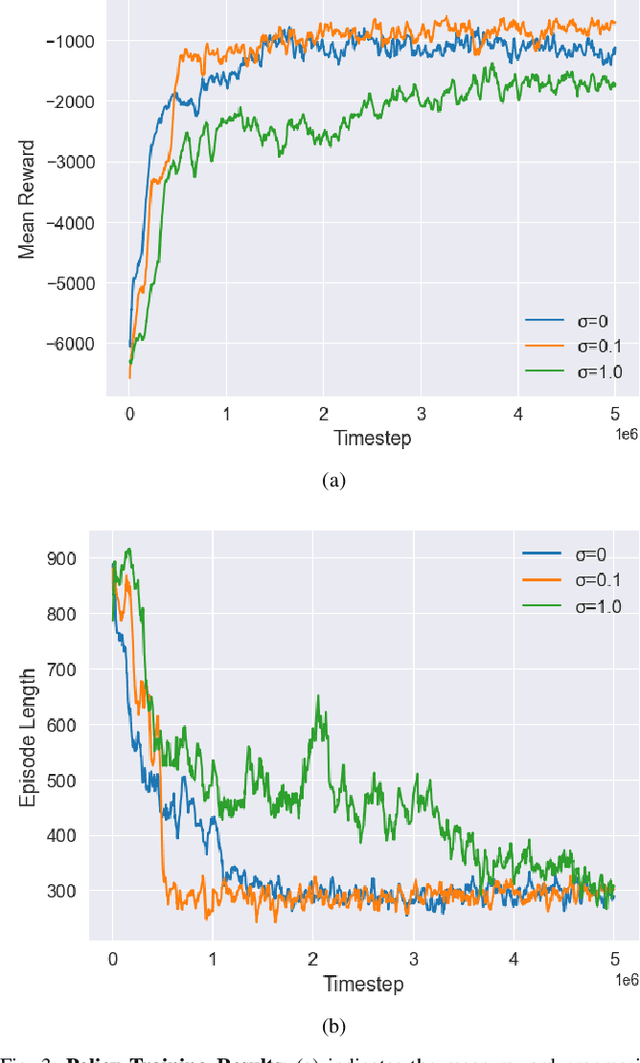

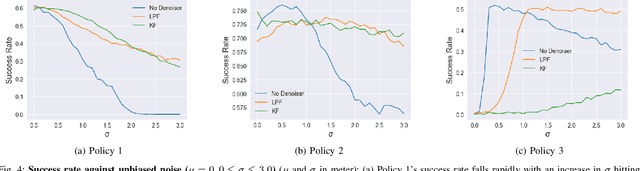

Abstract: Deep Reinforcement Learning (DRL) is quickly becoming a popular method for training autonomous Unmanned Aerial Vehicles (UAVs). Our work analyzes the effects of measurement uncertainty on the performance of DRL-based waypoint navigation and obstacle avoidance for UAVs. Measurement uncertainty originates from noise in the sensors used for localization and obstacle detection. The measurement noise is assumed to follow a Gaussian probability distribution with unknown non-zero mean and variance. We evaluate the performance of a DRL agent trained using the Proximal Policy Optimization (PPO) algorithm in an environment with continuous state and action spaces. The environment is randomized with a different number of obstacles for each simulation episode and with varying degrees of noise, to capture the effects of realistic sensor measurements. Denoising techniques such as the low-pass filter and the Kalman filter improve performance in the presence of unbiased noise. Moreover, we show that artificially injecting noise into the measurements during evaluation actually improves performance in certain scenarios. Extensive training and testing of the DRL agent under various UAV navigation scenarios are performed in the PyBullet physics simulator. To evaluate the practical validity of our method, we port the policy trained in simulation onto a real UAV without any further modifications and verify the results in a real-world environment.
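
The noise model and the two denoising techniques named in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the constant true distance, the smoothing factor `alpha`, and the variances are all assumptions chosen for illustration, and the Kalman filter shown is the simplest scalar form for a slowly varying quantity.

```python
import random


def noisy_measurements(true_value, bias, sigma, n, seed=0):
    """Simulate n sensor readings with Gaussian noise of mean `bias` and std `sigma`."""
    rng = random.Random(seed)
    return [true_value + rng.gauss(bias, sigma) for _ in range(n)]


def low_pass(values, alpha=0.2):
    """First-order (exponential) low-pass filter; alpha is an assumed smoothing factor."""
    est = values[0]
    out = []
    for v in values:
        est = alpha * v + (1 - alpha) * est
        out.append(est)
    return out


def kalman_scalar(values, meas_var, process_var=1e-4):
    """Scalar Kalman filter for a nearly constant quantity (assumed random-walk model)."""
    est, var = values[0], meas_var
    out = []
    for v in values:
        var += process_var              # predict: uncertainty grows slightly
        gain = var / (var + meas_var)   # Kalman gain
        est += gain * (v - est)         # update the estimate with the new reading
        var *= (1 - gain)               # update the estimate variance
        out.append(est)
    return out


def mean_abs_error(values, true_value):
    return sum(abs(v - true_value) for v in values) / len(values)


if __name__ == "__main__":
    true_distance = 5.0  # hypothetical distance to an obstacle, in metres
    raw = noisy_measurements(true_distance, bias=0.0, sigma=0.5, n=500)
    print("raw      MAE:", mean_abs_error(raw, true_distance))
    print("low-pass MAE:", mean_abs_error(low_pass(raw), true_distance))
    print("kalman   MAE:", mean_abs_error(kalman_scalar(raw, 0.5 ** 2), true_distance))
```

Both filters only average out zero-mean fluctuations. If `bias` is set to a non-zero value, the filtered estimate settles near `true_value + bias`, which is consistent with the abstract limiting the reported improvement to unbiased noise.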
