Devichand Budagam

Router-Suggest: Dynamic Routing for Multimodal Auto-Completion in Visually-Grounded Dialogs

Jan 09, 2026Abstract:Real-time multimodal auto-completion is essential for digital assistants, chatbots, design tools, and healthcare consultations, where user inputs rely on shared visual context. We introduce Multimodal Auto-Completion (MAC), a task that predicts upcoming characters in live chats using partially typed text and visual cues. Unlike traditional text-only auto-completion (TAC), MAC grounds predictions in multimodal context to better capture user intent. To enable this task, we adapt MMDialog and ImageChat to create benchmark datasets. We evaluate leading vision-language models (VLMs) against strong textual baselines, highlighting trade-offs in accuracy and efficiency. We present Router-Suggest, a router framework that dynamically selects between textual models and VLMs based on dialog context, along with a lightweight variant for resource-constrained environments. Router-Suggest achieves a 2.3x to 10x speedup over the best-performing VLM. A user study shows that VLMs significantly excel over textual models on user satisfaction, notably saving user typing effort and improving the quality of completions in multi-turn conversations. These findings underscore the need for multimodal context in auto-completions, leading to smarter, user-aware assistants.

Hierarchical Prompting Taxonomy: A Universal Evaluation Framework for Large Language Models

Jun 18, 2024

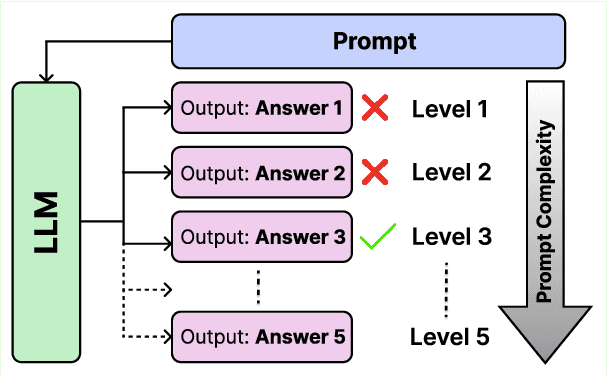

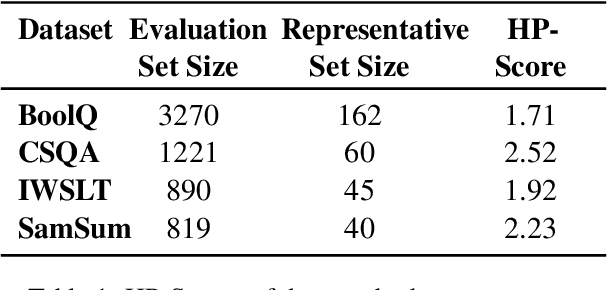

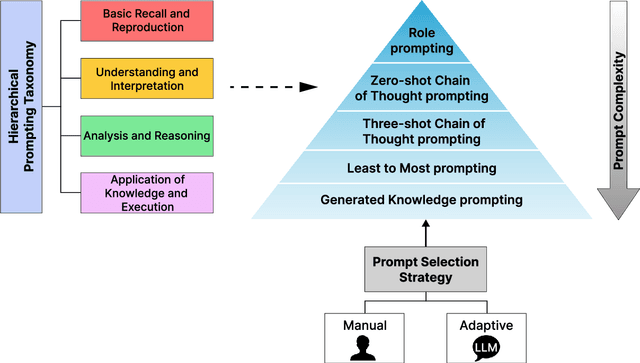

Abstract:Assessing the effectiveness of large language models (LLMs) in addressing diverse tasks is essential for comprehending their strengths and weaknesses. Conventional evaluation techniques typically apply a single prompting strategy uniformly across datasets, not considering the varying degrees of task complexity. We introduce the Hierarchical Prompting Taxonomy (HPT), a taxonomy that employs a Hierarchical Prompt Framework (HPF) composed of five unique prompting strategies, arranged from the simplest to the most complex, to assess LLMs more precisely and to offer a clearer perspective. This taxonomy assigns a score, called the Hierarchical Prompting Score (HP-Score), to datasets as well as LLMs based on the rules of the taxonomy, providing a nuanced understanding of their ability to solve diverse tasks and offering a universal measure of task complexity. Additionally, we introduce the Adaptive Hierarchical Prompt framework, which automates the selection of appropriate prompting strategies for each task. This study compares manual and adaptive hierarchical prompt frameworks using four instruction-tuned LLMs, namely Llama 3 8B, Phi 3 3.8B, Mistral 7B, and Gemma 7B, across four datasets: BoolQ, CommonSenseQA (CSQA), IWSLT-2017 en-fr (IWSLT), and SamSum. Experiments demonstrate the effectiveness of HPT, providing a reliable way to compare different tasks and LLM capabilities. This paper leads to the development of a universal evaluation metric that can be used to evaluate both the complexity of the datasets and the capabilities of LLMs. The implementation of both manual HPF and adaptive HPF is publicly available.

Instance Segmentation and Teeth Classification in Panoramic X-rays

Jun 06, 2024Abstract:Teeth segmentation and recognition are critical in various dental applications and dental diagnosis. Automatic and accurate segmentation approaches have been made possible by integrating deep learning models. Although teeth segmentation has been studied in the past, only some techniques were able to effectively classify and segment teeth simultaneously. This article offers a pipeline of two deep learning models, U-Net and YOLOv8, which results in BB-UNet, a new architecture for the classification and segmentation of teeth on panoramic X-rays that is efficient and reliable. We have improved the quality and reliability of teeth segmentation by utilising the YOLOv8 and U-Net capabilities. The proposed networks have been evaluated using the mean average precision (mAP) and dice coefficient for YOLOv8 and BB-UNet, respectively. We have achieved a 3\% increase in mAP score for teeth classification compared to existing methods, and a 10-15\% increase in dice coefficient for teeth segmentation compared to U-Net across different categories of teeth. A new Dental dataset was created based on UFBA-UESC dataset with Bounding-Box and Polygon annotations of 425 dental panoramic X-rays. The findings of this research pave the way for a wider adoption of object detection models in the field of dental diagnosis.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge