Detlef Prescher

IMS, University of Stuttgart

A Tutorial on the Expectation-Maximization Algorithm Including Maximum-Likelihood Estimation and EM Training of Probabilistic Context-Free Grammars

Mar 11, 2005

Abstract: The paper gives a brief review of the expectation-maximization algorithm (Dempster et al. 1977) in the comprehensible framework of discrete mathematics. In Section 2, two prominent estimation methods, relative-frequency estimation and maximum-likelihood estimation, are presented. Section 3 is dedicated to the expectation-maximization algorithm and a simpler variant, the generalized expectation-maximization algorithm. In Section 4, two loaded dice are rolled. A more interesting example is presented in Section 5: the estimation of probabilistic context-free grammars.

Inside-Outside Estimation Meets Dynamic EM

Dec 03, 2004

Abstract: We briefly review the inside-outside and EM algorithms for probabilistic context-free grammars. As a result, we formally prove that inside-outside estimation is a dynamic-programming variant of EM. This is interesting in its own right, but even more so when considered in a theoretical context, since the well-known convergence behavior of inside-outside estimation has been confirmed by many experiments but apparently has never been formally proved. However, being a version of EM, inside-outside estimation also inherits the good convergence behavior of EM. Therefore, the as yet imperfect line of argumentation can be transformed into a coherent proof.

* 4 pages, some typos corrected

Using a Probabilistic Class-Based Lexicon for Lexical Ambiguity Resolution

Aug 30, 2000

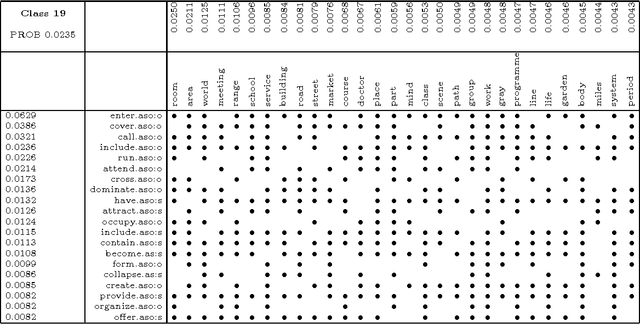

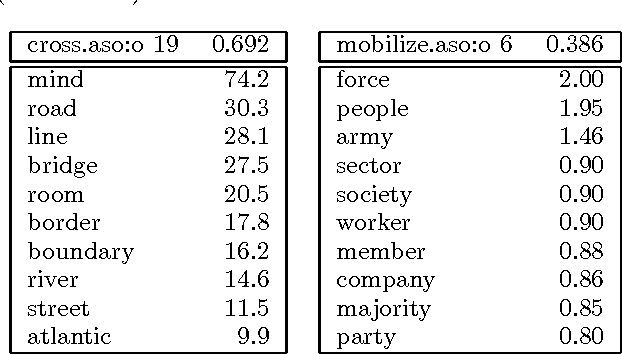

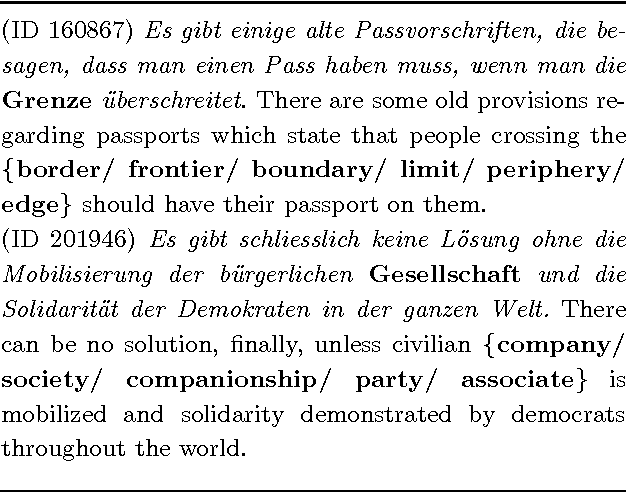

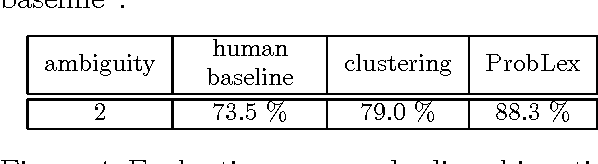

Abstract: This paper presents the use of probabilistic class-based lexica for disambiguation in target-word selection. Our method employs minimal but precise contextual information for disambiguation. That is, only information provided by the target-verb, enriched by the condensed information of a probabilistic class-based lexicon, is used. Induction of classes and fine-tuning to verbal arguments is done in an unsupervised manner by EM-based clustering techniques. The method shows promising results in an evaluation on real-world translations.

* 7 pages, uses colacl.sty

Lexicalized Stochastic Modeling of Constraint-Based Grammars using Log-Linear Measures and EM Training

Aug 30, 2000

Abstract: We present a new approach to stochastic modeling of constraint-based grammars that is based on log-linear models and uses EM for estimation from unannotated data. The techniques are applied to an LFG grammar for German. Evaluation on an exact match task yields 86% precision for an ambiguity rate of 5.4, and 90% precision on a subcat frame match for an ambiguity rate of 25. Experimental comparison to training from a parsebank shows a 10% gain from EM training. Also, a new class-based grammar lexicalization is presented, showing a 10% gain over unlexicalized models.

* 8 pages, uses acl2000.sty

Inducing a Semantically Annotated Lexicon via EM-Based Clustering

May 19, 1999

Abstract: We present a technique for automatic induction of slot annotations for subcategorization frames, based on induction of hidden classes in the EM framework of statistical estimation. The models are empirically evaluated by a general decision test. Induction of slot labeling for subcategorization frames is accomplished by a further application of EM, and applied experimentally on frame observations derived from parsing large corpora. We outline an interpretation of the learned representations as theoretical-linguistic decompositional lexical entries.

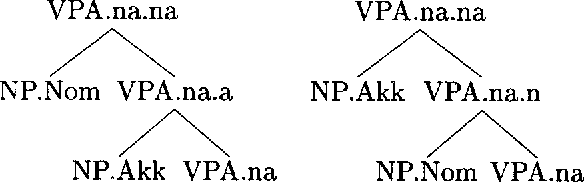

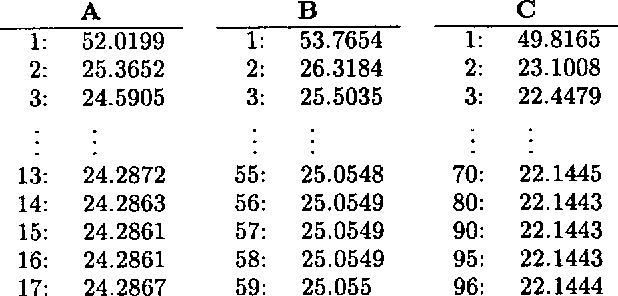

Inside-Outside Estimation of a Lexicalized PCFG for German

May 19, 1999

Abstract: The paper describes an extensive experiment in inside-outside estimation of a lexicalized probabilistic context-free grammar for German verb-final clauses. Grammar and formalism features which make the experiment feasible are described. Successive models are evaluated on precision and recall of phrase markup.
