Derun Cai

Curricular and Cyclical Loss for Time Series Learning Strategy

Dec 26, 2023

Abstract:Time series are widespread in real-world applications, and many deep learning models perform well on them. Current research has shown the importance of the learning strategy for models, suggesting that the benefit comes from the order and size of the learning samples. However, no effective strategy has been proposed for time series due to its abstract and dynamic construction. Meanwhile, the existing one-shot tasks and continuous tasks for time series necessitate distinct learning processes and mechanisms, and no all-purpose approach has been suggested. In this work, we propose, for the first time, a novel Curricular and CyclicaL loss (CRUCIAL) for learning time series. It is model- and task-agnostic and can be plugged on top of the original loss with no extra procedure. CRUCIAL has two characteristics: it can arrange an easy-to-hard learning order by dynamically determining the sample contribution and modulating the loss amplitude, and it can manage a cyclically changing dataset and achieve an adaptive cycle by correlating the loss distribution and the selection probability. We prove that, compared with a monotonic size, a cyclical size can reduce the expected error. Experiments on 3 kinds of tasks and 5 real-world datasets show the benefits of CRUCIAL for most deep learning models when learning time series.
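The idea of an easy-to-hard order imposed through the loss can be illustrated with a generic, self-paced-style weighting. This is only a sketch under stated assumptions and not the CRUCIAL loss itself, whose formula the abstract does not give: the sigmoid weighting, the `threshold` schedule, and the function names `curriculum_weights` and `weighted_loss` are all hypothetical.

```python
import math

def curriculum_weights(losses, threshold, temperature=1.0):
    """Soft weight in (0, 1) per sample (hypothetical scheme, not CRUCIAL).

    Samples whose loss is well below `threshold` (easy) get a weight near 1;
    samples whose loss is well above it (hard) get a weight near 0.
    """
    return [1.0 / (1.0 + math.exp((loss - threshold) / temperature))
            for loss in losses]

def weighted_loss(losses, weights):
    """Weighted mean of the per-sample losses."""
    return sum(w * l for w, l in zip(weights, losses)) / sum(weights)

# Per-sample losses from some base criterion (illustrative values).
losses = [0.2, 0.9, 2.5]

# Early in training the threshold is low, so easy samples dominate.
early = curriculum_weights(losses, threshold=0.5)
# Later the threshold is raised, so harder samples contribute more.
late = curriculum_weights(losses, threshold=3.0)

early_loss = weighted_loss(losses, early)
late_loss = weighted_loss(losses, late)
```

Raising `threshold` over training moves every sample's weight upward, which is one simple way to let hard samples enter gradually while leaving the base loss untouched.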

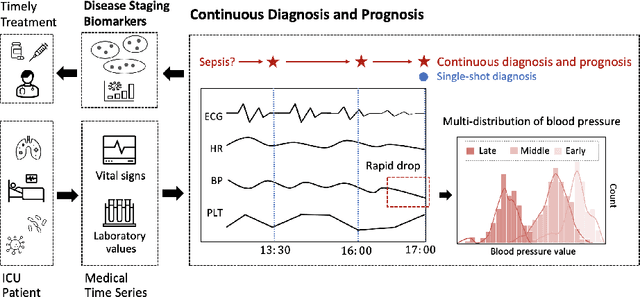

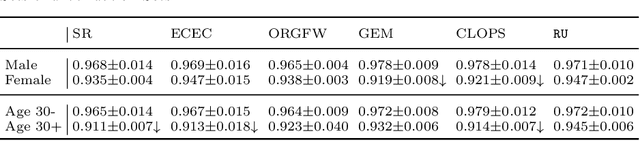

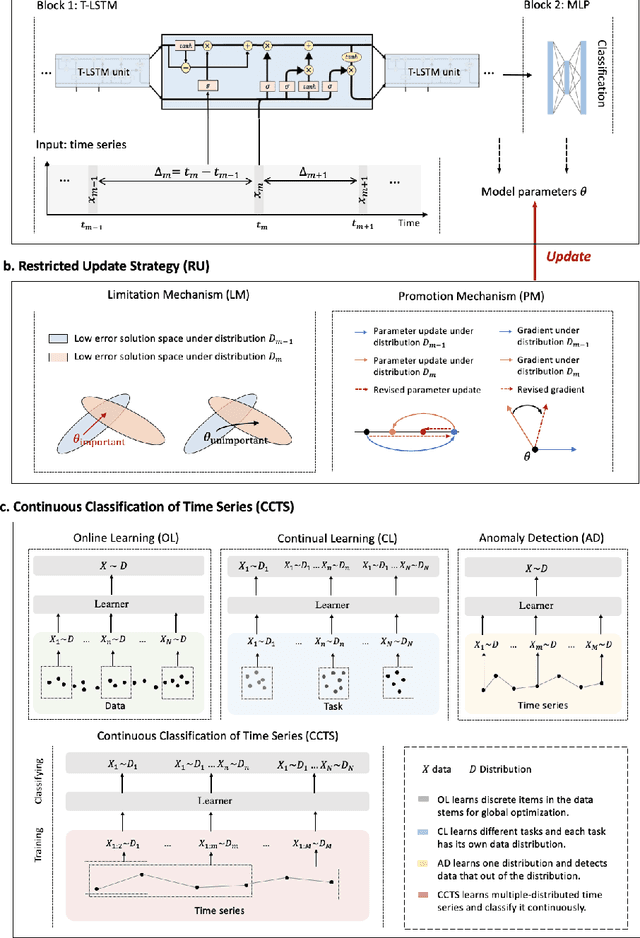

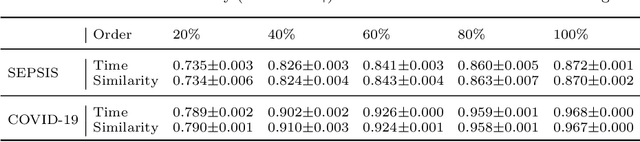

Continuous Diagnosis and Prognosis by Controlling the Update Process of Deep Neural Networks

Oct 06, 2022

Abstract:Continuous diagnosis and prognosis are essential for intensive care patients. They can provide more opportunities for timely treatment and rational resource allocation, especially for sepsis, a main cause of death in the ICU, and COVID-19, a new worldwide epidemic. Although deep learning methods have shown great superiority in many medical tasks, they tend to forget catastrophically, overfit, and produce results too late when performing diagnosis and prognosis in the continuous mode. In this work, we summarize the three requirements of this task, propose a new concept, continuous classification of time series (CCTS), and design a novel model training method, the restricted update strategy of neural networks (RU). In the context of continuous prognosis, our method outperformed all baselines and achieved average accuracies of 90%, 97%, and 85% on sepsis prognosis, COVID-19 mortality prediction, and eight-disease classification, respectively. Moreover, our method can endow deep learning with interpretability, with the potential to explore disease mechanisms and provide a new horizon for medical research. We have achieved disease staging for sepsis and COVID-19, discovering four stages and three stages, respectively, each with its typical biomarkers. Further, our method is a data-agnostic and model-agnostic plug-in: it can be used to continuously prognose other diseases with staging and even to implement CCTS in other fields.

GRP-FED: Addressing Client Imbalance in Federated Learning via Global-Regularized Personalization

Aug 31, 2021

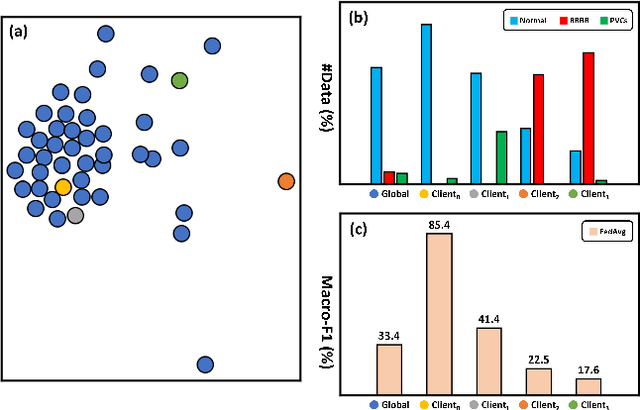

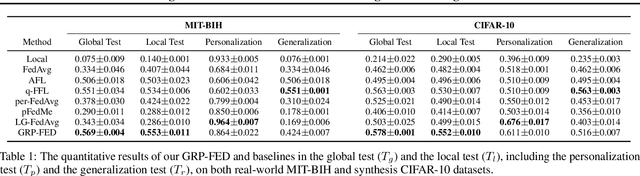

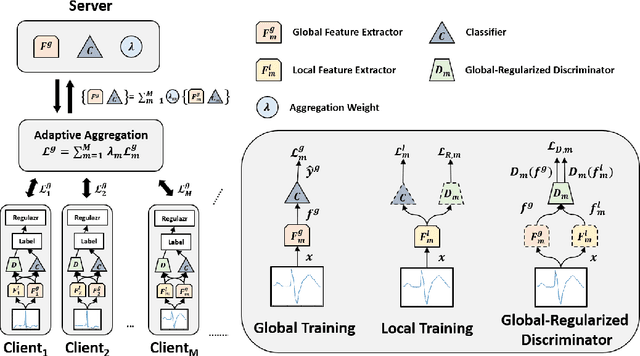

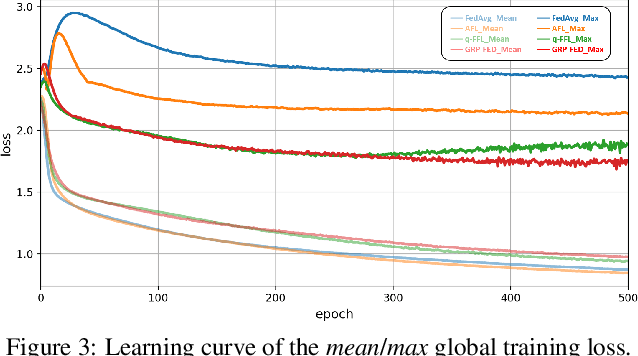

Abstract:Since real-world data are long-tailed, it is challenging for Federated Learning (FL) to train across decentralized clients in practical applications. We present Global-Regularized Personalization (GRP-FED) to tackle the data imbalance issue by considering a single global model and multiple local models for each client. With adaptive aggregation, the global model treats multiple clients fairly and mitigates the global long-tailed issue. Each local model is learned from the local data and aligns with its distribution for customization. To prevent the local model from just overfitting, GRP-FED applies an adversarial discriminator to regularize between the learned global-local features. Extensive results show that GRP-FED improves performance under both global and local scenarios on the real-world MIT-BIH and synthetic CIFAR-10 datasets, achieving comparable performance and addressing client imbalance.

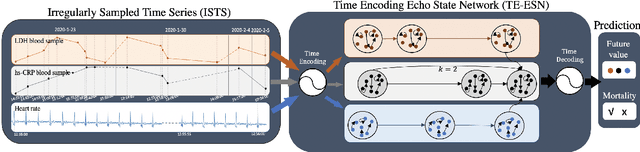

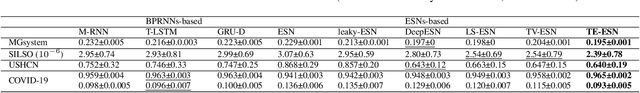

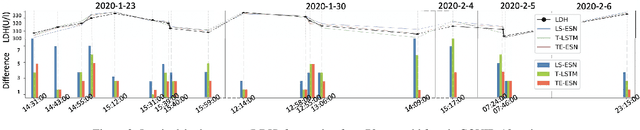

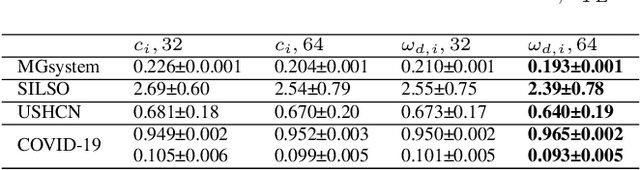

TE-ESN: Time Encoding Echo State Network for Prediction Based on Irregularly Sampled Time Series Data

May 02, 2021

Abstract:Prediction based on Irregularly Sampled Time Series (ISTS) is of wide concern in real-world applications. For more accurate prediction, methods should capture more data characteristics. Unlike ordinary time series, ISTS is characterised by irregular time intervals within a series and different sampling rates across series. However, existing methods make suboptimal predictions because they artificially introduce new dependencies within a time series and learn biased relations among time series when modeling these two characteristics. In this work, we propose a novel Time Encoding (TE) mechanism. TE can embed the time information as time vectors in the complex domain. It has the properties of absolute distance and relative distance under different sampling rates, which helps to represent both irregularities of ISTS. Meanwhile, we create a new model structure named Time Encoding Echo State Network (TE-ESN). It is the first ESN-based model that can process ISTS data. In addition, TE-ESN can incorporate long short-term memories and series fusion to grasp horizontal and vertical relations. Experiments on one chaotic system and three real-world datasets show that TE-ESN performs better than all baselines and has better reservoir property.
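Why a complex-domain embedding of time can carry relative distance can be seen with a small sketch. It assumes a plain complex-exponential encoding, e^{iωt}, which is an illustration only and not necessarily the TE formula of the paper; the frequencies in `omegas` and the function names `time_encoding` and `relative_phase` are hypothetical.

```python
import cmath

def time_encoding(t, omegas):
    """Map a timestamp t to a vector of unit complex numbers exp(i * w * t).

    Assumed form for illustration; the abstract does not specify the encoding.
    """
    return [cmath.exp(1j * w * t) for w in omegas]

def relative_phase(enc_a, enc_b):
    """Elementwise enc_a * conj(enc_b) = exp(i * w * (t_a - t_b)).

    The result depends only on the time difference, not on absolute times.
    """
    return [a * b.conjugate() for a, b in zip(enc_a, enc_b)]

omegas = [1.0, 0.1, 0.01]  # hypothetical frequencies

# Two pairs of irregular timestamps sharing the same gap of 3.0.
r1 = relative_phase(time_encoding(5.0, omegas), time_encoding(2.0, omegas))
r2 = relative_phase(time_encoding(13.5, omegas), time_encoding(10.5, omegas))

same_gap_matches = all(abs(x - y) < 1e-9 for x, y in zip(r1, r2))
```

Because the timestamps enter as real numbers rather than step indices, an encoding of this kind needs no resampling or imputation to handle irregular intervals.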
