Der-Hau Lee

Robust Tube-based Control Strategy for Vision-guided Autonomous Vehicles

Mar 24, 2025

Abstract: A robust control strategy for autonomous vehicles can improve system stability, enhance riding comfort, and prevent driving accidents. This paper presents a novel interpolation tube-based constrained iterative linear quadratic regulator (itube-CILQR) algorithm for autonomous computer-vision-based vehicle lane-keeping. The goal of the algorithm is to enhance robustness during high-speed cornering on tight turns. The advantages of itube-CILQR over the standard tube-approach include reduced system conservatism and increased computational speed. Numerical and vision-based experiments were conducted to examine the feasibility of the proposed algorithm. The proposed itube-CILQR algorithm is better suited to vehicle lane-keeping than variational CILQR-based methods and model predictive control (MPC) approaches using a classical interior-point solver. Specifically, in evaluation experiments, itube-CILQR achieved an average runtime of 3.16 ms to generate a control signal to guide a self-driving vehicle; itube-MPC typically required a 4.67-times longer computation time to complete the same task. Moreover, the influence of conservatism on system behavior was investigated by exploring the interpolation variable trajectories derived from the proposed itube-CILQR algorithm during lane-keeping maneuvers.
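As a quick worked check of the runtime figures quoted above, the sketch below derives the itube-MPC runtime they imply. It assumes "4.67-times longer" means 4.67 times as long as the itube-CILQR runtime; the abstract does not state the itube-MPC figure directly, and the names `implied_runtime_ms`, `ITUBE_CILQR_MS`, and `SLOWDOWN` are illustrative only.

```python
# Figures quoted in the abstract.
ITUBE_CILQR_MS = 3.16  # average itube-CILQR runtime per control signal (ms)
SLOWDOWN = 4.67        # reported itube-MPC to itube-CILQR runtime ratio


def implied_runtime_ms(base_ms: float, ratio: float) -> float:
    """Runtime implied by reading 'ratio-times longer' as 'ratio times as long'."""
    return base_ms * ratio


# Assumption: the ratio applies to the 3.16 ms average, not to a per-run figure.
itube_mpc_ms = implied_runtime_ms(ITUBE_CILQR_MS, SLOWDOWN)

print(f"implied itube-MPC runtime: {itube_mpc_ms:.2f} ms")
print(f"implied update rate, itube-CILQR: {1000.0 / ITUBE_CILQR_MS:.0f} Hz")
print(f"implied update rate, itube-MPC:   {1000.0 / itube_mpc_ms:.0f} Hz")
```

Under that reading, the implied itube-MPC runtime is roughly 14.8 ms per control signal.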

Lane-Keeping Control of Autonomous Vehicles Through a Soft-Constrained Iterative LQR

Nov 28, 2023

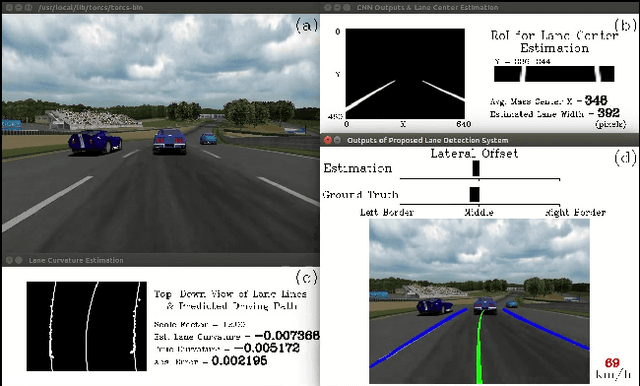

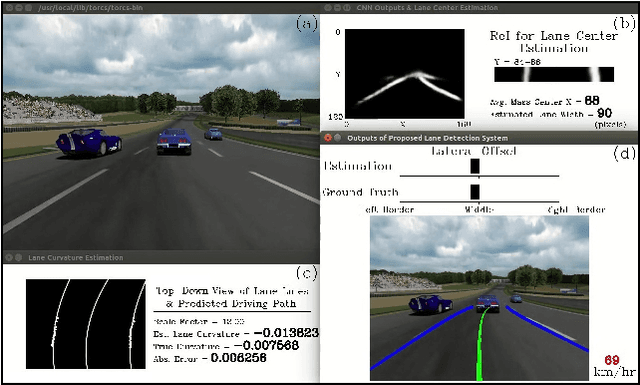

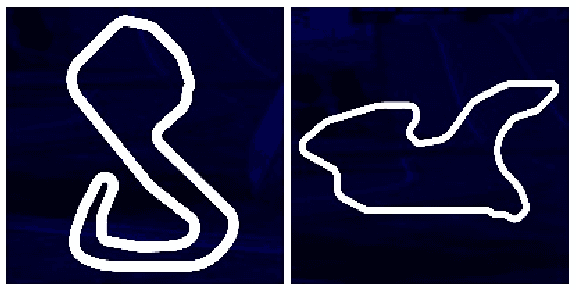

Abstract: The accurate prediction of smooth steering inputs is crucial for autonomous vehicle applications because control actions with jitter might cause the vehicle system to become unstable. To address this problem in automobile lane-keeping control without the use of additional smoothing algorithms, we developed a soft-constrained iterative linear-quadratic regulator (soft-CILQR) algorithm by integrating the CILQR algorithm with a model predictive control (MPC) constraint relaxation method. We incorporated slack variables into the state and control barrier functions of the soft-CILQR solver to soften the constraints in the optimization process so that stabilizing control inputs can be calculated in a relatively simple manner. Two types of automotive lane-keeping experiments were conducted with a linear system dynamics model to test the performance of the proposed soft-CILQR algorithm and to compare its performance with that of the CILQR algorithm: numerical simulations and experiments involving challenging vision-based maneuvers. In the numerical simulations, the soft-CILQR and CILQR solvers managed to drive the system toward the reference state asymptotically; however, the soft-CILQR solver obtained smooth steering input trajectories more easily than did the CILQR solver under conditions involving additive disturbances. In the experiments with visual inputs, the soft-CILQR controller outperformed the CILQR controller in terms of tracking accuracy and steering smoothness during the driving of an ego vehicle on TORCS.
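To illustrate the general idea of softening a barrier function with a slack variable, here is a minimal sketch. It is not the soft-CILQR formulation from the paper: the exponential barrier shape, the quadratic slack penalty, the weights `q1`, `q2`, `rho`, and the grid search in `best_slack` are all assumptions chosen for illustration.

```python
import math


def hard_barrier(c: float, q1: float = 1.0, q2: float = 5.0) -> float:
    """Exponential barrier cost for a constraint written as c <= 0 (assumed form)."""
    return q1 * math.exp(q2 * c)


def soft_barrier(c: float, eps: float, q1: float = 1.0, q2: float = 5.0,
                 rho: float = 10.0) -> float:
    """Barrier on the relaxed constraint c <= eps, plus a penalty on the slack eps."""
    return q1 * math.exp(q2 * (c - eps)) + rho * eps ** 2


def best_slack(c: float, q1: float = 1.0, q2: float = 5.0, rho: float = 10.0,
               eps_max: float = 2.0, steps: int = 2000) -> float:
    """Grid search for the non-negative slack that minimizes the softened cost."""
    candidates = [eps_max * i / steps for i in range(steps + 1)]
    return min(candidates, key=lambda e: soft_barrier(c, e, q1, q2, rho))


if __name__ == "__main__":
    for c in (-1.0, 0.0, 0.5):  # well inside, on the boundary, violating
        eps = best_slack(c)
        print(f"c={c:+.1f}  hard={hard_barrier(c):.3f}  "
              f"soft={soft_barrier(c, eps):.3f}  slack={eps:.3f}")
```

In this toy setting the slack stays near zero while the constraint is comfortably satisfied and grows only when it is violated, so the cost rises more gently near the boundary. That gentler growth is one plausible reason relaxed constraints tend to produce smoother inputs.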

Efficient Perception, Planning, and Control Algorithms for Vision-Based Automated Vehicles

Sep 15, 2022

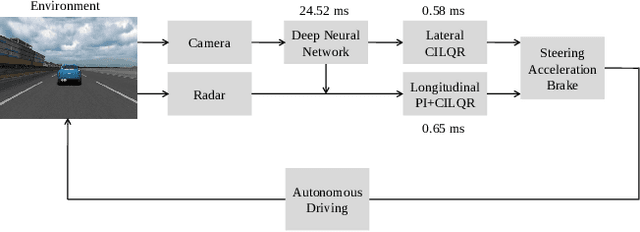

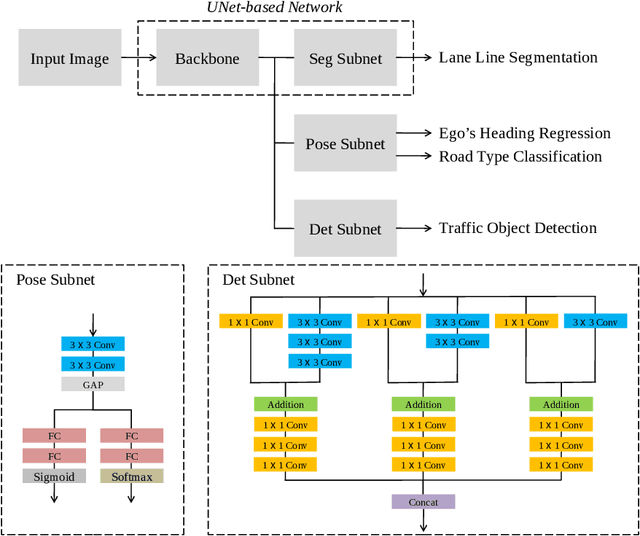

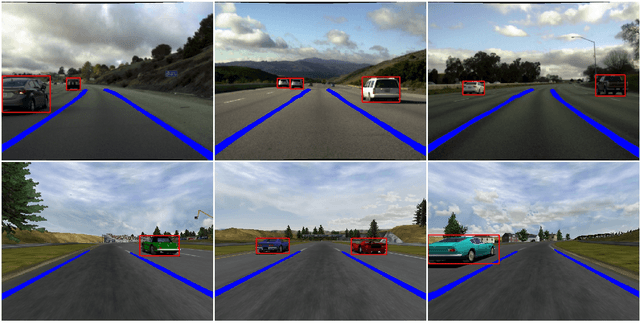

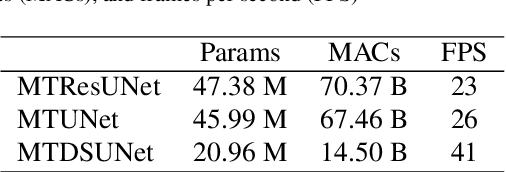

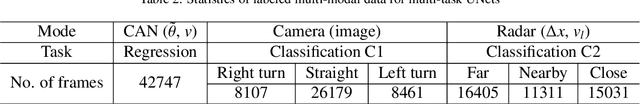

Abstract: Owing to resource limitations, efficient computation systems have long been a critical demand for those designing autonomous vehicles. Additionally, sensor cost and size restrict the development of self-driving cars. This paper presents an efficient framework for the operation of vision-based automatic vehicles; a front-facing camera and a few inexpensive radars are the required sensors for driving environment perception. The proposed algorithm comprises a multi-task UNet (MTUNet) network for extracting image features and constrained iterative linear quadratic regulator (CILQR) modules for rapid lateral and longitudinal motion planning. The MTUNet is designed to simultaneously solve lane line segmentation, ego vehicle heading angle regression, road type classification, and traffic object detection tasks at an approximate speed of 40 FPS when an RGB image of size 228 × 228 is fed into it. The CILQR algorithms then take processed MTUNet outputs and radar data as their input to produce driving commands for lateral and longitudinal vehicle automation guidance; both optimal control problems can be solved within 1 ms. The proposed CILQR controllers are shown to be more efficient than the sequential quadratic programming (SQP) methods and can collaborate with the MTUNet to drive a car autonomously in unseen simulation environments for lane-keeping and car-following maneuvers. Our experiments demonstrate that the proposed autonomous driving system is applicable to modern automobiles.
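For readers unfamiliar with the LQR machinery that iterative LQR methods build on, the sketch below runs the backward Riccati recursion for a scalar linear system. It is an unconstrained, textbook finite-horizon LQR, not the CILQR planner described above. The one-state model `x[k+1] = a*x[k] + b*u[k]` (read here as a lateral offset driven by a correction input) and all numeric weights are hypothetical.

```python
def lqr_gains(a: float, b: float, q: float, r: float,
              q_final: float, horizon: int) -> list:
    """Backward Riccati recursion for x[k+1] = a*x[k] + b*u[k].

    Minimizes sum(q*x^2 + r*u^2) + q_final*x_N^2 and returns the
    time-ordered feedback gains K[k] used in u[k] = -K[k] * x[k].
    """
    p = q_final  # cost-to-go weight at the end of the horizon
    gains = []
    for _ in range(horizon):
        k = a * b * p / (r + b * b * p)   # optimal gain for this step
        p = q + a * a * p - a * b * p * k  # Riccati update of the cost-to-go
        gains.append(k)
    gains.reverse()  # the recursion runs backward in time
    return gains


def rollout(x0: float, a: float, b: float, gains: list) -> list:
    """Simulate the closed loop and return the state trajectory."""
    xs = [x0]
    for k in gains:
        u = -k * xs[-1]
        xs.append(a * xs[-1] + b * u)
    return xs


if __name__ == "__main__":
    # Hypothetical numbers: 1 m initial offset, 50-step horizon.
    gains = lqr_gains(a=1.0, b=0.1, q=1.0, r=0.1, q_final=1.0, horizon=50)
    xs = rollout(1.0, 1.0, 0.1, gains)
    print(f"first gain: {gains[0]:.3f}, final offset: {xs[-1]:.2e}")
```

An iterative LQR solver repeats a pass of this kind around successive linearizations of a nonlinear model, and constrained variants add barrier terms to the cost. The low per-step cost of the recursion is one reason such solvers can be fast.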

End-to-End Multi-Task Deep Learning and Model Based Control Algorithm for Autonomous Driving

Dec 16, 2021

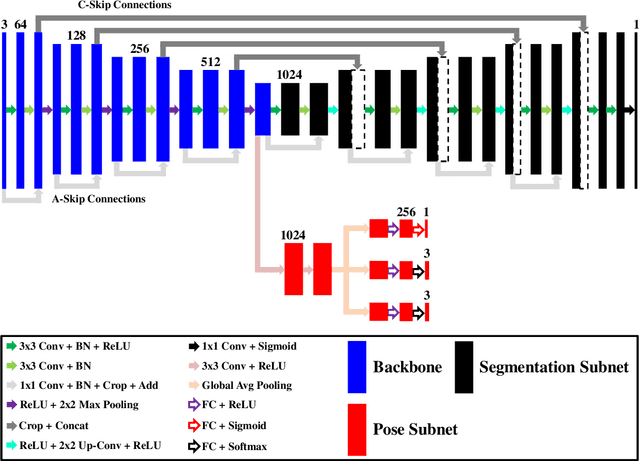

Abstract: End-to-end driving with a deep learning neural network (DNN) has become a rapidly growing paradigm of autonomous driving in industry and academia. Yet safety measures and interpretability still pose challenges to this paradigm. We propose an end-to-end driving algorithm that integrates multi-task DNN, path prediction, and control models in a pipeline of data flow from sensory devices through these models to driving decisions. It provides quantitative measures to evaluate the holistic, dynamic, and real-time performance of end-to-end driving systems, and thus allows their safety and interpretability to be quantified. The DNN is a modified UNet, a well-known encoder-decoder neural network for semantic segmentation. It consists of one segmentation, one regression, and two classification tasks for lane segmentation, path prediction, and vehicle controls. We present three variants of the modified UNet architecture having different complexities, compare them on different tasks in four static measures for both single and multi-task (MT) architectures, and then identify the best one by two additional dynamic measures in real-time simulation. We also propose a learning- and model-based longitudinal controller using a model predictive control method. With the Stanley lateral controller, our results show that MTUNet outperforms an earlier modified UNet in terms of curvature and lateral offset estimation on curvy roads at normal speed, which has been tested in a real car driving on real roads.
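The Stanley lateral controller mentioned above is commonly written as a heading-error term plus an arctangent of the gain-scaled lateral offset divided by speed. The sketch below implements that standard textbook form. The gain, the steering limit, the small softening constant, and the sign convention are assumptions and are not taken from the paper.

```python
import math


def stanley_steering(heading_error: float, lateral_offset: float, speed: float,
                     gain: float = 1.0,
                     max_steer: float = math.radians(30.0),
                     softening: float = 1e-3) -> float:
    """Steering angle (rad) from the textbook Stanley control law.

    heading_error:  path heading minus vehicle heading (rad)
    lateral_offset: signed cross-track error at the front axle (m); positive
                    values are assumed to call for positive steering
    speed:          forward speed (m/s), assumed non-negative
    """
    # Cross-track correction; the softening term avoids division by zero at rest.
    cross_track_term = math.atan2(gain * lateral_offset, speed + softening)
    steer = heading_error + cross_track_term
    # Saturate at the (assumed) mechanical steering limit.
    return max(-max_steer, min(max_steer, steer))


if __name__ == "__main__":
    for v in (2.0, 10.0, 25.0):
        delta = stanley_steering(heading_error=0.02, lateral_offset=0.5, speed=v)
        print(f"speed={v:5.1f} m/s -> steer={math.degrees(delta):6.2f} deg")
```

Because the offset term is divided by speed, the same lateral error produces a smaller correction at higher speed, which is the usual motivation for this law in lane-keeping.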

End-to-End Deep Learning of Lane Detection and Path Prediction for Real-Time Autonomous Driving

Feb 09, 2021

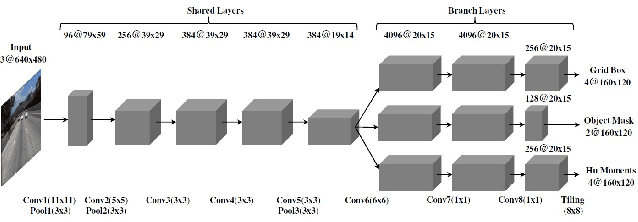

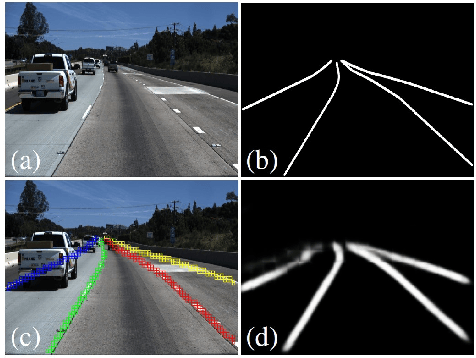

Abstract: We propose an end-to-end three-task convolutional neural network (3TCNN) having two regression branches of bounding boxes and Hu moments and one classification branch of object masks for lane detection and road recognition. The Hu-moment regressor performs lane localization and road guidance using local and global Hu moments of segmented lane objects, respectively. Based on 3TCNN, we then propose lateral offset and path prediction (PP) algorithms to form an integrated model (3TCNN-PP) that can predict the driving path with dynamic estimation of lane centerline and path curvature for real-time autonomous driving. We also develop a CNN-PP simulator that can be used to train a CNN with real or artificial traffic images, test it with artificial images, quantify its dynamic errors, and visualize its qualitative performance. Simulation results show that 3TCNN-PP is comparable to related CNNs and better than a previous CNN-PP. The code, annotated data, and simulation videos of this work can be found on our website for further research on NN-PP algorithms for autonomous driving.
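As background on the Hu moments referred to above, the sketch below computes the first two Hu invariants of a binary mask from normalized central moments and checks that they are unchanged by a 90-degree rotation. It only illustrates the shape descriptor itself; the 3TCNN regressor and its local and global variants are not reproduced, and the toy mask and helper names are made up for the example.

```python
def hu_first_two(mask):
    """First two Hu invariants of a binary mask given as a list of 0/1 rows."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    m00 = len(pts)  # zeroth moment: area of the segmented object in pixels
    if m00 == 0:
        raise ValueError("mask contains no foreground pixels")
    cx = sum(x for x, _ in pts) / m00  # centroid
    cy = sum(y for _, y in pts) / m00

    def eta(p, q):
        # Central moment, normalized for scale by m00^(1 + (p+q)/2).
        mu = sum((x - cx) ** p * (y - cy) ** q for x, y in pts)
        return mu / m00 ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    phi1 = n20 + n02
    phi2 = (n20 - n02) ** 2 + 4 * n11 ** 2
    return phi1, phi2


def rotate90(mask):
    """Rotate a mask by 90 degrees clockwise."""
    return [list(row) for row in zip(*mask[::-1])]


if __name__ == "__main__":
    # Toy L-shaped blob standing in for a segmented lane object.
    lane = [
        [0, 1, 0, 0],
        [0, 1, 0, 0],
        [0, 1, 1, 1],
    ]
    print("original:", hu_first_two(lane))
    print("rotated: ", hu_first_two(rotate90(lane)))
```

The two printed pairs should agree up to floating-point rounding, which reflects the translation and rotation invariance that makes these moments useful as compact shape descriptors.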

Deep Learning and Control Algorithms of Direct Perception for Autonomous Driving

Nov 12, 2019

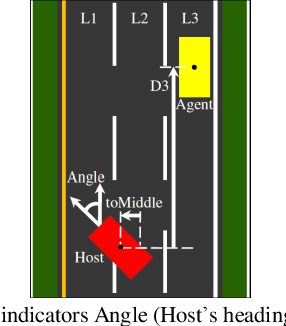

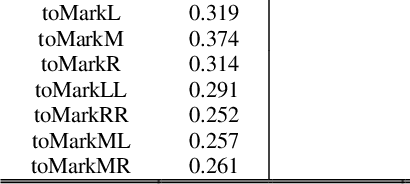

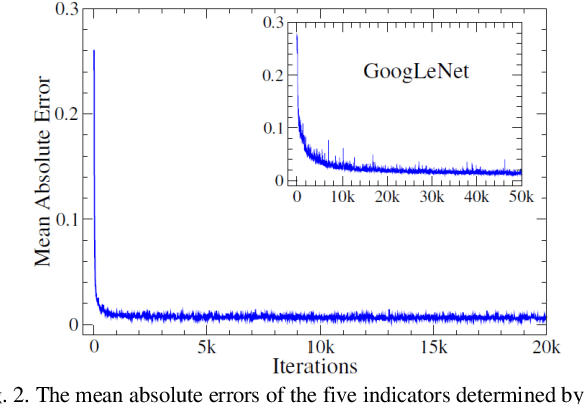

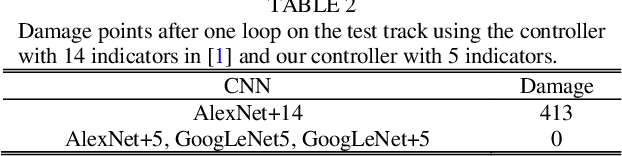

Abstract: Based on the direct perception paradigm of autonomous driving, we investigate and modify the CNNs (convolutional neural networks) AlexNet and GoogLeNet that map an input image to a few perception indicators (heading angle, distances to preceding cars, and distance to road centerline) for estimating driving affordances in highway traffic. We also design a controller with these indicators and the short-range sensor information of TORCS (the open racing car simulator) for driving simulated cars to avoid collisions. We collect a set of images from a TORCS camera in various driving scenarios, train these CNNs using the dataset, test them in unseen traffic, and find that they perform better than earlier algorithms and controllers in terms of training efficiency and driving stability. Source code and data are available on our website.
