Efficient Perception, Planning, and Control Algorithms for Vision-Based Automated Vehicles

Sep 15, 2022

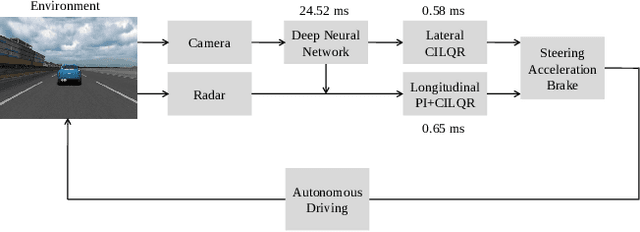

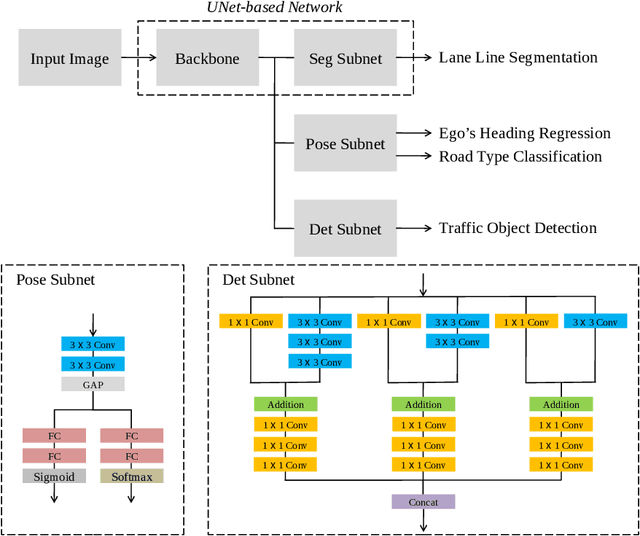

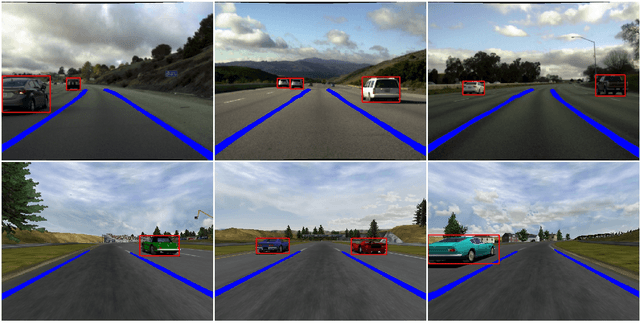

Owing to resource limitations, computational efficiency has long been a critical requirement in the design of autonomous vehicles. Sensor cost and size further restrict the development of self-driving cars. This paper presents an efficient framework for operating vision-based automated vehicles; the only sensors required for perceiving the driving environment are a front-facing camera and a few inexpensive radars. The proposed algorithm comprises a multi-task UNet (MTUNet) network for extracting image features and constrained iterative linear quadratic regulator (CILQR) modules for rapid lateral and longitudinal motion planning. The MTUNet is designed to solve lane line segmentation, ego vehicle heading angle regression, road type classification, and traffic object detection simultaneously, at approximately 40 FPS for an RGB input image of size 228 × 228. The CILQR algorithms then take the processed MTUNet outputs and radar data as input and produce driving commands for lateral and longitudinal vehicle guidance; both optimal control problems can be solved within 1 ms. The proposed CILQR controllers are shown to be more efficient than sequential quadratic programming (SQP) methods and can work with the MTUNet to drive a car autonomously in unseen simulation environments for lane-keeping and car-following maneuvers. Our experiments demonstrate that the proposed autonomous driving system is applicable to modern automobiles.
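To give a feel for the kind of computation a linear quadratic regulator performs, the following is a minimal sketch of a finite-horizon LQR backward Riccati pass for lateral lane keeping. It is not the paper's CILQR: it omits the constraints and the iterative relinearization of nonlinear dynamics that CILQR adds, and it assumes a simple linearized kinematic model. The state layout (lateral offset `e`, heading error `th`), the yaw-rate control, the cost weights, and the function names `lqr_gains` and `simulate` are all illustrative assumptions, not taken from the paper.

```python
def lqr_gains(v, dt, horizon, q_e=1.0, q_th=1.0, r=0.1):
    """Backward Riccati recursion for state x = [e, th] and control u = yaw rate.

    Assumed (hypothetical) linear model:
        e'  = e  + v * dt * th
        th' = th + dt * u
    i.e. A = [[1, v*dt], [0, 1]] and B = [[0], [dt]].
    Stage cost: q_e * e^2 + q_th * th^2 + r * u^2 (weights are assumptions).
    """
    a = v * dt
    Q = [[q_e, 0.0], [0.0, q_th]]
    P = [row[:] for row in Q]  # terminal cost-to-go P_N = Q
    gains = []
    for _ in range(horizon):
        # B^T P is a 1x2 row vector because the control is scalar.
        btp = [dt * P[1][0], dt * P[1][1]]
        s = r + dt * btp[1]  # R + B^T P B
        # K = (R + B^T P B)^-1 B^T P A
        k = [btp[0] / s, (btp[0] * a + btp[1]) / s]
        # Closed-loop matrix A - B K
        acl = [[1.0, a], [-dt * k[0], 1.0 - dt * k[1]]]
        # m = P (A - B K)
        m = [[sum(P[i][j] * acl[j][c] for j in range(2)) for c in range(2)]
             for i in range(2)]
        # P <- Q + A^T m, with A^T = [[1, 0], [a, 1]]
        P = [
            [Q[0][0] + m[0][0], Q[0][1] + m[0][1]],
            [Q[1][0] + a * m[0][0] + m[1][0], Q[1][1] + a * m[0][1] + m[1][1]],
        ]
        gains.append(k)
    gains.reverse()  # gains[0] now applies at the first time step
    return gains


def simulate(gains, e, th, v, dt):
    """Roll the assumed model forward under the feedback law u = -K x."""
    for k in gains:
        u = -(k[0] * e + k[1] * th)
        e, th = e + v * dt * th, th + dt * u
    return e, th


if __name__ == "__main__":
    gains = lqr_gains(v=10.0, dt=0.05, horizon=200)
    e_final, th_final = simulate(gains, e=0.5, th=0.1, v=10.0, dt=0.05)
    print(f"final lateral offset: {e_final:.6f} m, heading error: {th_final:.6f} rad")
```

Because the recursion only involves small fixed-size matrices, each pass is cheap, which is consistent in spirit with the sub-millisecond solve times reported above; an iterative, constrained variant would repeat a pass like this around a nominal trajectory while penalizing constraint violations.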
