Deokki Hong

Enhancing Generalization in Data-free Quantization via Mixup-class Prompting

Jul 29, 2025

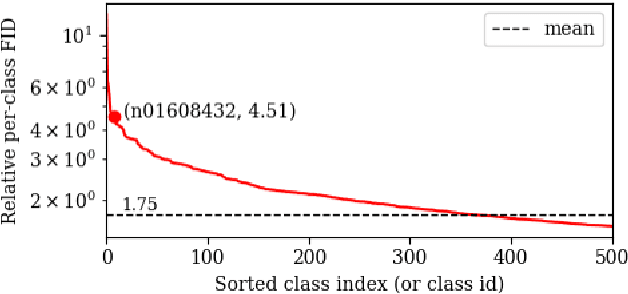

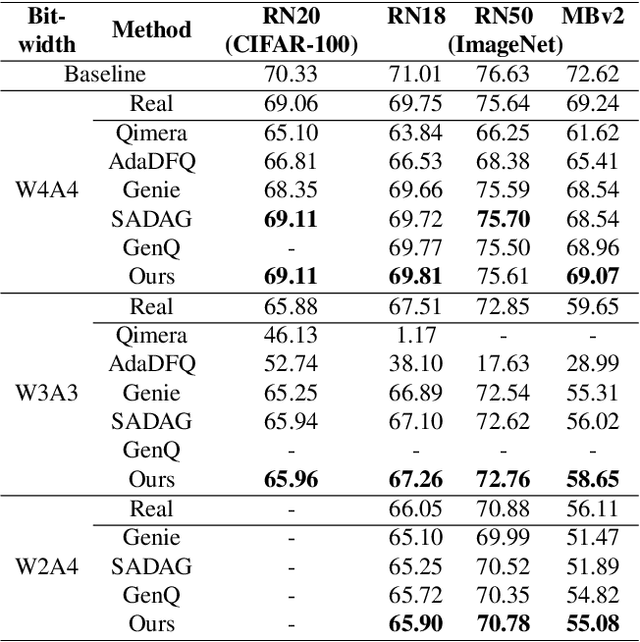

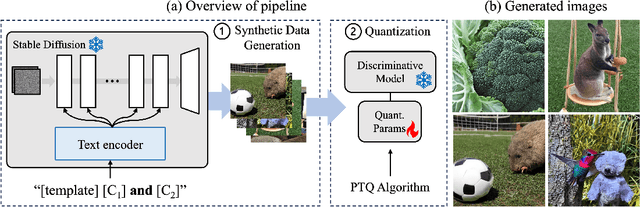

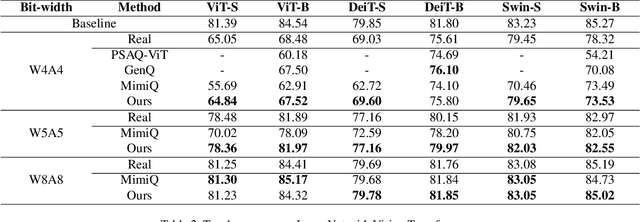

Abstract: Post-training quantization (PTQ) improves efficiency but struggles with limited calibration data, especially under privacy constraints. Data-free quantization (DFQ) mitigates this by generating synthetic images with generative models such as generative adversarial networks (GANs) and text-conditioned latent diffusion models (LDMs), then applying existing PTQ algorithms. However, the relationship between the generated synthetic images and the generalizability of the quantized model during PTQ remains underexplored. Without investigating this relationship, synthetic images generated by previous prompt engineering methods based on single-class prompts suffer from issues such as polysemy, leading to performance degradation. We propose mixup-class prompt, a mixup-based text prompting strategy that fuses multiple class labels at the text prompt level to generate diverse, robust synthetic data. This approach enhances generalization and improves optimization stability in PTQ. We provide quantitative insights through gradient norm and generalization error analysis. Experiments on convolutional neural networks (CNNs) and vision transformers (ViTs) show that our method consistently outperforms state-of-the-art DFQ methods such as GenQ. Furthermore, it pushes the performance boundary in extremely low-bit scenarios, achieving new state-of-the-art accuracy in challenging 2-bit weight, 4-bit activation (W2A4) quantization.
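As a rough illustration of fusing multiple class labels at the text prompt level, the sketch below samples distinct class names and joins them into one prompt for a text-conditioned generator. The function name `build_mixup_class_prompt`, the template string, and the "and" joiner are assumptions made for illustration; the abstract does not specify the actual prompt format.

```python
import random


def build_mixup_class_prompt(class_names, num_classes=2,
                             template="a photo of {}", rng=None):
    """Return (prompt, chosen) where the prompt mixes several class labels.

    Hypothetical helper: the template and the " and " joiner are
    illustrative assumptions, not the paper's prompt format.
    """
    if num_classes < 2:
        raise ValueError("a mixup prompt needs at least two classes")
    if len(class_names) < num_classes:
        raise ValueError("not enough class names to sample from")
    rng = rng or random.Random()
    # Sample distinct labels so the prompt always mixes different classes.
    chosen = rng.sample(list(class_names), num_classes)
    return template.format(" and ".join(chosen)), chosen


if __name__ == "__main__":
    labels = ["crane", "goldfish", "drum", "tabby cat"]
    prompt, chosen = build_mixup_class_prompt(labels, rng=random.Random(0))
    print(prompt, chosen)
```

A single-class prompt corresponds to naming only one label, which is where an ambiguous word such as "crane" can be read in more than one sense; naming a second class in the same prompt is one simple way to vary the generated content.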

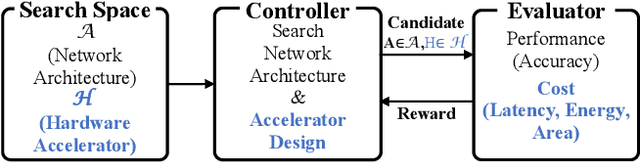

Enabling Hard Constraints in Differentiable Neural Network and Accelerator Co-Exploration

Jan 23, 2023

Abstract: Co-exploration of an optimal neural architecture and its hardware accelerator is an approach of rising interest that addresses the computational cost problem, especially in low-profile systems. The large co-exploration space is often handled by adopting the idea of differentiable neural architecture search. However, despite the superior search efficiency of differentiable co-exploration, it faces the critical challenge of not being able to systematically satisfy hard constraints such as frame rate. To handle the hard constraint problem of differentiable co-exploration, we propose HDX, which searches for hard-constrained solutions without compromising the global design objectives. By manipulating the gradients in the interest of the given hard constraint, high-quality solutions satisfying the constraint can be obtained.

Tech Report: One-stage Lightweight Object Detectors

Oct 31, 2022

Abstract: This work designs one-stage lightweight detectors that perform well in terms of mAP and latency. Starting from baseline models that target GPU and CPU respectively, various operations are substituted for the main operations in the backbone networks of the baseline models. In addition to experiments on backbone networks and operations, several feature pyramid network (FPN) architectures are investigated. Benchmarks and proposed detectors are analyzed in terms of the number of parameters, GFLOPs, GPU latency, CPU latency, and mAP on the MS COCO dataset, a benchmark dataset in object detection. This work proposes network architectures that are similar or better, considering the trade-off between accuracy and latency. For example, our proposed GPU-target backbone network outperforms that of YOLOX-tiny, which is selected as the benchmark, by 1.43x in speed and 0.5 mAP in accuracy on an NVIDIA GeForce RTX 2080 Ti GPU.

It's All In the Teacher: Zero-Shot Quantization Brought Closer to the Teacher

Apr 01, 2022

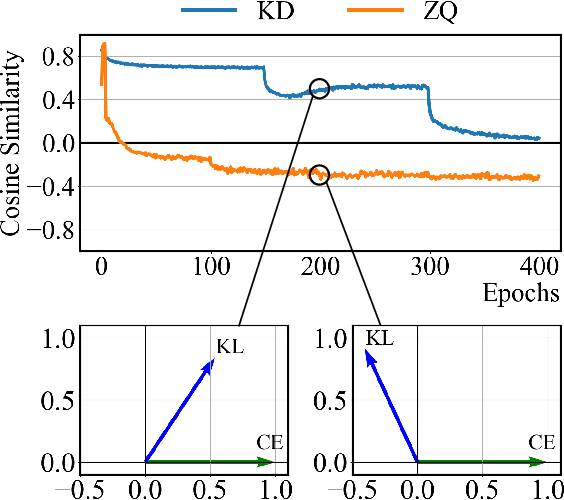

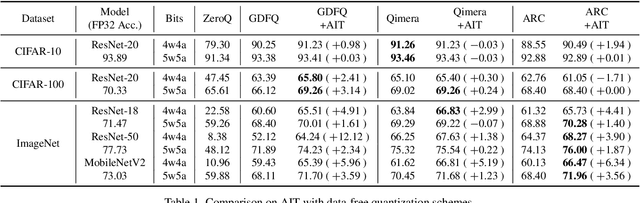

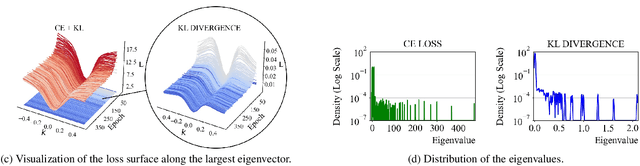

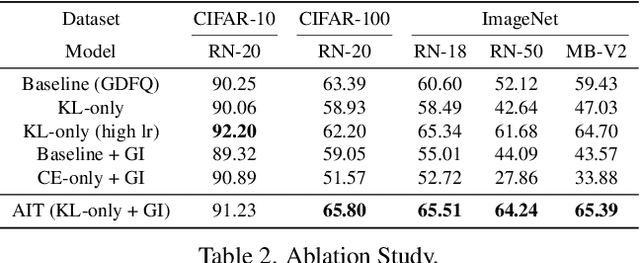

Abstract: Model quantization is considered a promising method to greatly reduce the resource requirements of deep neural networks. To deal with the performance drop induced by quantization errors, a popular method is to use training data to fine-tune quantized networks. In real-world environments, however, such a method is frequently infeasible because training data is unavailable due to security, privacy, or confidentiality concerns. Zero-shot quantization addresses such problems, usually by taking information from the weights of a full-precision teacher network to compensate for the performance drop of the quantized networks. In this paper, we first analyze the loss surface of state-of-the-art zero-shot quantization techniques and provide several findings. In contrast to usual knowledge distillation problems, zero-shot quantization often suffers from 1) the difficulty of optimizing multiple loss terms together, and 2) poor generalization capability due to the use of synthetic samples. Furthermore, we observe that many weights fail to cross the rounding threshold while training the quantized networks, even when it is necessary to do so for better performance. Based on these observations, we propose AIT, a simple yet powerful technique for zero-shot quantization, which addresses the aforementioned two problems in the following way: AIT i) uses a KL distance loss only, without a cross-entropy loss, and ii) manipulates gradients to guarantee that a certain portion of weights are properly updated after crossing the rounding thresholds. Experiments show that AIT outperforms many existing methods by a great margin, taking over the overall state-of-the-art position in the field.
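To make point i) concrete, the sketch below computes a KL-only distillation loss between teacher and student logits, with no cross-entropy term. It is a minimal pure-Python illustration under stated assumptions: the function names, the KL direction (teacher distribution as reference), and the `eps` smoothing constant are choices made here, not details given in the abstract. The gradient manipulation in point ii) is not shown.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def kl_only_loss(teacher_logits, student_logits):
    """Distillation loss made of the KL term alone (assumed direction:
    teacher output as the reference distribution)."""
    return kl_divergence(softmax(teacher_logits), softmax(student_logits))


if __name__ == "__main__":
    teacher = [2.0, 0.5, -1.0]
    student = [1.5, 0.7, -0.8]
    print(kl_only_loss(teacher, student))
```

The loss is zero when the quantized student reproduces the teacher's output distribution and grows as the two diverge, so it depends only on the teacher and not on labels assigned to the synthetic samples.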

Qimera: Data-free Quantization with Synthetic Boundary Supporting Samples

Nov 04, 2021

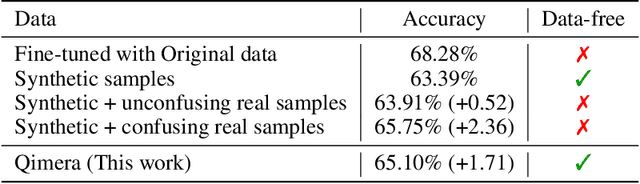

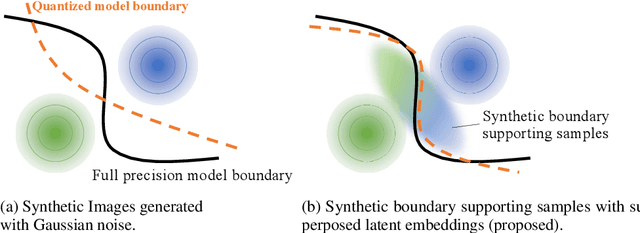

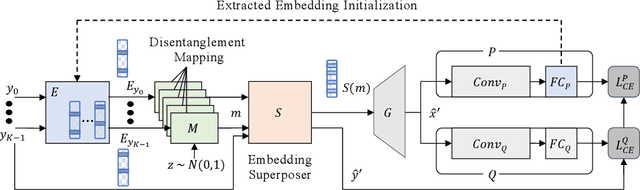

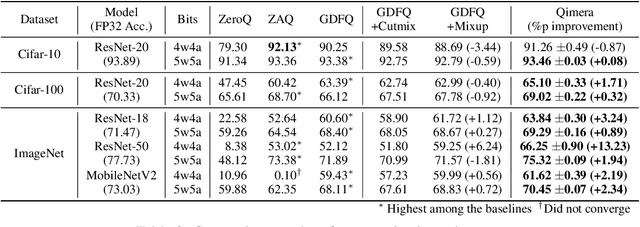

Abstract: Model quantization is known as a promising method to compress deep neural networks, especially for inference on lightweight mobile or edge devices. However, model quantization usually requires access to the original training data to maintain the accuracy of the full-precision models, which is often infeasible in real-world scenarios because of security and privacy issues. A popular approach to perform quantization without access to the original data is to use synthetically generated samples, based on batch-normalization statistics or adversarial learning. However, the drawback of such approaches is that they primarily rely on random noise input to the generator to attain diversity in the synthetic samples. We find that this is often insufficient to capture the distribution of the original data, especially around the decision boundaries. To this end, we propose Qimera, a method that uses superposed latent embeddings to generate synthetic boundary supporting samples. For the superposed embeddings to better reflect the original distribution, we also propose using an additional disentanglement mapping layer and extracting information from the full-precision model. The experimental results show that Qimera achieves state-of-the-art performance for various settings on data-free quantization. Code is available at https://github.com/iamkanghyunchoi/qimera.
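One way to picture a superposed latent embedding is as a weighted mix of two class embeddings, which a generator would then turn into a sample lying between the two classes. The sketch below shows only that mixing step. The convex-combination form, the function name `superpose_embeddings`, and the mixing weight `lam` are assumptions for illustration; the disentanglement mapping layer and the generator itself are not modeled, and the linked repository is the reference for the actual method.

```python
def superpose_embeddings(emb_a, emb_b, lam=0.5):
    """Mix two class embeddings as lam * emb_a + (1 - lam) * emb_b.

    Hypothetical helper: a plain convex combination, used here only to
    illustrate the idea of a superposed embedding.
    """
    if len(emb_a) != len(emb_b):
        raise ValueError("embeddings must have the same dimension")
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return [lam * a + (1.0 - lam) * b for a, b in zip(emb_a, emb_b)]


if __name__ == "__main__":
    cat = [1.0, 0.0, 0.0]   # toy embedding for one class
    dog = [0.0, 1.0, 0.0]   # toy embedding for another class
    print(superpose_embeddings(cat, dog, lam=0.5))
```

With `lam` near 0 or 1 the mixed embedding stays close to a single class, while values near 0.5 place it between the two, which is the region the abstract associates with decision boundaries.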

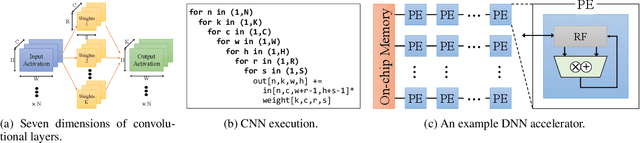

DANCE: Differentiable Accelerator/Network Co-Exploration

Sep 14, 2020

Abstract: To cope with the ever-increasing computational demand of DNN execution, recent neural architecture search (NAS) algorithms take hardware cost metrics, such as GPU latency, into account. To further pursue fast, efficient execution, DNN-specialized hardware accelerators are being designed for multiple purposes, far exceeding the efficiency of GPUs. However, those hardware-related metrics have been shown to exhibit non-linear relationships with the network architectures. It therefore becomes a chicken-and-egg problem whether to optimize the network against the accelerator or the accelerator against the network. In such circumstances, this work presents DANCE, a differentiable approach to the co-exploration of the hardware accelerator and network architecture design. At the heart of DANCE is a differentiable evaluator network. By modeling the hardware evaluation software with a neural network, the relation between the accelerator architecture and the hardware metrics becomes differentiable, allowing the search to be performed with backpropagation. Compared to naive existing approaches, our method performs co-exploration in a significantly shorter time while achieving superior accuracy and hardware cost metrics.
