Demin Xu

Fast and Accurate Multi-Agent Trajectory Prediction For Crowded Unknown Scenes

Jul 12, 2024

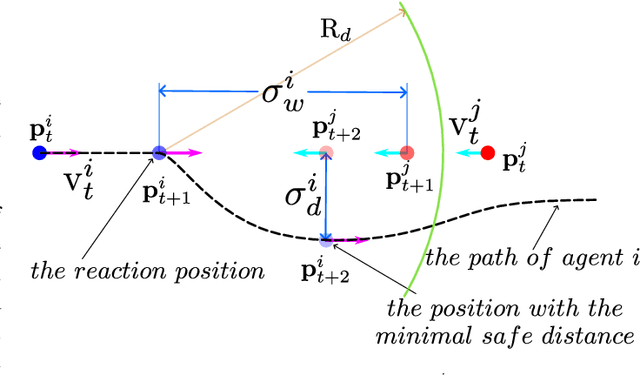

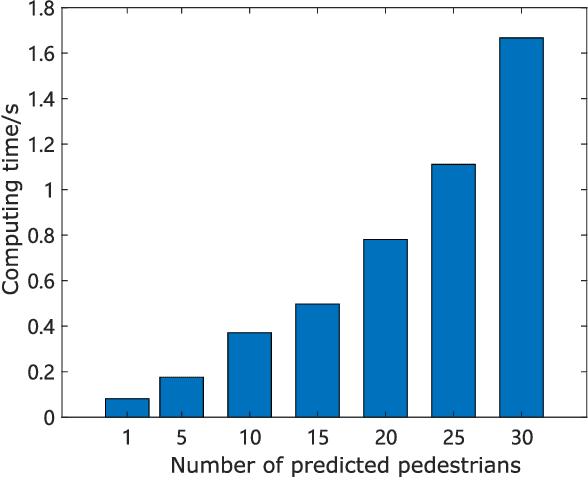

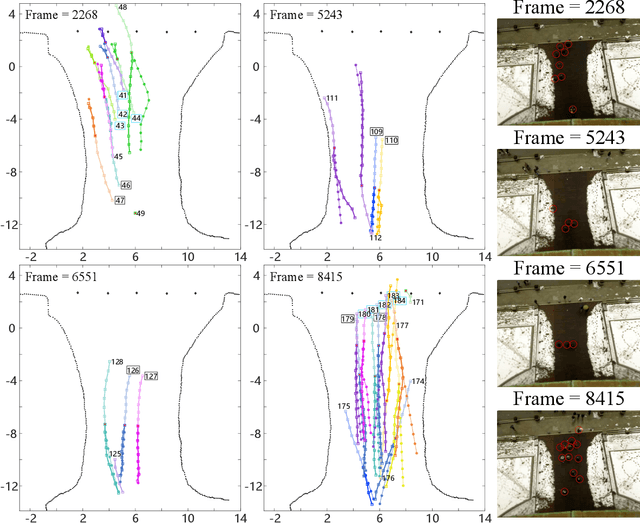

Abstract: This paper studies the problem of multi-agent trajectory prediction in crowded unknown environments. A novel framework based on energy function optimization is proposed to generate predicted trajectories. Firstly, a new energy function is designed for easier optimization. Secondly, an online optimization pipeline for calculating parameters and agents' velocities is developed. In this pipeline, we first design an efficient group division method based on the Fréchet distance to classify agents online. Then a strategy for decoupling the optimization of velocities and critical parameters in the energy function is developed, in which the salp swarm algorithm and gradient descent are integrated to solve the optimization problems more efficiently. Thirdly, we propose a similarity-based resample evaluation algorithm to predict agents' optimal goals, defined as the target-moving headings of agents, which effectively extracts hidden information in observed states and avoids learning agents' destinations from the training dataset in advance. Experiments and comparison studies verify the advantages of the proposed method in terms of prediction accuracy and speed.
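To make the group division idea concrete, here is a minimal sketch of the standard discrete Fréchet distance between two observed trajectories, followed by a simple threshold-based grouping. The abstract does not say which Fréchet variant or grouping rule the paper uses, so `discrete_frechet`, `group_by_threshold`, and the `threshold` parameter are hypothetical names chosen for illustration only.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def discrete_frechet(p: Sequence[Point], q: Sequence[Point]) -> float:
    """Discrete Frechet distance between two non-empty polylines (dynamic programming)."""
    if not p or not q:
        raise ValueError("both trajectories must be non-empty")
    n, m = len(p), len(q)
    # ca[i][j] holds the discrete Frechet distance between p[:i+1] and q[:j+1].
    ca = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(p[i], q[j])
            if i == 0 and j == 0:
                ca[i][j] = d
            elif i == 0:
                ca[i][j] = max(ca[0][j - 1], d)
            elif j == 0:
                ca[i][j] = max(ca[i - 1][0], d)
            else:
                best_prev = min(ca[i - 1][j], ca[i - 1][j - 1], ca[i][j - 1])
                ca[i][j] = max(best_prev, d)
    return ca[n - 1][m - 1]


def group_by_threshold(
    trajectories: Sequence[Sequence[Point]], threshold: float
) -> List[List[int]]:
    """Assumed grouping rule: join the first group whose first member is within `threshold`."""
    groups: List[List[int]] = []
    for idx, traj in enumerate(trajectories):
        for group in groups:
            if discrete_frechet(traj, trajectories[group[0]]) <= threshold:
                group.append(idx)
                break
        else:
            # No existing group is close enough, so this agent starts a new one.
            groups.append([idx])
    return groups


a = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
b = [(0.0, 1.0), (1.0, 1.0), (2.0, 1.0)]    # walks parallel to a, one unit away
c = [(0.0, 10.0), (1.0, 10.0), (2.0, 10.0)]  # far from both
groups = group_by_threshold([a, b, c], threshold=1.5)
```

With these toy inputs, agents 0 and 1 end up in the same group and agent 2 forms its own. The greedy rule depends on the order in which agents are visited; a real pipeline might prefer a clustering step that does not.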

QFree: A Universal Value Function Factorization for Multi-Agent Reinforcement Learning

Nov 01, 2023

Abstract: Centralized training is widely utilized in the field of multi-agent reinforcement learning (MARL) to assure the stability of the training process. Once a joint policy is obtained, it is critical to design a value function factorization method to extract optimal decentralized policies for the agents, which needs to satisfy the individual-global-max (IGM) principle. While imposing additional limitations on the IGM function class can help to meet the requirement, it comes at the cost of restricting its application to more complex multi-agent environments. In this paper, we propose QFree, a universal value function factorization method for MARL. We start by developing mathematically equivalent conditions of the IGM principle based on the advantage function, which ensure that the principle holds without any compromise, removing the conservatism of conventional methods. We then establish a more expressive mixing network architecture that can fulfill the equivalent factorization. In particular, a novel loss function is developed by considering the equivalent conditions as a regularization term during policy evaluation in the MARL algorithm. Finally, the effectiveness of the proposed method is verified in a nonmonotonic matrix game scenario. Moreover, we show that QFree achieves state-of-the-art performance in a general-purpose complex MARL benchmark environment, the StarCraft Multi-Agent Challenge (SMAC).
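As a small illustration of what the IGM principle requires, the sketch below checks whether the greedy joint action of a joint value table matches the tuple of each agent's individually greedy action. The two-agent payoff matrix is an illustrative nonmonotonic example and not necessarily the one used in the paper; `satisfies_igm` and the utility vectors are hypothetical, and unique maximizers are assumed.

```python
from typing import List, Sequence, Tuple


def argmax(values: Sequence[float]) -> int:
    """Index of the largest value (first one wins on ties)."""
    return max(range(len(values)), key=lambda i: values[i])


def joint_greedy(q_tot: Sequence[Sequence[float]]) -> Tuple[int, int]:
    """Joint action (row, column) that maximizes the joint value table."""
    pairs = [(i, j) for i in range(len(q_tot)) for j in range(len(q_tot[0]))]
    return max(pairs, key=lambda ij: q_tot[ij[0]][ij[1]])


def satisfies_igm(
    q_tot: Sequence[Sequence[float]], q1: Sequence[float], q2: Sequence[float]
) -> bool:
    """IGM holds when the per-agent greedy actions form the greedy joint action."""
    return joint_greedy(q_tot) == (argmax(q1), argmax(q2))


# Illustrative nonmonotonic payoff: the optimum (0, 0) is surrounded by heavy penalties.
PAYOFF: List[List[float]] = [
    [8.0, -12.0, -12.0],
    [-12.0, 0.0, 0.0],
    [-12.0, 0.0, 0.0],
]

# Per-agent utilities that rank action 0 highest are consistent with IGM here.
consistent = satisfies_igm(PAYOFF, [1.0, 0.0, 0.0], [1.0, 0.0, 0.0])

# Row and column means are where a uniformly weighted additive fit places its
# greedy actions; they prefer the "safe" action 1 and therefore violate IGM.
row_means = [sum(row) / len(row) for row in PAYOFF]
col_means = [sum(col) / len(col) for col in zip(*PAYOFF)]
additive_style = satisfies_igm(PAYOFF, row_means, col_means)
```

The second check fails because averaging over the other agent's actions hides the high-payoff joint action behind its penalties, which is the kind of restriction a more expressive factorization aims to avoid.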

Policy Learning for Nonlinear Model Predictive Control with Application to USVs

Nov 18, 2022

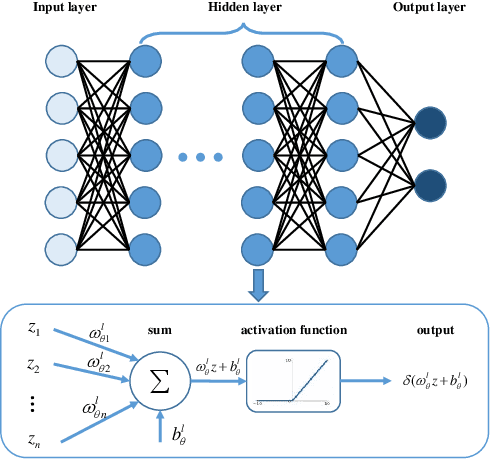

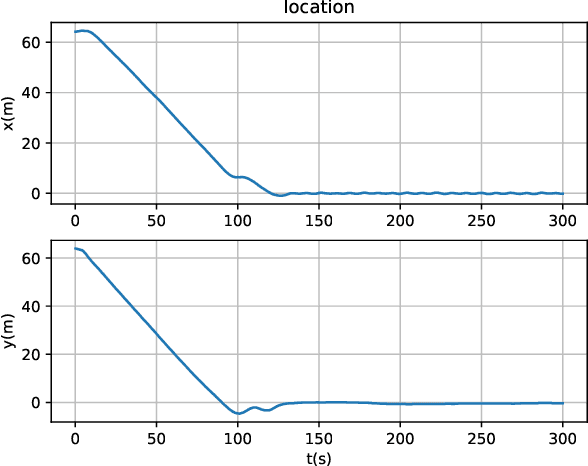

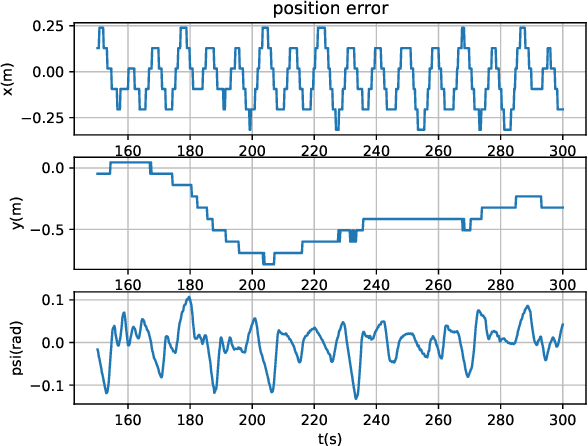

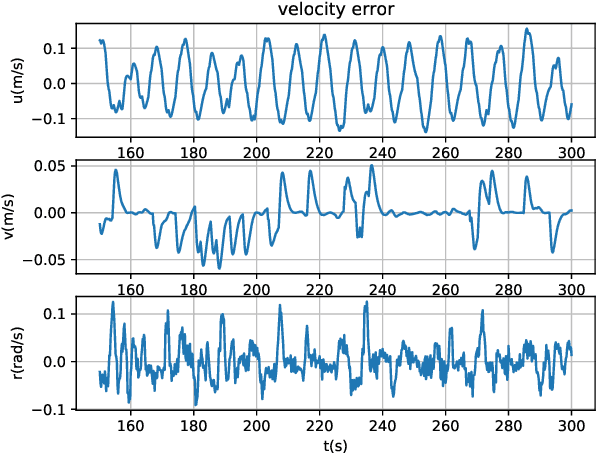

Abstract: The unaffordable computation load of nonlinear model predictive control (NMPC) has for decades prevented it from being used in robots with high sampling rates. This paper is concerned with the policy learning problem for nonlinear MPC with system constraints, and its application to unmanned surface vehicles (USVs), where the nonlinear MPC policy is learned offline and deployed online to resolve the computational complexity issue. A deep neural network (DNN) based policy learning MPC (PL-MPC) method is proposed to avoid solving nonlinear optimal control problems online. The detailed policy learning method is developed and the PL-MPC algorithm is designed. A strategy to ensure the practical feasibility of policy implementation is proposed, and it is theoretically proved that the closed-loop system under the proposed method is asymptotically stable in probability. In addition, we apply the PL-MPC algorithm successfully to the motion control of USVs. It is shown that the proposed algorithm can be implemented at a sampling rate of up to 5 Hz with high-precision motion control. The experiment video is available at: https://v.youku.com/v_show/id_XNTkwMTM0NzM5Ng==.html
