Debora Clever

Learning to Play Foosball: System and Baselines

Jul 23, 2024

Abstract: This work presents Foosball as a versatile platform for scientific research, particularly in robot learning. We introduce an automated Foosball table along with its simulated counterpart, and illustrate the range of challenges it poses through example tasks within the Foosball environment. Initial findings are reported using a simple baseline approach. Foosball constitutes a versatile learning environment with the potential to support research in various fields of artificial intelligence and machine learning, notably robust learning, while also extending to industrial robotics and automation setups. To transform our physical Foosball table into a research-friendly system, we augmented it with a 2-degree-of-freedom kinematic chain that controls the goalkeeper rod. This is an initial setup, intended to be extended to the full game as soon as possible. Our experiments reveal that a realistic simulation is essential for mastering complex robotic tasks, yet transferring these results to the real system remains challenging and is often accompanied by a decline in performance. This underlines the importance of research in this direction. In this regard, we highlight the automated Foosball table as a valuable tool with numerous desirable attributes, serving as a demanding learning environment for robotics and automation research.

HJB Optimal Feedback Control with Deep Differential Value Functions and Action Constraints

Oct 11, 2019

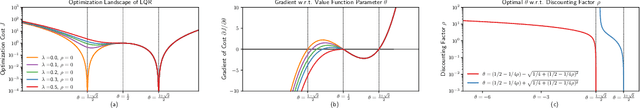

Abstract: Learning optimal feedback control laws capable of executing optimal trajectories is essential for many robotic applications. Such policies can be learned using reinforcement learning or planned using optimal control. While reinforcement learning is sample inefficient, optimal control only plans an optimal trajectory from a specific starting configuration. In this paper we propose deep optimal feedback control to learn an optimal feedback policy rather than a single trajectory. By exploiting the inherent structure of the robot dynamics and a strictly convex action cost, we derive principled cost functions such that the optimal policy naturally obeys the action limits and, given the optimal value function, is globally optimal and stable on the training domain. The corresponding optimal value function is learned end-to-end by embedding a deep differential network in the Hamilton-Jacobi-Bellman differential equation and minimizing the error of this equality, while simultaneously decreasing the discounting from short- to far-sighted to enable the learning. Our proposed approach learns an optimal feedback control law in continuous time that, in contrast to existing approaches, generates an optimal trajectory from any point in state space without the need for replanning. The resulting approach is evaluated on non-linear systems and achieves optimal feedback control where standard optimal control methods require frequent replanning.
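
As a rough illustration of the equation the abstract refers to, the discounted continuous-time Hamilton-Jacobi-Bellman equation can be written as below. This is a sketch under stated assumptions, not the paper's exact formulation: the symbols (state $x$, action $u$, discount rate $\rho$, dynamics $f(x,u) = a(x) + B(x)u$, state cost $q$, strictly convex action cost $g$ with convex conjugate $\tilde{g}$) are notation chosen here, and the control-affine form is an assumed reading of "the inherent structure of the robot dynamics".

```latex
% Assumed notation: control-affine dynamics \dot{x} = a(x) + B(x) u,
% running cost c(x,u) = q(x) + g(u) with g strictly convex, discount rate \rho.
\begin{align}
  \rho \, V^*(x)
    &= \min_{u} \Big[ q(x) + g(u)
       + \nabla_x V^*(x)^{\top} \big( a(x) + B(x)\, u \big) \Big] \\
  % Stationarity in u: \nabla g(u^*) = -B(x)^\top \nabla_x V^*(x)
  u^*(x)
    &= \nabla \tilde{g}\!\big( -B(x)^{\top} \nabla_x V^*(x) \big)
\end{align}
```

Under these assumptions the minimizing action has a closed form, so the residual of the first equation depends only on the value function and its gradient and can serve as a training loss. If $g$ is chosen so that $\nabla \tilde{g}$ has bounded range (for example a scaled $\tanh$), the resulting policy stays within the action limits by construction.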
