David Radke

Simulating Tracking Data to Advance Sports Analytics Research

Mar 25, 2025

Abstract: Advanced analytics have transformed how sports teams operate, particularly in episodic sports like baseball. Their impact on continuous invasion sports, such as soccer and ice hockey, has been limited due to increased game complexity and restricted access to high-resolution game tracking data. In this demo, we present a method to collect and utilize simulated soccer tracking data from the Google Research Football environment to support the development of models designed for continuous tracking data. The data is stored in a schema that is representative of real tracking data and we provide processes that extract high-level features and events. We include examples of established tracking data models to showcase the efficacy of the simulated data. We address the scarcity of publicly available tracking data, providing support for research at the intersection of artificial intelligence and sports analytics.

Towards a Better Understanding of Learning with Multiagent Teams

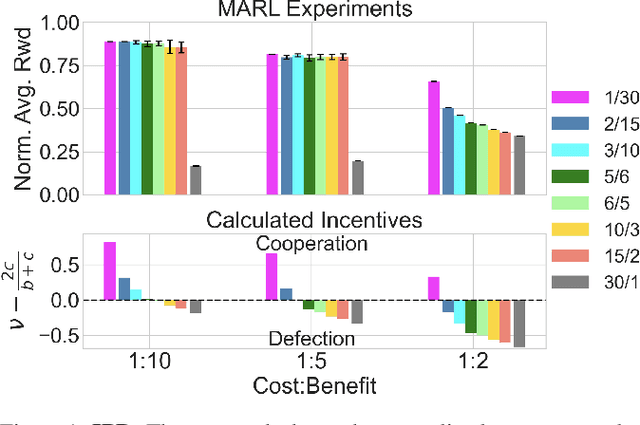

Jun 28, 2023

Abstract: While it has long been recognized that a team of individual learning agents can be greater than the sum of its parts, recent work has shown that larger teams are not necessarily more effective than smaller ones. In this paper, we study why and under which conditions certain team structures promote effective learning for a population of individual learning agents. We show that, depending on the environment, some team structures help agents learn to specialize into specific roles, resulting in more favorable global results. However, large teams create credit assignment challenges that reduce coordination, leading to large teams performing poorly compared to smaller ones. We support our conclusions with both theoretical analysis and empirical results.

Learning to Learn Group Alignment: A Self-Tuning Credo Framework with Multiagent Teams

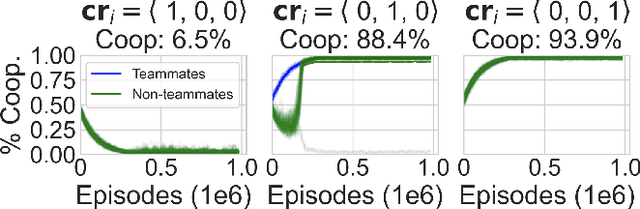

Apr 14, 2023

Abstract: Mixed incentives among a population with multiagent teams have been shown to have advantages over a fully cooperative system; however, discovering the best mixture of incentives or team structure is a difficult and dynamic problem. We propose a framework where individual learning agents self-regulate their configuration of incentives through various parts of their reward function. This work extends previous work by giving agents the ability to dynamically update their group alignment during learning and by allowing teammates to have different group alignments. Our model builds on ideas from hierarchical reinforcement learning and meta-learning to learn the configuration of a reward function that supports the development of a behavioral policy. We provide preliminary results in a commonly studied multiagent environment and find that agents can achieve better global outcomes by self-tuning their respective group alignment parameters.

Presenting Multiagent Challenges in Team Sports Analytics

Mar 23, 2023

Abstract: This paper draws correlations between several challenges and opportunities within the area of team sports analytics and key research areas within multiagent systems (MAS). We specifically consider invasion games, defined as sports, such as ice hockey, soccer, and basketball, where players invade the opposing team's territory and can interact anywhere on the playing surface. We argue that MAS is well-equipped to study invasion games and that doing so will benefit both the MAS and sports analytics fields. Our discussion highlights areas for MAS implementation and further development along two axes: short-term in-game strategy (coaching) and long-term team planning (management).

Exploring the Benefits of Teams in Multiagent Learning

May 04, 2022

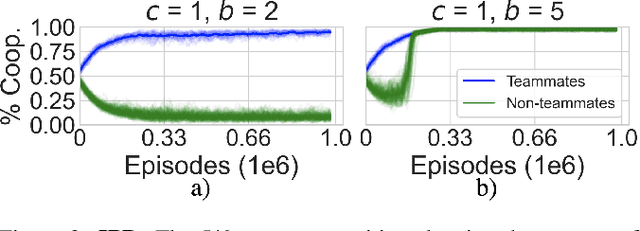

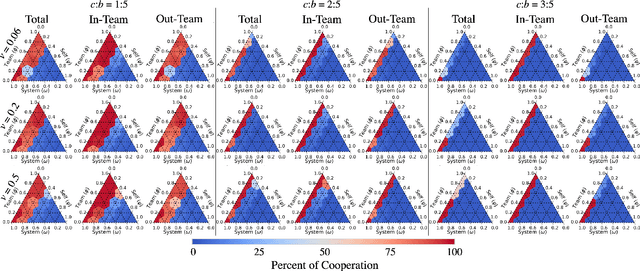

Abstract: For problems requiring cooperation, many multiagent systems implement solutions among either individual agents or across an entire population towards a common goal. Multiagent teams are primarily studied when in conflict; however, organizational psychology (OP) highlights the benefits of teams among human populations for learning how to coordinate and cooperate. In this paper, we propose a new model of multiagent teams for reinforcement learning (RL) agents inspired by OP and early work on teams in artificial intelligence. We validate our model using complex social dilemmas that are popular in recent multiagent RL and find that agents divided into teams develop cooperative pro-social policies despite incentives to not cooperate. Furthermore, agents are better able to coordinate and learn emergent roles within their teams and achieve higher rewards compared to when the interests of all agents are aligned.

The Importance of Credo in Multiagent Learning

Apr 15, 2022

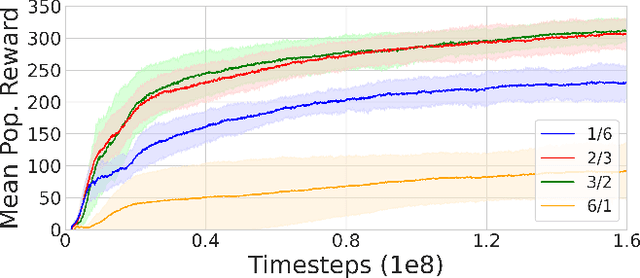

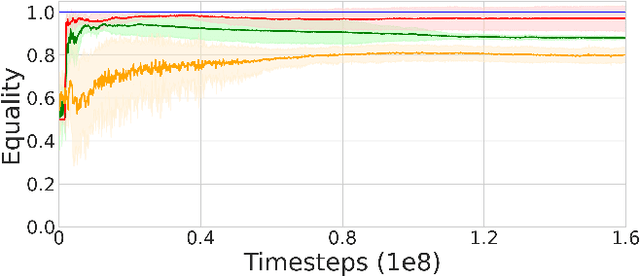

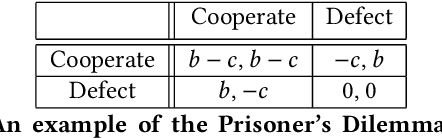

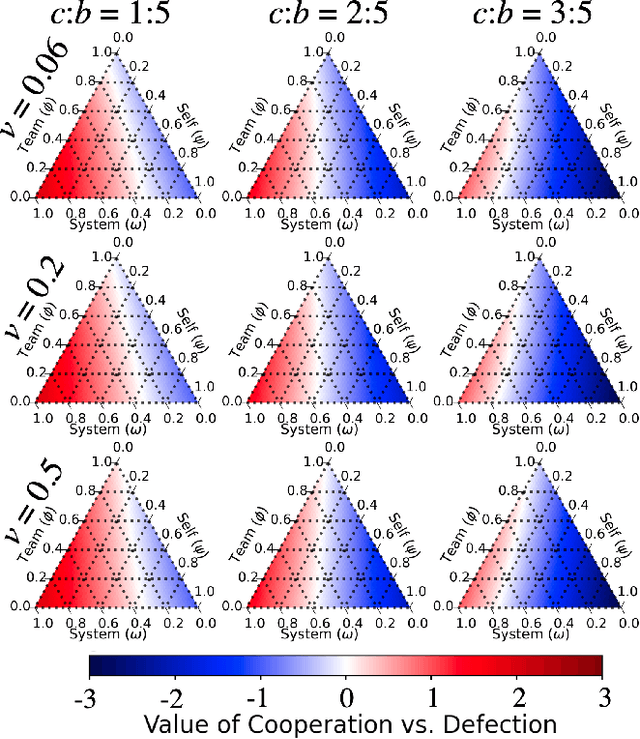

Abstract: We propose a model for multi-objective optimization, a credo, for agents in a system that are configured into multiple groups (i.e., teams). Our model of credo regulates how agents optimize their behavior for the component groups they belong to. We evaluate credo in the context of challenging social dilemmas with reinforcement learning agents. Our results indicate that the interests of teammates, or the entire system, are not required to be fully aligned for globally beneficial outcomes. We identify two scenarios without full common interest that achieve high equality and significantly higher mean population rewards compared to when the interests of all agents are aligned.
