David Pfaff

The Limitations of Model Uncertainty in Adversarial Settings

Dec 06, 2018

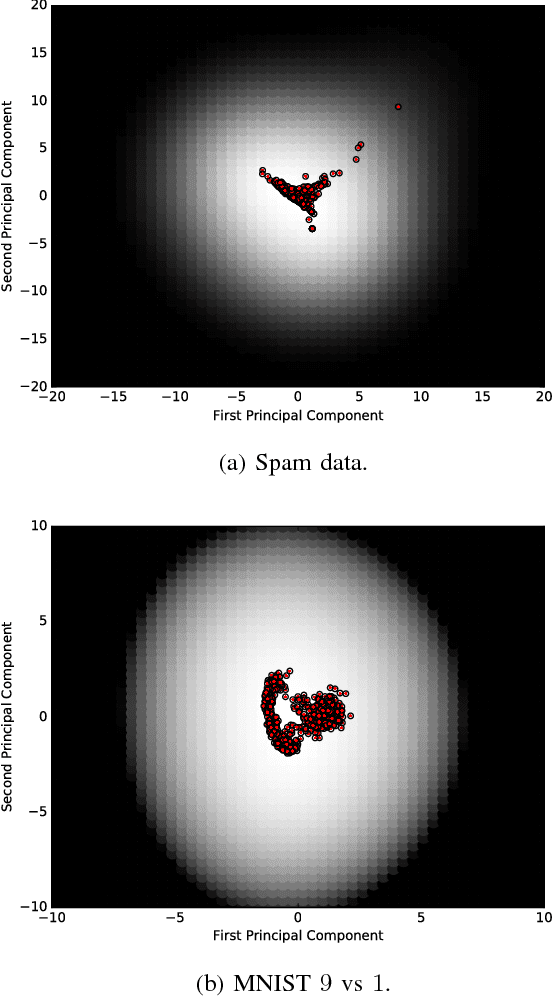

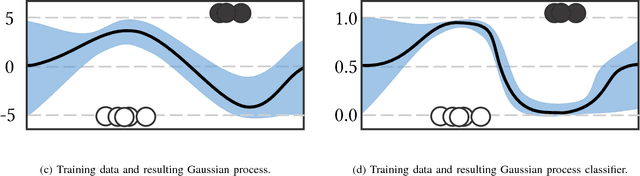

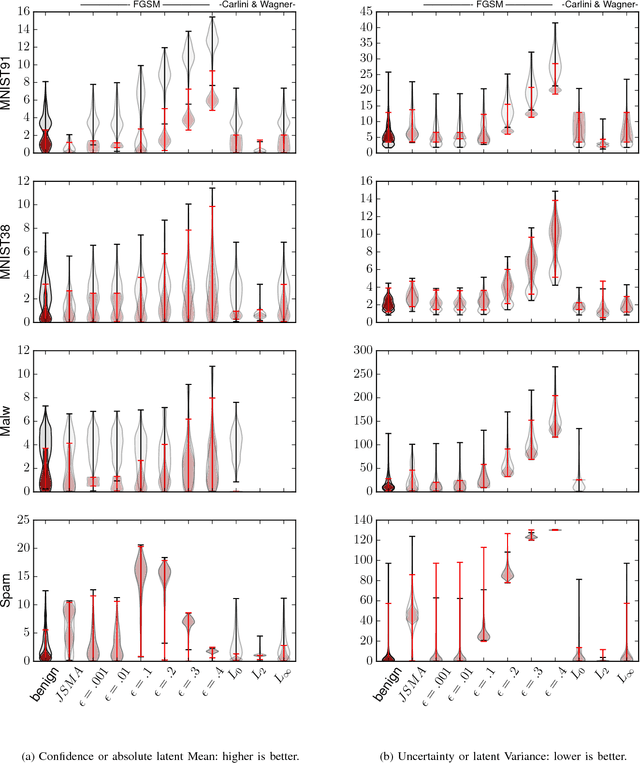

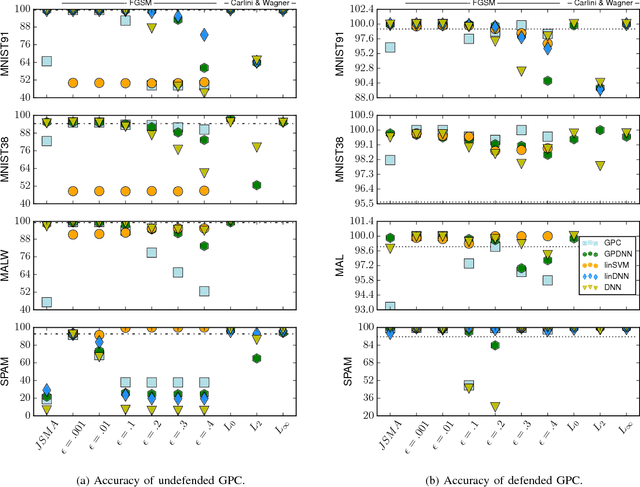

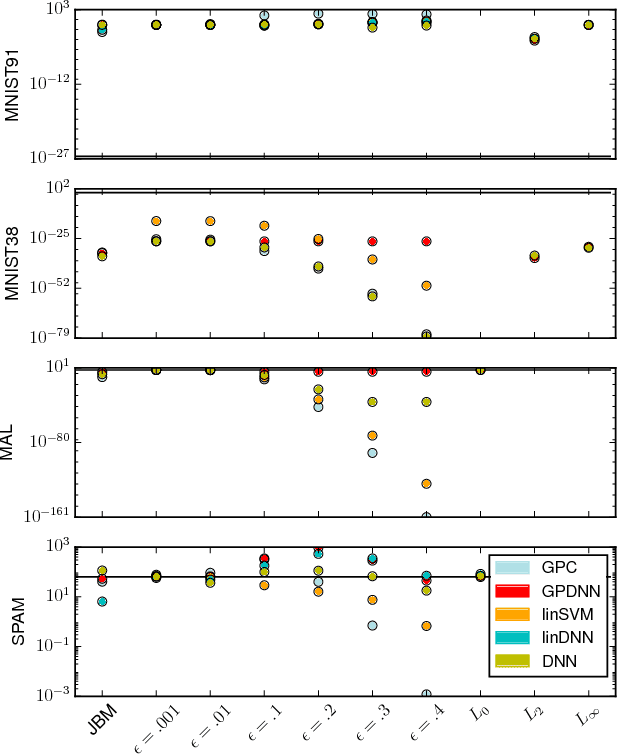

Abstract: Machine learning models are vulnerable to adversarial examples: minor perturbations to input samples intended to deliberately cause misclassification. Many defenses have led to an arms race; we thus study a promising, recent trend in this setting, Bayesian uncertainty measures. These measures allow a classifier to provide principled confidence and uncertainty for an input, where the latter refers to how usual the input is. We focus on Gaussian processes (GP), a classifier providing such principled uncertainty and confidence measures. Using correctly classified benign data as comparison, GP's intrinsic uncertainty and confidence deviate for misclassified benign samples and misclassified adversarial examples. We therefore introduce high-confidence-low-uncertainty (HCLU) adversarial examples: adversarial examples crafted to maximize GP confidence and minimize GP uncertainty. Visual inspection shows HCLU adversarial examples are malicious, and resemble the original rather than the target class. HCLU adversarial examples also transfer to other classifiers. We focus on transferability to other algorithms providing uncertainty measures, and find that a Bayesian neural network confidently misclassifies HCLU adversarial examples. We conclude that uncertainty and confidence, even in the Bayesian sense, can be circumvented by both white-box and black-box attackers.

How Wrong Am I? - Studying Adversarial Examples and their Impact on Uncertainty in Gaussian Process Machine Learning Models

Feb 16, 2018

Abstract: Machine learning models are vulnerable to adversarial examples: minor perturbations to input samples intended to deliberately cause misclassification. Current defenses against adversarial examples, especially for Deep Neural Networks (DNN), are primarily derived from empirical developments, and their security guarantees are often only justified retroactively. Many defenses therefore rely on hidden assumptions that are subsequently subverted by increasingly elaborate attacks. This is not surprising: deep learning notoriously lacks a comprehensive mathematical framework to provide meaningful guarantees. In this paper, we leverage Gaussian Processes to investigate adversarial examples in the framework of Bayesian inference. Across different models and datasets, we find deviating levels of uncertainty reflect the perturbation introduced to benign samples by state-of-the-art attacks, including novel white-box attacks on Gaussian Processes. Our experiments demonstrate that even unoptimized uncertainty thresholds already reject adversarial examples in many scenarios.
