David MacLeod

Hierarchical Quantized Autoencoders

Feb 19, 2020

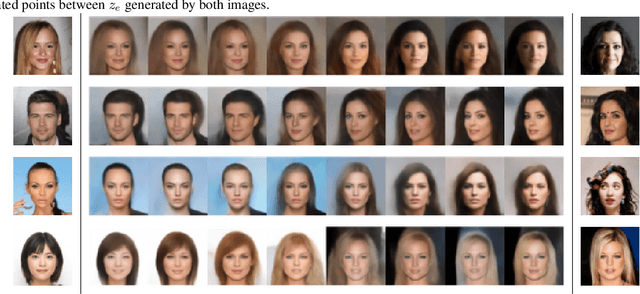

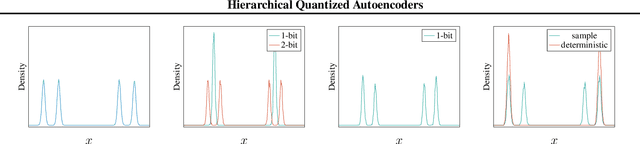

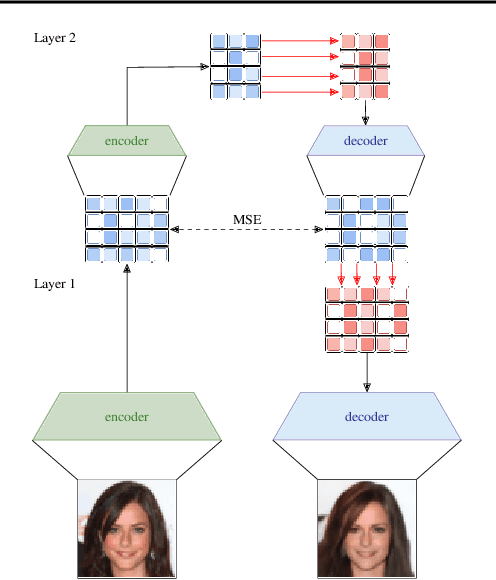

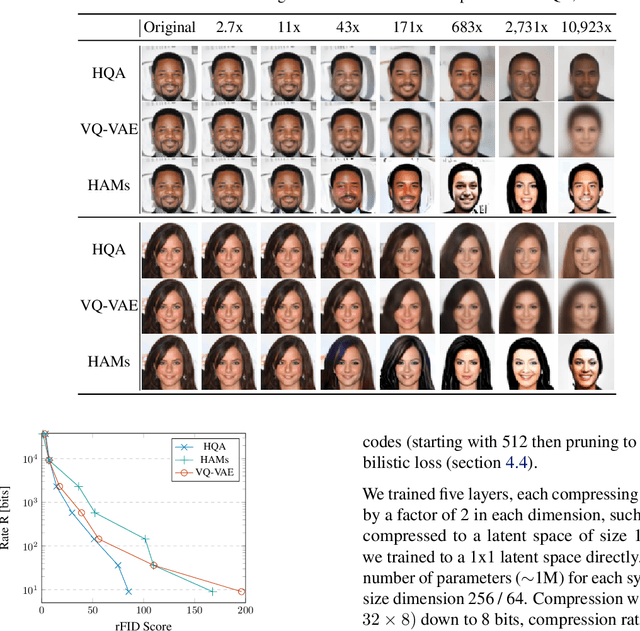

Abstract: Despite progress in training neural networks for lossy image compression, current approaches fail to maintain both perceptual quality and high-level features at very low bitrates. Encouraged by recent success in learning discrete representations with Vector Quantized Variational AutoEncoders (VQ-VAEs), we motivate the use of a hierarchy of VQ-VAEs to attain high factors of compression. We show that the combination of quantization and hierarchical latent structure aids likelihood-based image compression. This leads us to introduce a more probabilistic framing of the VQ-VAE, of which previous work is a limiting case. Our hierarchy produces a Markovian series of latent variables that reconstruct high-quality images which retain semantically meaningful features. These latents can then be further used to generate realistic samples. We provide qualitative and quantitative evaluations of reconstructions and samples on the CelebA and MNIST datasets.
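
The abstract does not spell out the probabilistic formulation, so the following is only an illustrative sketch of the general idea that a deterministic codebook lookup can be viewed as the limit of a stochastic assignment. It assumes a softmax over negative squared distances with a temperature parameter; the function names (`hard_quantize`, `soft_assignment`), the toy codebook, and the temperature values are hypothetical and are not taken from the paper.

```python
import math

def squared_distances(z, codebook):
    """Squared Euclidean distance from vector z to every codebook entry."""
    return [sum((zi - ei) ** 2 for zi, ei in zip(z, e)) for e in codebook]

def hard_quantize(z, codebook):
    """Deterministic nearest-neighbour lookup: index of the closest code."""
    d = squared_distances(z, codebook)
    return min(range(len(d)), key=d.__getitem__)

def soft_assignment(z, codebook, temperature):
    """Categorical distribution over codes (assumed form, for illustration):
    softmax of negative squared distances divided by a temperature."""
    d = squared_distances(z, codebook)
    logits = [-di / temperature for di in d]
    m = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - m) for l in logits]
    total = sum(weights)
    return [w / total for w in weights]

# Toy codebook with three 2-D codes and one encoder output (made-up values).
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
z = [0.9, 0.2]

index = hard_quantize(z, codebook)                      # nearest code
warm = soft_assignment(z, codebook, temperature=1.0)    # spread-out distribution
cold = soft_assignment(z, codebook, temperature=0.01)   # nearly one-hot
```

Under these assumptions, lowering the temperature concentrates the probability mass on the code that `hard_quantize` selects, which is one way to picture a deterministic quantizer as a limiting case of a stochastic one.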

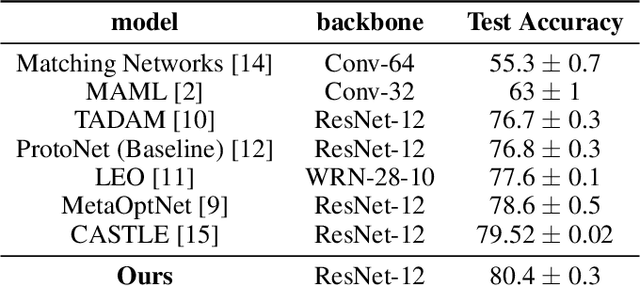

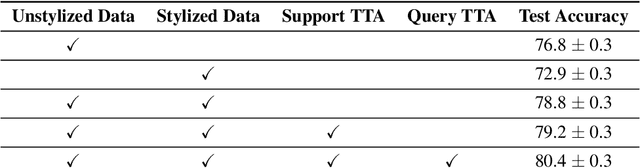

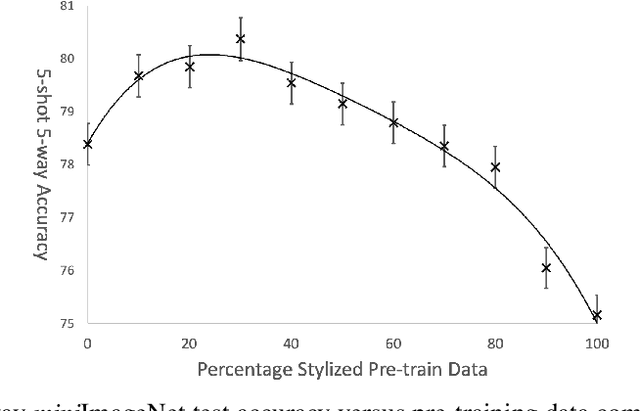

Texture Bias Of CNNs Limits Few-Shot Classification Performance

Oct 18, 2019

Abstract: Accurate image classification given small amounts of labelled data (few-shot classification) remains an open problem in computer vision. In this work we examine how the known texture bias of Convolutional Neural Networks (CNNs) affects few-shot classification performance. Although texture bias can help in standard image classification, we show that it significantly harms few-shot classification performance. After correcting this bias we demonstrate state-of-the-art performance on the competitive miniImageNet task using a method far simpler than the current best-performing few-shot learning approaches.
