David Gray Widder

Basic Research, Lethal Effects: Military AI Research Funding as Enlistment

Nov 26, 2024

Abstract: In the context of unprecedented U.S. Department of Defense (DoD) budgets, this paper examines the recent history of DoD funding for academic research in algorithmically based warfighting. We draw from a corpus of DoD grant solicitations from 2007 to 2023, focusing on those addressed to researchers in the field of artificial intelligence (AI). Considering the implications of DoD funding for academic research, the paper proceeds through three analytic sections. In the first, we offer a critical examination of the distinction between basic and applied research, showing how funding calls framed as basic research nonetheless enlist researchers in a war fighting agenda. In the second, we offer a diachronic analysis of the corpus, showing how a 'one small problem' caveat, in which affirmation of progress in military technologies is qualified by acknowledgement of outstanding problems, becomes justification for additional investments in research. We close with an analysis of DoD aspirations based on a subset of Defense Advanced Research Projects Agency (DARPA) grant solicitations for the use of AI in battlefield applications. Taken together, we argue that grant solicitations work as a vehicle for the mutual enlistment of DoD funding agencies and the academic AI research community in setting research agendas. The trope of basic research in this context offers shelter from significant moral questions that military applications of one's research would raise, by obscuring the connections that implicate researchers in U.S. militarism.

From Stem to Stern: Contestability Along AI Value Chains

Aug 02, 2024

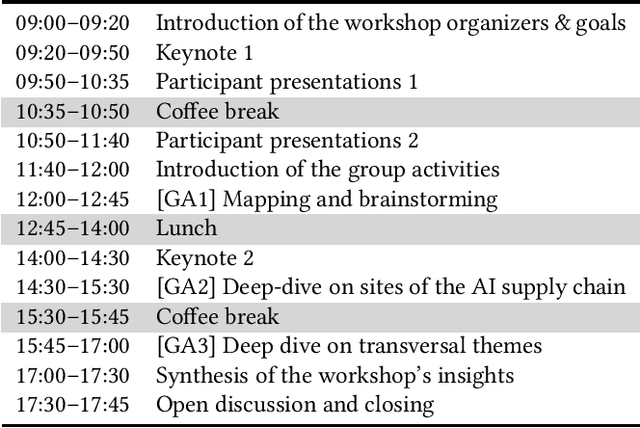

Abstract: This workshop will grow and consolidate a community of interdisciplinary CSCW researchers focusing on the topic of contestable AI. As an outcome of the workshop, we will synthesize the most pressing opportunities and challenges for contestability along AI value chains in the form of a research roadmap. This roadmap will help shape and inspire imminent work in this field. Considering the length and depth of AI value chains, it will especially spur discussions around the contestability of AI systems along various sites of such chains. The workshop will serve as a platform for dialogue and demonstrations of concrete, successful, and unsuccessful examples of AI systems that (could or should) have been contested, to identify requirements, obstacles, and opportunities for designing and deploying contestable AI in various contexts. This will be held primarily as an in-person workshop, with some hybrid accommodation. The day will consist of individual presentations and group activities to stimulate ideation and inspire broad reflections on the field of contestable AI. Our aim is to facilitate interdisciplinary dialogue by bringing together researchers, practitioners, and stakeholders to foster the design and deployment of contestable AI.

To Build Our Future, We Must Know Our Past: Contextualizing Paradigm Shifts in Natural Language Processing

Oct 11, 2023

Abstract: NLP is in a period of disruptive change that is impacting our methodologies, funding sources, and public perception. In this work, we seek to understand how to shape our future by better understanding our past. We study factors that shape NLP as a field, including culture, incentives, and infrastructure by conducting long-form interviews with 26 NLP researchers of varying seniority, research area, institution, and social identity. Our interviewees identify cyclical patterns in the field, as well as new shifts without historical parallel, including changes in benchmark culture and software infrastructure. We complement this discussion with quantitative analysis of citation, authorship, and language use in the ACL Anthology over time. We conclude by discussing shared visions, concerns, and hopes for the future of NLP. We hope that this study of our field's past and present can prompt informed discussion of our community's implicit norms and more deliberate action to consciously shape the future.

The Ethics of AI Value Chains: An Approach for Integrating and Expanding AI Ethics Research, Practice, and Governance

Jul 31, 2023

Abstract: Recent criticisms of AI ethics principles and practices have indicated a need for new approaches to AI ethics that can account for and intervene in the design, development, use, and governance of AI systems across multiple actors, contexts, and scales of activity. This paper positions AI value chains as an integrative concept that satisfies those needs, enabling AI ethics researchers, practitioners, and policymakers to take a more comprehensive view of the ethical and practical implications of AI systems. We review and synthesize theoretical perspectives on value chains from the literature on strategic management, service science, and economic geography. We then review perspectives on AI value chains from the academic, industry, and policy literature. We connect an inventory of ethical concerns in AI to the actors and resourcing activities involved in AI value chains to demonstrate that approaching AI ethics issues as value chain issues can enable more comprehensive and integrative research and governance practices. We illustrate this by suggesting five future directions for researchers, practitioners, and policymakers to investigate and intervene in the ethical concerns associated with AI value chains.

Gender and Robots: A Literature Review

Jun 09, 2022

Abstract: Here, I ask what we can learn about how gender affects how people engage with robots. I review 46 empirical studies of social robots, published 2018 or earlier, which report on the gender of their participants or the perceived or intended gender of the robot, or both, and perform some analysis with respect to either participant or robot gender. From these studies, I find that robots are by default perceived as male, that robots absorb human gender stereotypes, and that men tend to engage with robots more than women. I highlight open questions about how such gender effects may be different in younger participants, and whether one should seek to match the gender of the robot to the gender of the participant to ensure positive interaction outcomes. I conclude by suggesting that future research should: include gender diverse participant pools, include non-binary participants, rely on self-identification for discerning gender rather than researcher perception, control for known covariates of gender, test for different study outcomes with respect to gender, and test whether the robot used was perceived as gendered by participants. I include an appendix with a narrative summary of gender-relevant findings from each of the 46 papers to aid in future literature reviews.
