David Day Uei Li

Towards On-Device Learning and Reconfigurable Hardware Implementation for Encoded Single-Photon Signal Processing

Apr 12, 2025

Abstract: Deep neural networks (DNNs) enhance the accuracy and efficiency of reconstructing key parameters from time-resolved photon arrival signals recorded by single-photon detectors. However, the performance of conventional backpropagation-based DNNs is highly dependent on various parameters of the optical setup and biological samples under examination, necessitating frequent network retraining, either through transfer learning or from scratch. Newly collected data must also be stored and transferred to a high-performance GPU server for retraining, introducing latency and storage overhead. To address these challenges, we propose an online training algorithm based on a One-Sided Jacobi rotation-based Online Sequential Extreme Learning Machine (OSOS-ELM). We fully exploit parallelism in executing OSOS-ELM on a heterogeneous FPGA with integrated ARM cores. Extensive evaluations of OSOS-ELM and OSELM demonstrate that both achieve comparable accuracy across different network dimensions (i.e., input, hidden, and output layers), while OSOS-ELM proves to be more hardware-efficient. By leveraging the parallelism of OSOS-ELM, we implement a holistic computing prototype on a Xilinx ZCU104 FPGA, which integrates a multi-core CPU and programmable logic fabric. We validate our approach through three case studies involving single-photon signal analysis: sensing through fog using commercial single-photon LiDAR, fluorescence lifetime estimation in FLIM, and blood flow index reconstruction in DCS, all utilizing one-dimensional data encoded from photonic signals. From a hardware perspective, we optimize the OSOS-ELM workload by employing multi-tasked processing on ARM CPU cores and pipelined execution on the FPGA's logic fabric. We also implement our OSOS-ELM on the NVIDIA Jetson Xavier NX GPU to comprehensively investigate its computing performance on another type of heterogeneous computing platform.
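For readers unfamiliar with online sequential ELMs, the sketch below shows the textbook OS-ELM update, in which output weights are refined chunk by chunk with a recursive least-squares step instead of backpropagation. It is not the One-Sided Jacobi variant (OSOS-ELM) proposed in the paper. The class name `OSELM`, the `tanh` activation, the ridge initialisation, and the toy data are all assumptions made for illustration.

```python
import numpy as np

class OSELM:
    """Textbook OS-ELM sketch (illustrative; not the paper's OSOS-ELM)."""

    def __init__(self, n_in, n_hidden, n_out, rng, ridge=1e-3):
        # Hidden-layer weights are random and never trained.
        self.W = rng.standard_normal((n_in, n_hidden)) / np.sqrt(n_in)
        self.b = rng.standard_normal(n_hidden)
        # P tracks the inverse of (ridge * I + sum of H^T H over all chunks).
        self.P = np.eye(n_hidden) / ridge
        self.beta = np.zeros((n_hidden, n_out))

    def hidden(self, X):
        return np.tanh(X @ self.W + self.b)

    def partial_fit(self, X, Y):
        """Update the output weights with one new chunk of data."""
        H = self.hidden(X)
        PHt = self.P @ H.T
        # K = (I + H P H^T)^-1 H P, computed without an explicit inverse.
        K = np.linalg.solve(np.eye(len(X)) + H @ PHt, PHt.T)
        self.P -= PHt @ K
        self.beta += self.P @ H.T @ (Y - H @ self.beta)

# Toy data (hypothetical): 200 samples arriving in chunks of 25.
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 8))
Y = np.sin(X.sum(axis=1, keepdims=True))

model = OSELM(n_in=8, n_hidden=20, n_out=1, rng=rng)
for start in range(0, 200, 25):
    model.partial_fit(X[start:start + 25], Y[start:start + 25])

# Reference: ridge-regularised least squares on all data at once.
H_all = model.hidden(X)
beta_batch = np.linalg.solve(H_all.T @ H_all + 1e-3 * np.eye(20), H_all.T @ Y)
```

With this initialisation the sequentially updated `model.beta` should agree with `beta_batch` up to floating-point error, which is why earlier chunks do not need to be stored once they have been processed.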

Spiking Neural Network Enhanced Hand Gesture Recognition Using Low-Cost Single-photon Avalanche Diode Array

Feb 08, 2024

Abstract: We present a compact spiking convolutional neural network (SCNN) and a spiking multilayer perceptron (SMLP) to recognize ten different gestures in dark and bright light environments, using a $9.6 single-photon avalanche diode (SPAD) array. In our hand gesture recognition (HGR) system, photon intensity data was used to train and test the networks. A vanilla convolutional neural network (CNN) with the same network topology and training strategy was also implemented as a baseline for the SCNN. Our SCNN was trained from scratch instead of being converted from the CNN. We tested the three models in dark and ambient light (AL)-corrupted environments. The results indicate that the SCNN achieves accuracy (90.8%) comparable to the CNN (92.9%) and requires fewer floating-point operations with only 8 timesteps. The SMLP also presents a trade-off between computational workload and accuracy. The code and collected datasets of this work are available at https://github.com/zzy666666zzy/TinyLiDAR_NET_SNN.

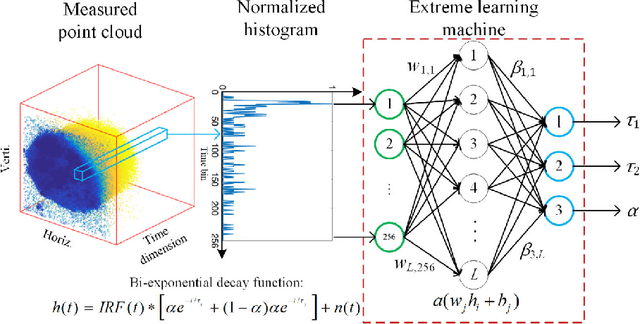

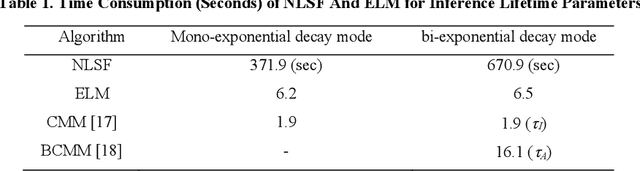

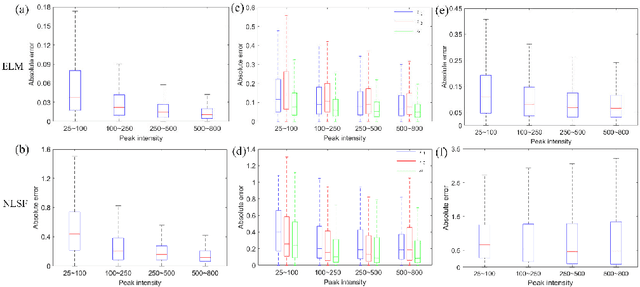

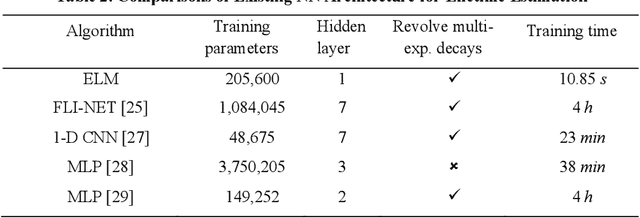

Fast fluorescence lifetime imaging analysis via extreme learning machine

Mar 25, 2022

Abstract: We present a fast and accurate analytical method for fluorescence lifetime imaging microscopy (FLIM) using the extreme learning machine (ELM). We used extensive metrics to evaluate ELM and existing algorithms. First, we compared these algorithms using synthetic datasets. Results indicate that ELM can obtain higher fidelity, even in low-photon conditions. Afterwards, we used ELM to retrieve lifetime components from human prostate cancer cells loaded with gold nanosensors, showing that ELM also outperforms the iterative fitting and non-fitting algorithms. Compared with a computationally efficient neural network, ELM achieves comparable accuracy with less training and inference time. As there is no back-propagation process for ELM during the training phase, the training speed is much higher than that of existing neural network approaches. The proposed strategy is promising for edge computing with online training.
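To illustrate why ELM training needs no back-propagation, here is a minimal sketch of a generic ELM regressor: the hidden layer is random and fixed, and only the output weights are solved in closed form. The noise-free mono-exponential decays, the lifetime range, the network size, and the function names `train_elm` and `predict_elm` are assumptions chosen for the example; they are not the authors' data or configuration.

```python
import numpy as np

def train_elm(X, Y, n_hidden, rng, ridge=1e-6):
    """Fit a generic ELM: random hidden layer, closed-form output weights."""
    n_in = X.shape[1]
    W = rng.standard_normal((n_in, n_hidden)) / np.sqrt(n_in)  # fixed, untrained
    b = rng.standard_normal(n_hidden)
    H = np.tanh(X @ W + b)
    # Ridge-regularised least squares replaces gradient-based training.
    beta = np.linalg.solve(H.T @ H + ridge * np.eye(n_hidden), H.T @ Y)
    return W, b, beta

def predict_elm(X, W, b, beta):
    return np.tanh(X @ W + b) @ beta

def make_decays(taus, t):
    """Noise-free mono-exponential decays, one histogram per lifetime."""
    return np.exp(-t[None, :] / taus[:, None])

rng = np.random.default_rng(0)
t = np.linspace(0.0, 10.0, 64)            # time bins (arbitrary units)
tau_train = rng.uniform(1.0, 4.0, 500)    # hypothetical lifetime range
tau_test = rng.uniform(1.0, 4.0, 100)

W, b, beta = train_elm(make_decays(tau_train, t), tau_train[:, None],
                       n_hidden=50, rng=rng)
tau_pred = predict_elm(make_decays(tau_test, t), W, b, beta)[:, 0]
mae = np.mean(np.abs(tau_pred - tau_test))  # mean absolute lifetime error
```

Training reduces to one linear solve, so its cost is dominated by forming and solving a 50-by-50 system here, with no iterative weight updates. Real FLIM histograms are photon-limited and convolved with an instrument response, which this toy example deliberately ignores.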
