Dave Coleman

Simultaneous Localization, Mapping, and Manipulation for Unsupervised Object Discovery

Nov 04, 2014

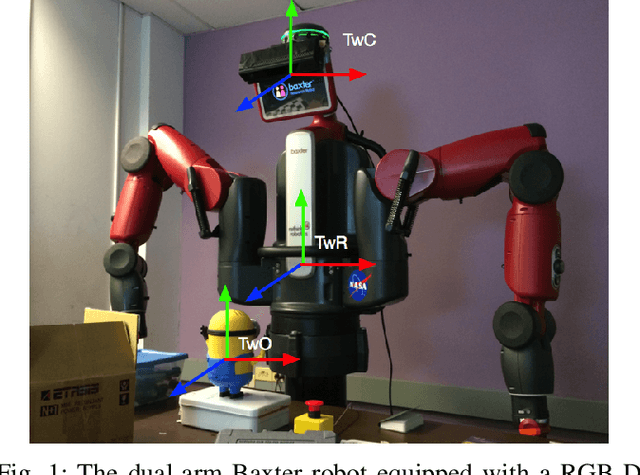

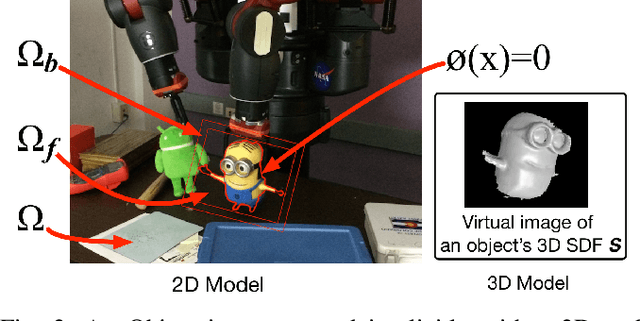

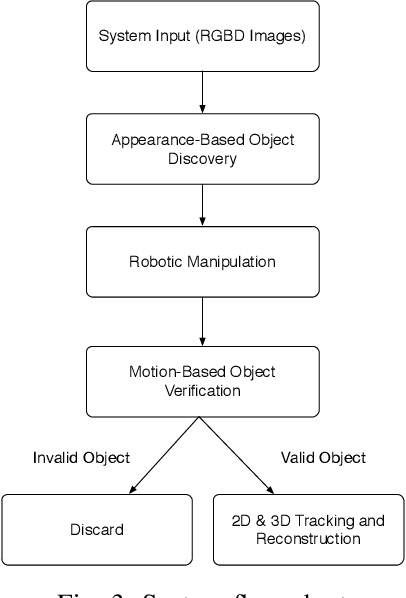

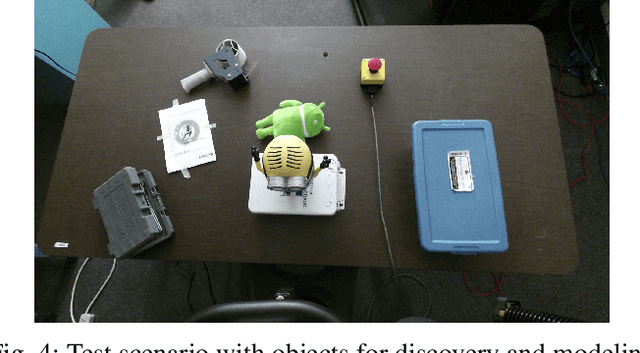

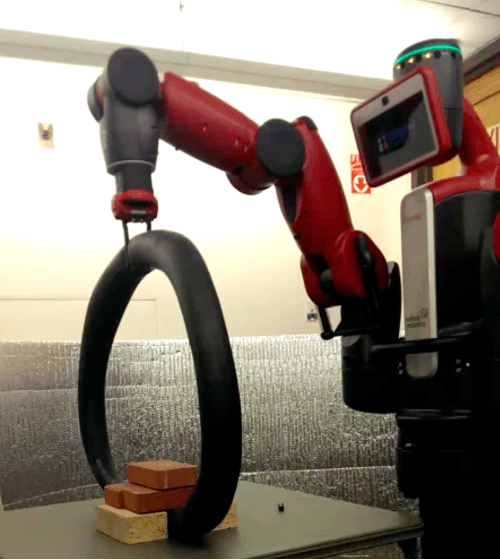

Abstract: We present an unsupervised framework for simultaneous appearance-based object discovery, detection, tracking and reconstruction using RGBD cameras and a robot manipulator. The system performs dense 3D simultaneous localization and mapping concurrently with unsupervised object discovery. Putative objects that are spatially and visually coherent are manipulated by the robot to gain additional motion cues. The robot uses appearance alone, followed by structure and motion cues, to jointly discover, verify, learn and improve models of objects. Induced motion segmentation reinforces learned models, which are represented implicitly as 2D and 3D level sets to capture both shape and appearance. We compare three different approaches for appearance-based object discovery and find that a novel form of spatio-temporal super-pixels gives the highest-quality candidate object models in terms of precision and recall. Live experiments with a Baxter robot demonstrate a holistic pipeline capable of automatic discovery, verification, detection, tracking and reconstruction of unknown objects.
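The abstract ranks candidate object models by precision and recall but does not say how the two are computed. As a minimal sketch, assuming the common pixel-wise definition over segmentation masks (the function name `precision_recall` and the toy masks are hypothetical, not taken from the paper):

```python
def precision_recall(predicted, ground_truth):
    """Pixel-wise precision and recall of a predicted object mask.

    Both arguments are sets of (row, col) pixel coordinates.
    Assumption: standard definitions, not necessarily the paper's protocol.
    """
    true_positives = len(predicted & ground_truth)
    # Precision: fraction of predicted pixels that belong to the object.
    precision = true_positives / len(predicted) if predicted else 0.0
    # Recall: fraction of object pixels that the prediction covers.
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


# Hypothetical 2x3 candidate mask against a 2x2 ground-truth mask.
candidate = {(r, c) for r in range(2) for c in range(3)}
truth = {(r, c) for r in range(2) for c in range(2)}
precision, recall = precision_recall(candidate, truth)
```

Here the candidate covers the whole object but spills over into two extra pixels, so recall is perfect while precision drops. An over-segmented candidate would show the opposite pattern.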

Optimal Parameter Identification for Discrete Mechanical Systems with Application to Flexible Object Manipulation

Feb 12, 2014

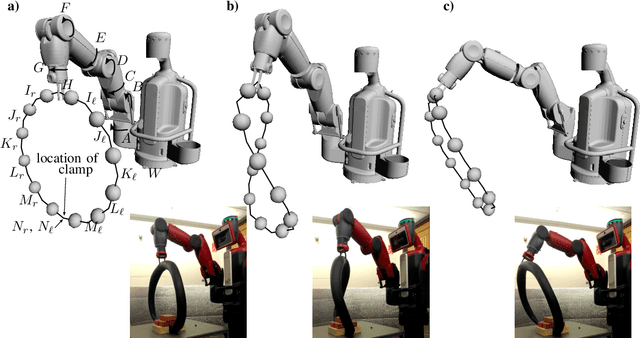

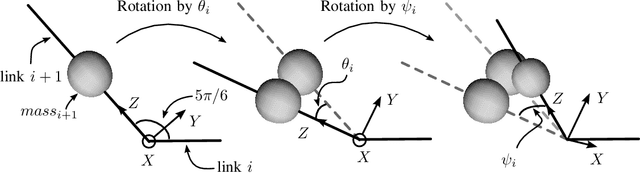

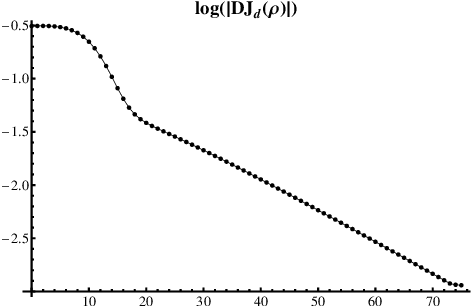

Abstract: We present a method for system identification of flexible objects by measuring forces and displacement during interaction with a manipulating arm. We model the object's structure and flexibility by a chain of rigid bodies connected by torsional springs. Unlike previous work, the proposed optimal control approach using variational integrators allows identification of closed loops, which include the robot arm itself. This allows the resulting models to be used for planning in the configuration space of the robot. In order to solve the resulting problem efficiently, we develop a novel method for fast discrete-time adjoint-based gradient calculation. The feasibility of the approach is demonstrated using full physics simulation in trep and using data recorded from a 7-DOF series elastic robot arm.
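To make the modeling idea concrete, the sketch below treats each joint of the chain as a linear torsional spring (torque = k · angle, an assumption) and recovers a stiffness from paired angle and torque readings. It is a plain static least-squares fit, swapped in for illustration; it is not the variational-integrator or adjoint-based method the abstract describes, and the function names and measurement values are hypothetical.

```python
def spring_energy(stiffness, angles):
    """Elastic energy 0.5 * sum(k_i * theta_i**2) stored in the chain's springs."""
    return 0.5 * sum(k * theta ** 2 for k, theta in zip(stiffness, angles))


def fit_stiffness(angles, torques):
    """Least-squares stiffness k for the model tau = k * theta.

    Minimizes sum((tau_i - k * theta_i)**2) over k in closed form.
    """
    denom = sum(theta ** 2 for theta in angles)
    if denom == 0.0:
        raise ValueError("need at least one nonzero deflection")
    return sum(theta * tau for theta, tau in zip(angles, torques)) / denom


# Made-up, noise-free measurements generated with k = 2.5 N*m/rad.
angles = [0.1, 0.2, 0.3]                      # joint deflections in rad
torques = [2.5 * theta for theta in angles]   # corresponding torques in N*m
k_hat = fit_stiffness(angles, torques)
energy = spring_energy([k_hat] * 3, angles)
```

With noise-free data the fit recovers the generating stiffness up to floating-point error. The dynamic, closed-loop setting in the abstract is harder because the parameters enter through the discrete equations of motion rather than a single static relation.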
