Simultaneous Localization, Mapping, and Manipulation for Unsupervised Object Discovery


Nov 04, 2014

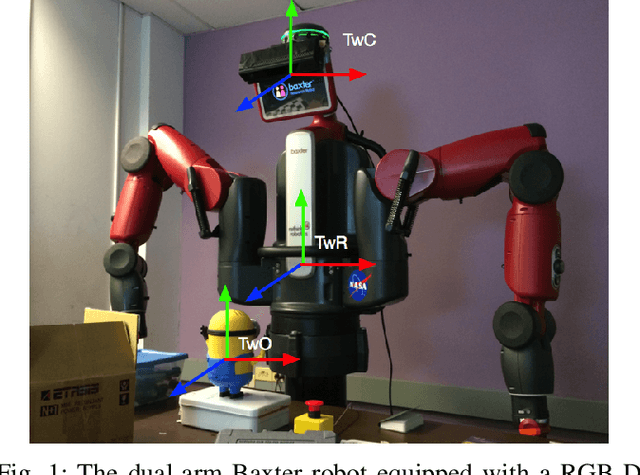

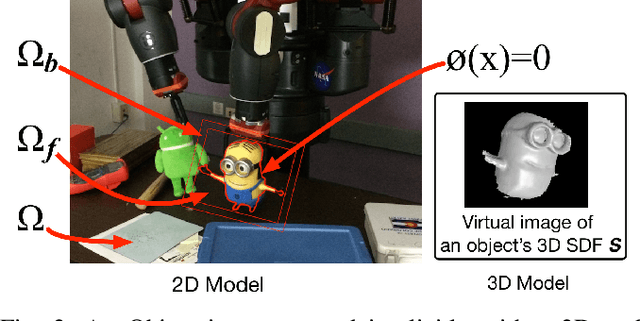

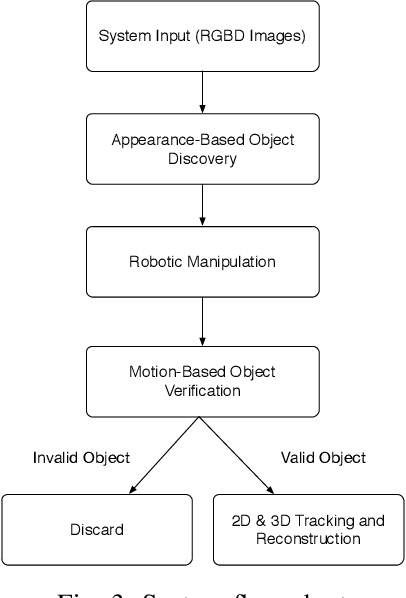

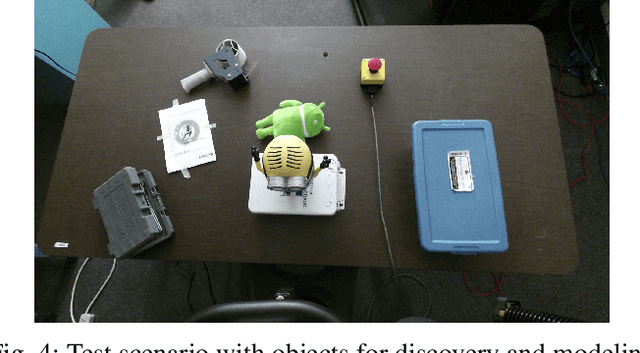

We present an unsupervised framework for simultaneous appearance-based object discovery, detection, tracking, and reconstruction using RGBD cameras and a robot manipulator. The system performs dense 3D simultaneous localization and mapping concurrently with unsupervised object discovery. Putative objects that are spatially and visually coherent are manipulated by the robot to gain additional motion cues. The robot uses appearance alone, followed by structure and motion cues, to jointly discover, verify, learn, and improve models of objects. Induced motion segmentation reinforces the learned models, which are represented implicitly as 2D and 3D level sets to capture both shape and appearance. We compare three different approaches for appearance-based object discovery and find that a novel form of spatio-temporal super-pixels gives the highest-quality candidate object models in terms of precision and recall. Live experiments with a Baxter robot demonstrate a holistic pipeline capable of automatic discovery, verification, detection, tracking, and reconstruction of unknown objects.
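The abstract ranks candidate object models by precision and recall but does not spell out the evaluation protocol. As a minimal sketch, assuming a pixel-wise comparison between a candidate segmentation mask and a hand-labeled ground-truth mask (the function name `mask_precision_recall` and the toy masks are hypothetical, not taken from the paper), the two metrics could be computed as follows:

```python
from typing import Sequence, Tuple

# A binary mask as rows of truthy/falsy pixel labels (assumed representation).
Mask = Sequence[Sequence[int]]


def mask_precision_recall(candidate: Mask, ground_truth: Mask) -> Tuple[float, float]:
    """Pixel-wise precision and recall of a candidate object mask.

    Assumption: both masks have the same shape, and a truthy value marks
    a pixel as belonging to the object.
    """
    tp = fp = fn = 0
    for cand_row, gt_row in zip(candidate, ground_truth):
        for c, g in zip(cand_row, gt_row):
            if c and g:
                tp += 1  # pixel correctly labeled as object
            elif c and not g:
                fp += 1  # candidate claims object, ground truth disagrees
            elif g and not c:
                fn += 1  # object pixel missed by the candidate
    # Define the metric as 0.0 when its denominator is empty.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Toy example: the candidate overlaps the true object on 2 of its 4 pixels.
candidate = [[1, 1, 0],
             [1, 1, 0],
             [0, 0, 0]]
ground_truth = [[0, 1, 1],
                [0, 1, 1],
                [0, 0, 0]]
precision, recall = mask_precision_recall(candidate, ground_truth)
```

In this toy case, both precision and recall are 0.5: half of the candidate's pixels lie on the object, and half of the object is covered. A discovery method that over-segments would tend to score high precision and low recall, and one that merges objects with background would show the opposite pattern.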
