Dario Konopatzki

The Tensor Brain: A Unified Theory of Perception, Memory and Semantic Decoding

Oct 06, 2021

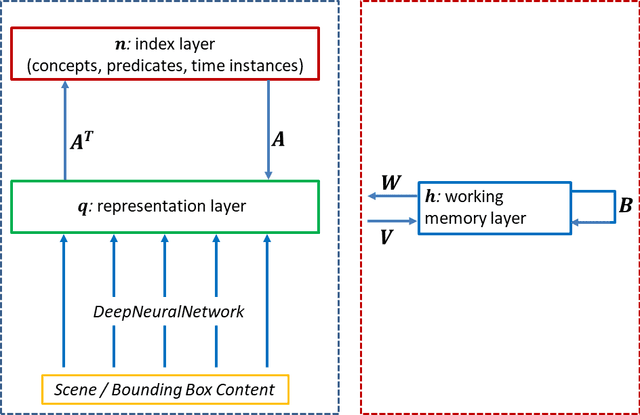

Abstract: We present a unified computational theory of perception and memory. In our model, perception, episodic memory, and semantic memory are realized by different functional and operational modes of the oscillating interactions between an index layer and a representation layer in a bilayer tensor network (BTN). The memoryless semantic representation layer broadcasts information. In cognitive neuroscience terms, it corresponds to the "mental canvas" or the "global workspace", and it reflects the cognitive brain state. The symbolic index layer represents concepts and past episodes, whose semantic embeddings are implemented in the connection weights between the two layers. In addition, we propose a working memory layer as a processing center and information buffer. Episodic and semantic memory realize memory-based reasoning, i.e., the recall of relevant past information to enrich perception, and are personalized to an agent's current state as well as to its unique memories. Episodic memory stores and retrieves past observations and provides provenance and context. Recent episodic memory enriches perception by retrieving perceptual experiences, which give the agent a sense of the here and now: to understand its own state, and the world's semantic state in general, the agent needs to know what happened recently, in recent scenes, and to recently perceived entities. Remote episodic memory retrieves relevant past experiences, contributes to our conscious self, and, together with semantic memory, to a large degree defines who we are as individuals.
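The interplay between the index layer and the representation layer can be illustrated with a small sketch. It is not the authors' implementation: the concept names, the embedding values, and the choice of a softmax over dot products for decoding are all assumptions made here for illustration. The only idea taken from the abstract is that each index's embedding lives in the connection weights between the two layers.

```python
import math

# Hypothetical connection weights: one row per index-layer unit (concept),
# one entry per representation-layer unit. All values are made up.
EMBEDDINGS = {
    "dog": [0.9, 0.1, 0.0],
    "cat": [0.8, 0.3, 0.1],
    "car": [0.0, 0.2, 0.9],
}


def activate_index(concept):
    """Index -> representation: firing an index writes its embedding."""
    return list(EMBEDDINGS[concept])


def decode(representation):
    """Representation -> index: softmax over dot products (an assumption)."""
    scores = {
        concept: sum(w * r for w, r in zip(weights, representation))
        for concept, weights in EMBEDDINGS.items()
    }
    top = max(scores.values())  # subtract the max for numerical stability
    exps = {concept: math.exp(s - top) for concept, s in scores.items()}
    total = sum(exps.values())
    return {concept: e / total for concept, e in exps.items()}


# One top-down / bottom-up cycle: activate a concept, then decode it again.
probs = decode(activate_index("car"))
best = max(probs, key=probs.get)
```

In this toy setting, decoding the pattern written by an index recovers that same index as the most probable one, which mirrors the back-and-forth between the two layers described above.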

The Tensor Brain: Semantic Decoding for Perception and Memory

Feb 10, 2020

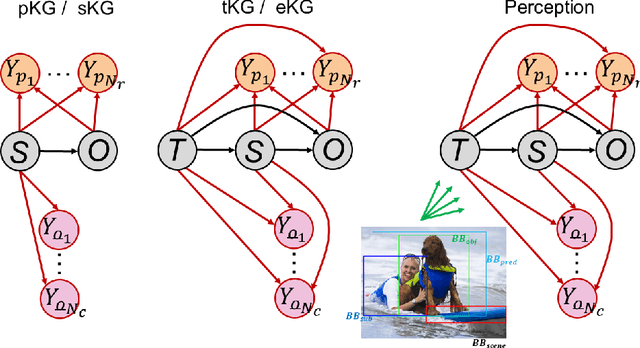

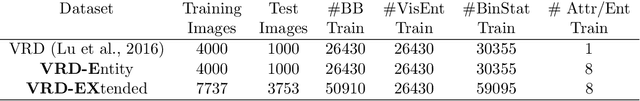

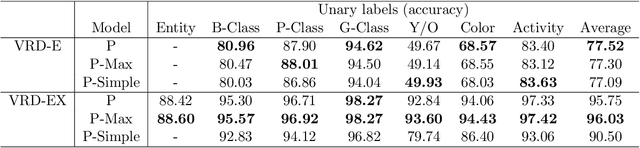

Abstract: We analyse perception and memory, using mathematical models for knowledge graphs and tensors, to gain insights into the corresponding functionalities of the human mind. Our discussion is based on the concept of propositional sentences consisting of subject-predicate-object (SPO) triples for expressing elementary facts. SPO sentences are the basis for most natural languages but might also be important for explicit perception and declarative memories, as well as for intra-brain communication and the ability to argue and reason. A set of SPO sentences can be described as a knowledge graph, which can be transformed into an adjacency tensor. We introduce tensor models in which concepts have dual representations as indices and associated embeddings, two constructs we believe are essential for understanding implicit and explicit perception and memory in the brain. We argue that a biological realization of perception and memory imposes constraints on information processing. In particular, we propose that explicit perception and declarative memories require a semantic decoder, which, in a simple realization, is based on four layers: first, a sensory memory layer as a buffer for sensory input; second, an index layer representing concepts; third, a memoryless representation layer for the broadcasting of information (the "blackboard" or "canvas" of the brain); and fourth, a working memory layer as a processing center and data buffer. We discuss the operations of the four layers and relate them to the global workspace theory. In a Bayesian brain interpretation, semantic memory defines the prior for observable triple statements. We propose that, in evolution and during development, semantic memory, episodic memory, and natural language evolved as emergent properties of agents' efforts to gain a deeper understanding of sensory information.
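The step from SPO triples to an adjacency tensor can be made concrete with a minimal sketch. The facts, entity names, and helper function below are hypothetical and chosen only for illustration. The tensor is indexed as subject × predicate × object, with an entry of 1 where the corresponding triple is in the knowledge graph and 0 otherwise.

```python
# Hypothetical toy facts; the entity and predicate names are illustrative only.
triples = [
    ("Sparky", "is_a", "Dog"),
    ("Sparky", "likes", "Jack"),
    ("Jack", "owns", "Sparky"),
]


def build_adjacency_tensor(spo_triples):
    """Return (tensor, entity_index, predicate_index) for a list of SPO triples."""
    entities = sorted({s for s, _, _ in spo_triples} | {o for _, _, o in spo_triples})
    predicates = sorted({p for _, p, _ in spo_triples})
    e_idx = {name: i for i, name in enumerate(entities)}
    p_idx = {name: i for i, name in enumerate(predicates)}

    n, m = len(entities), len(predicates)
    # tensor[s][p][o] == 1 exactly when (s, p, o) is a stated fact.
    tensor = [[[0] * n for _ in range(m)] for _ in range(n)]
    for s, p, o in spo_triples:
        tensor[e_idx[s]][p_idx[p]][e_idx[o]] = 1
    return tensor, e_idx, p_idx


tensor, e_idx, p_idx = build_adjacency_tensor(triples)

# Look up a fact by name: is ("Sparky", "likes", "Jack") in the graph?
known = tensor[e_idx["Sparky"]][p_idx["likes"]][e_idx["Jack"]] == 1
```

Each concept appears here only as an index into the tensor. The embeddings mentioned in the abstract would come from a factorization of such a tensor, which this sketch does not attempt.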
