Danyu Wang

Exemplar-free Class Incremental Learning via Discriminative and Comparable One-class Classifiers

Jan 05, 2022

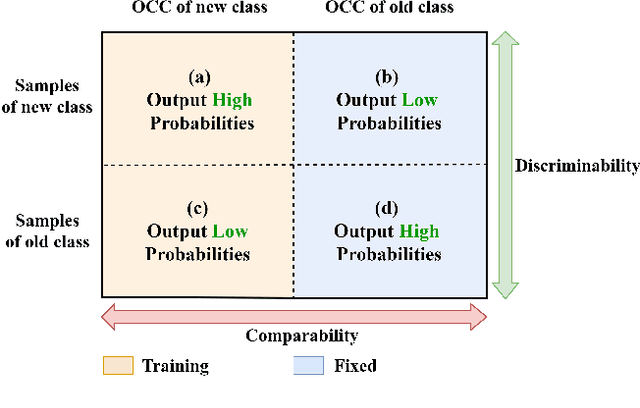

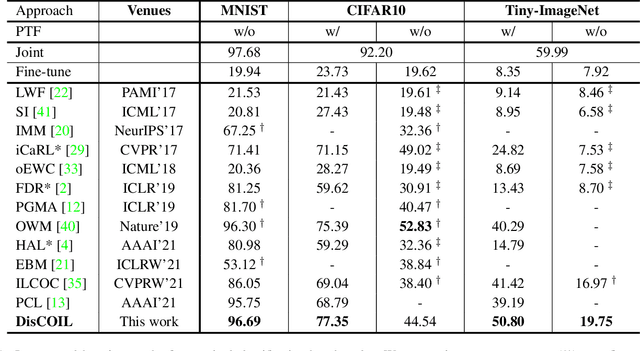

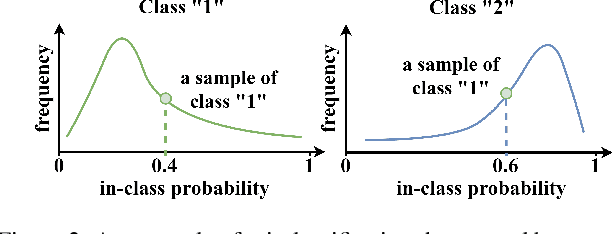

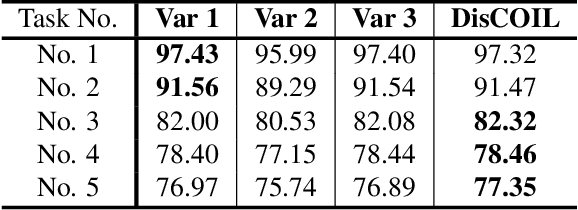

Abstract: Exemplar-free class incremental learning requires classification models to learn new classes incrementally without retaining any samples from old classes. Recently, the framework based on parallel one-class classifiers (POC), which trains a one-class classifier (OCC) independently for each category, has attracted extensive attention because it naturally avoids catastrophic forgetting. POC, however, suffers from weak discriminability and comparability because the OCCs are trained independently of one another. To address this, we propose a new framework named Discriminative and Comparable One-class classifiers for Incremental Learning (DisCOIL). DisCOIL follows the basic principle of POC, but it adopts variational auto-encoders (VAEs) instead of other well-established one-class classifiers (e.g., deep SVDD), because a trained VAE can not only estimate the probability that an input sample belongs to a class but also generate pseudo samples of that class to assist in learning new tasks. Using this advantage, DisCOIL trains each new-class VAE in contrast with the old-class VAEs, which forces the new-class VAE to reconstruct new-class samples better and old-class pseudo samples worse, thus enhancing comparability. Furthermore, DisCOIL introduces a hinge reconstruction loss to ensure discriminability. We evaluate our method extensively on MNIST, CIFAR10, and Tiny-ImageNet. The experimental results show that DisCOIL achieves state-of-the-art performance.
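To make the POC decision rule and the contrastive idea concrete, the following is a minimal sketch in plain Python, not the authors' implementation. It assumes each class is represented by a reconstruction function (a stand-in for a trained VAE) and that a sample is assigned to the class whose model reconstructs it with the lowest squared error. The exact form of the hinge reconstruction loss is not given in the abstract, so `hinge_contrastive_loss`, its `margin` parameter, and the squared-error score are illustrative assumptions.

```python
from typing import Callable, Dict, Sequence

Vector = Sequence[float]
Reconstructor = Callable[[Vector], Vector]  # hypothetical stand-in for a trained per-class VAE


def squared_error(x: Vector, x_hat: Vector) -> float:
    """Reconstruction error used here as the one-class score (assumed, not from the paper)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))


def poc_predict(x: Vector, reconstructors: Dict[str, Reconstructor]) -> str:
    """Assign x to the class whose model reconstructs it best (lowest error)."""
    return min(
        reconstructors,
        key=lambda label: squared_error(x, reconstructors[label](x)),
    )


def hinge_contrastive_loss(
    new_errors: Sequence[float],
    pseudo_errors: Sequence[float],
    margin: float = 1.0,
) -> float:
    """Assumed loss form: low error on new-class samples, and a hinge penalty
    whenever an old-class pseudo sample is reconstructed with error below `margin`."""
    positive = sum(new_errors) / len(new_errors)
    negative = sum(max(0.0, margin - e) for e in pseudo_errors) / len(pseudo_errors)
    return positive + negative


# Toy usage: two "models" that always reconstruct a fixed class prototype.
models = {
    "a": lambda x: [0.0, 0.0],
    "b": lambda x: [1.0, 1.0],
}
label = poc_predict([0.9, 1.1], models)
loss = hinge_contrastive_loss([0.1, 0.3], [0.5, 2.0], margin=1.0)
```

In this toy setup the hinge term is zero for any pseudo sample already reconstructed with error at or above the margin, so only old-class samples that the new model reconstructs too well contribute to the penalty.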
