Danyal Bhutto

Synthetic Low-Field MRI Super-Resolution Via Nested U-Net Architecture

Nov 28, 2022

Abstract: Low-field (LF) MRI scanners have the power to revolutionize medical imaging by providing a portable and cheaper alternative to high-field MRI scanners. However, such scanners usually produce significantly noisier and lower-quality images than their high-field counterparts. The aim of this paper is to improve the SNR and overall image quality of low-field MRI scans to improve diagnostic capability. To address this issue, we propose a Nested U-Net neural network architecture super-resolution algorithm that outperforms previously suggested deep learning methods with an average PSNR of 78.83 and SSIM of 0.9551. We tested our network on artificially noised and downsampled synthetic data from a major T1-weighted MRI image dataset called the T1-mix dataset. One board-certified radiologist scored 25 images on the Likert scale (1-5), assessing overall image quality, anatomical structure, and diagnostic confidence across our architecture and other published works (SR DenseNet, Generator Block, SRCNN, etc.). We also introduce a new type of loss function called natural log mean squared error (NLMSE). In conclusion, we present a more accurate deep learning method for single image super-resolution applied to synthetic low-field MRI via a Nested U-Net architecture.
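The abstract describes evaluating on noisy, downsampled synthetic data and reporting PSNR. The sketch below illustrates that general idea only; it is not the paper's pipeline. The function names `degrade_to_low_field` and `psnr`, the block-averaging downsampler, the Gaussian noise model, the noise level, and the `data_range` of 1.0 are all assumptions made for illustration.

```python
import numpy as np

def degrade_to_low_field(image, factor=2, noise_std=0.05, seed=0):
    """Hypothetical degradation: block-average downsample, then add Gaussian noise."""
    rng = np.random.default_rng(seed)
    h, w = image.shape
    # Crop so both dimensions divide evenly by the downsampling factor.
    h, w = h - h % factor, w - w % factor
    blocks = image[:h, :w].reshape(h // factor, factor, w // factor, factor)
    low_res = blocks.mean(axis=(1, 3))
    return low_res + rng.normal(0.0, noise_std, size=low_res.shape)

def psnr(reference, estimate, data_range=1.0):
    """Peak signal-to-noise ratio in dB for images with the given intensity range."""
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(data_range ** 2 / mse)

# Usage: degrade a random stand-in "high-field" image, then score a naive
# nearest-neighbour upsampling as a baseline that a learned model should beat.
high_res = np.random.default_rng(1).random((64, 64))
low_res = degrade_to_low_field(high_res, factor=2)
baseline = np.repeat(np.repeat(low_res, 2, axis=0), 2, axis=1)
baseline_psnr = psnr(high_res, baseline)
```

PSNR values depend on the assumed `data_range`, so scores are only comparable when computed under the same intensity scaling.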

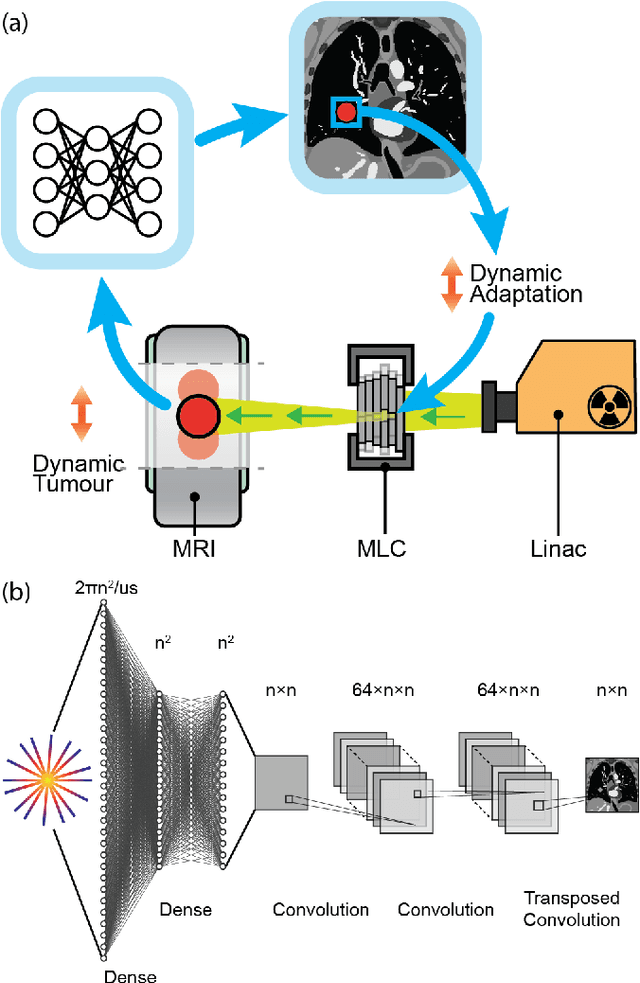

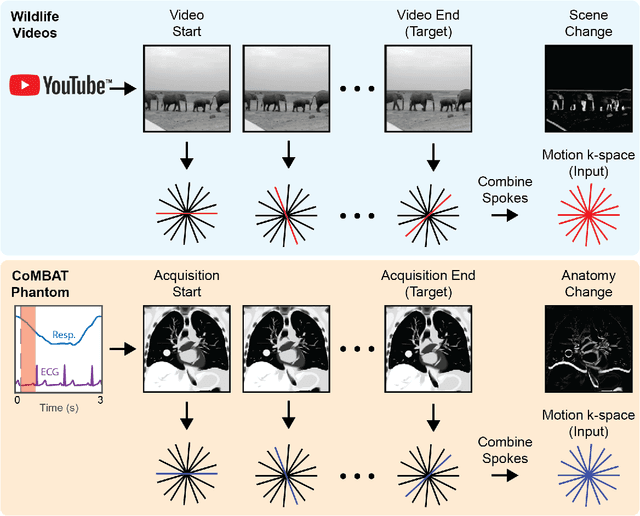

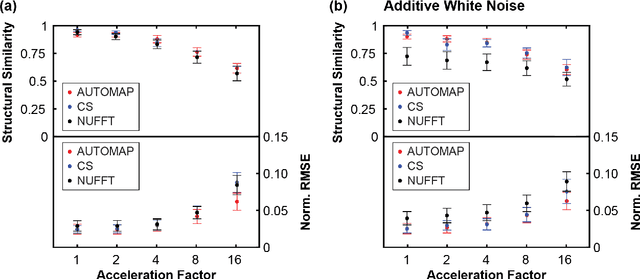

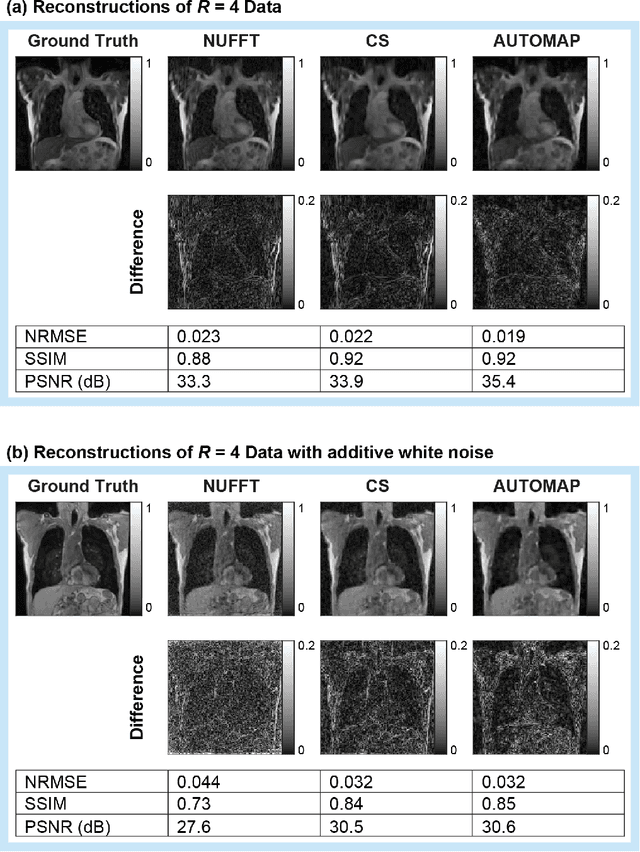

On Real-time Image Reconstruction with Neural Networks for MRI-guided Radiotherapy

Feb 10, 2022

Abstract: MRI-guidance techniques that dynamically adapt radiation beams to follow tumor motion in real time will lead to more accurate cancer treatments and reduced collateral healthy tissue damage. The gold standard for reconstruction of undersampled MR data is compressed sensing (CS), which is computationally slow and limits the rate at which images become available for real-time adaptation. Here, we demonstrate the use of automated transform by manifold approximation (AUTOMAP), a generalized framework that maps raw MR signal to the target image domain, to rapidly reconstruct images from undersampled radial k-space data. The AUTOMAP neural network was trained to reconstruct images from a golden-angle radial acquisition, a benchmark for motion-sensitive imaging, on lung cancer patient data and generic images from ImageNet. Model training was subsequently augmented with motion-encoded k-space data derived from videos in the YouTube-8M dataset to encourage motion-robust reconstruction. We find that AUTOMAP-reconstructed radial k-space has equivalent accuracy to CS but with much shorter processing times after initial fine-tuning on retrospectively acquired lung cancer patient data. Validation of motion-trained models with a virtual dynamic lung tumor phantom showed that the generalized motion properties learned from YouTube lead to improved target tracking accuracy. Our work shows that AUTOMAP can achieve real-time, accurate reconstruction of radial data. These findings imply that neural-network-based reconstruction is potentially superior to existing approaches for real-time image guidance applications.
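For readers unfamiliar with the golden-angle radial acquisition mentioned above, the following is a minimal sketch of how such a k-space trajectory is commonly generated: each successive spoke is rotated by roughly 111.25 degrees (180 degrees divided by the golden ratio). The function name `golden_angle_spokes`, the normalized k-space extent of ±0.5, and the sample counts are assumptions for illustration and are not taken from the paper.

```python
import numpy as np

# Golden angle for radial MRI: pi divided by the golden ratio (about 111.246 degrees).
GOLDEN_ANGLE = np.pi / ((1 + np.sqrt(5)) / 2)

def golden_angle_spokes(n_spokes, n_samples):
    """Return (kx, ky) arrays of shape (n_spokes, n_samples) in normalized k-space."""
    # Each spoke is rotated by one golden angle relative to the previous spoke.
    angles = np.arange(n_spokes) * GOLDEN_ANGLE
    # Sample positions along a spoke passing through the k-space centre.
    radii = np.linspace(-0.5, 0.5, n_samples, endpoint=False)
    kx = radii[None, :] * np.cos(angles)[:, None]
    ky = radii[None, :] * np.sin(angles)[:, None]
    return kx, ky

# Usage: a heavily undersampled trajectory with 13 spokes of 128 samples each.
kx, ky = golden_angle_spokes(n_spokes=13, n_samples=128)
```

Because consecutive spokes are spread nearly uniformly for any number of spokes, an arbitrary contiguous window of spokes can be reconstructed, which is one reason this ordering is popular for motion-sensitive imaging.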
