Daniele Gravina

Moment-to-moment Engagement Prediction through the Eyes of the Observer: PUBG Streaming on Twitch

Aug 17, 2020

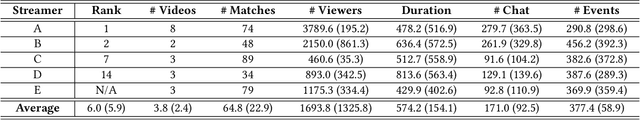

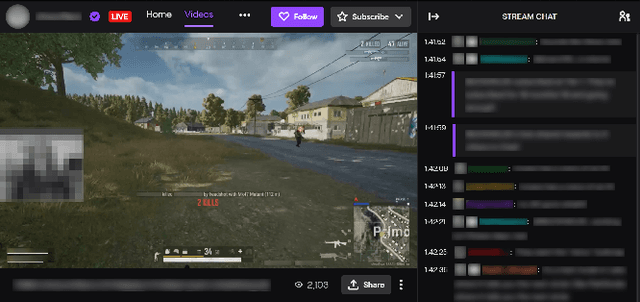

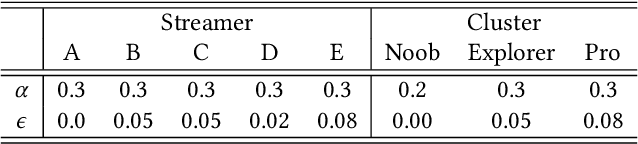

Abstract: Is it possible to predict moment-to-moment gameplay engagement based solely on game telemetry? Can we reveal engaging moments of gameplay by observing the way the viewers of the game behave? To address these questions in this paper, we reframe the way gameplay engagement is defined and we view it, instead, through the eyes of a game's live audience. We build prediction models for viewers' engagement based on data collected from the popular battle royale game PlayerUnknown's Battlegrounds as obtained from the Twitch streaming service. In particular, we collect viewers' chat logs and in-game telemetry data from several hundred matches of five popular streamers (containing over 100,000 game events) and machine learn the mapping between gameplay and viewer chat frequency during play, using small neural network architectures. Our key findings showcase that engagement models trained solely on 40 gameplay features can reach accuracies of up to 80% on average and 84% at best. Our models are scalable and generalisable as they perform equally well within- and across-streamers, as well as across streamer play styles.

Procedural Content Generation through Quality Diversity

Jul 09, 2019

Abstract: Quality-diversity (QD) algorithms search for a set of good solutions which cover a space as defined by behavior metrics. This simultaneous focus on quality and diversity with explicit metrics sets QD algorithms apart from standard single- and multi-objective evolutionary algorithms, as well as from diversity preservation approaches such as niching. These properties open up new avenues for artificial intelligence in games, in particular for procedural content generation. Creating multiple systematically varying solutions allows new approaches to creative human-AI interaction as well as adaptivity. In the last few years, a handful of applications of QD to procedural content generation and game playing have been proposed; we discuss these and propose challenges for future work.
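
The idea of keeping a set of good solutions that covers a behavior space can be illustrated with a grid archive in the style of MAP-Elites, one common QD design. The sketch below is not taken from the paper: the toy `quality` function, the one-dimensional behavior metric, the grid resolution `N_CELLS`, and the mutation settings are all assumptions chosen for illustration.

```python
import random

N_CELLS = 10  # resolution of the behavior grid (illustrative choice)


def quality(sol):
    # Toy fitness (assumed): higher is better, peaks when b == 0.5.
    _, b = sol
    return -(b - 0.5) ** 2


def behavior_cell(sol):
    # Toy behavior metric (assumed): the first coordinate, binned into N_CELLS.
    a, _ = sol
    return min(int(a * N_CELLS), N_CELLS - 1)


def mutate(sol, rng, sigma=0.1):
    # Gaussian perturbation, clamped to the unit square.
    return tuple(min(1.0, max(0.0, v + rng.gauss(0.0, sigma))) for v in sol)


def run_qd(iterations=2000, seed=0, n_random=50):
    rng = random.Random(seed)
    archive = {}  # cell index -> (quality, solution)
    for i in range(iterations):
        if archive and i >= n_random:
            # Pick a stored elite uniformly at random and vary it.
            parent = rng.choice(list(archive.values()))[1]
            child = mutate(parent, rng)
        else:
            # Bootstrap the archive with random solutions.
            child = (rng.random(), rng.random())
        cell, q = behavior_cell(child), quality(child)
        # Keep the child only if its cell is empty or it beats the current elite.
        if cell not in archive or q > archive[cell][0]:
            archive[cell] = (q, child)
    return archive


if __name__ == "__main__":
    for cell, (q, sol) in sorted(run_qd().items()):
        print(cell, round(q, 4), sol)
```

Each cell holds the best solution found for one region of behavior space, so the archive returns many systematically varying solutions rather than a single optimum.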

Quality Diversity Through Surprise

Oct 24, 2018

Abstract: Quality diversity is a recent family of evolutionary search algorithms which focus on finding several well-performing (quality) yet different (diversity) solutions with the aim to maintain an appropriate balance between divergence and convergence during search. While quality diversity has already delivered promising results in complex problems, the capacity of divergent search variants for quality diversity remains largely unexplored. Inspired by the notion of surprise as an effective driver of divergent search and its orthogonal nature to novelty, this paper investigates the impact of the former on quality diversity performance. For that purpose we introduce three new quality diversity algorithms which employ surprise as a diversity measure, either on its own or combined with novelty, and compare their performance against novelty search with local competition, the state-of-the-art quality diversity algorithm. The algorithms are tested in a robot navigation task across 60 highly deceptive mazes. Our findings suggest that allowing surprise and novelty to operate synergistically for divergence and in combination with local competition leads to quality diversity algorithms of significantly higher efficiency, speed and robustness.

Surprise Search for Evolutionary Divergence

Jun 08, 2017

Abstract: Inspired by the notion of surprise for unconventional discovery, we introduce a general search algorithm we name surprise search as a new method of evolutionary divergent search. Surprise search is grounded in the divergent search paradigm and is fabricated within the principles of evolutionary search. The algorithm mimics the self-surprise cognitive process and equips evolutionary search with the ability to seek solutions that deviate from the algorithm's expected behaviour. The predictive model of expected solutions is based on historical trails of where the search has been and local information about the search space. Surprise search is tested extensively in a robot maze navigation task: experiments are held in four authored deceptive mazes and in 60 generated mazes and compared against objective-based evolutionary search and novelty search. The key findings of this study reveal that surprise search is advantageous compared to the other two search processes. In particular, it outperforms objective search and it is as efficient as novelty search in all tasks examined. Most importantly, surprise search is faster, on average, and more robust in solving the navigation problem compared to any other algorithm examined. Finally, our analysis reveals that surprise search explores the behavioural space more extensively and yields higher population diversity compared to novelty search. What distinguishes surprise search from other forms of divergent search, such as the search for novelty, is its ability to diverge not from earlier and seen solutions but rather from predicted and unseen points in the domain considered.
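
The distinction between diverging from seen solutions and diverging from predicted ones can be made concrete with a small sketch. It is written under stated assumptions rather than as the paper's implementation: behaviours are 2D points, the "historical trail" is reduced to matched points from the two previous generations, prediction is a one-step linear extrapolation, and the names `predict_next`, `surprise_score`, and `n_nearest` are hypothetical.

```python
import math


def predict_next(prev2, prev1):
    """Extrapolate each tracked point one generation ahead (assumed linear model).

    prev2 and prev1 hold matched (x, y) points from generations t-2 and t-1.
    """
    return [
        (2 * x1 - x2, 2 * y1 - y2)
        for (x2, y2), (x1, y1) in zip(prev2, prev1)
    ]


def surprise_score(behavior, predictions, n_nearest=2):
    """Mean distance from a behaviour to its n_nearest predicted points.

    A larger value means the individual deviates more from what the
    predictive model expected, so it is rewarded more.
    """
    dists = sorted(math.dist(behavior, p) for p in predictions)
    nearest = dists[:n_nearest]
    return sum(nearest) / len(nearest)


if __name__ == "__main__":
    trail_t2 = [(0.0, 0.0), (4.0, 0.0)]
    trail_t1 = [(1.0, 0.0), (4.0, 1.0)]
    expected = predict_next(trail_t2, trail_t1)
    print(expected)
    print(surprise_score((2.0, 0.0), expected))
    print(surprise_score((0.0, 5.0), expected))
```

In this sketch, a novelty score would instead measure distance to points that were actually visited (`trail_t1`, `trail_t2`, or an archive), whereas the surprise score measures distance to the extrapolated points in `expected`.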
