Daniela Schkoda

Root Cause Analysis of Outliers in Unknown Cyclic Graphs

Oct 08, 2025

Abstract: We study the propagation of outliers in cyclic causal graphs with linear structural equations, tracing them back to one or several "root cause" nodes. We show that it is possible to identify a short list of potential root causes provided that the perturbation is sufficiently strong and propagates according to the same structural equations as in the normal mode. This shortlist consists of the true root causes together with those of their parents that lie on a cycle with them. Notably, our method does not require prior knowledge of the causal graph.
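To make the setting concrete, the following is a minimal sketch of how a strong perturbation at one node spreads through a cyclic linear model. It is not the paper's identification method; the three-node graph, the edge weights, and the helper name `equilibrium` are all hypothetical and chosen only for illustration.

```python
# Hypothetical 3-node cyclic linear model X = A X + N (weights are illustrative).
# A[i][j] is the assumed direct effect of node j on node i.
A = [
    [0.0, 0.5, 0.0],  # node 0 <- node 1
    [0.4, 0.0, 0.0],  # node 1 <- node 0 (nodes 0 and 1 form a cycle)
    [0.0, 0.8, 0.0],  # node 2 <- node 1
]


def equilibrium(A, noise, iterations=200):
    """Solve x = A x + noise by fixed-point iteration.

    This converges when the spectral radius of A is below 1,
    which holds for the illustrative weights above.
    """
    x = [0.0] * len(noise)
    for _ in range(iterations):
        x = [sum(a * xj for a, xj in zip(row, x)) + n
             for row, n in zip(A, noise)]
    return x


normal = equilibrium(A, [0.0, 0.0, 0.0])
perturbed = equilibrium(A, [10.0, 0.0, 0.0])  # strong shock at node 0 only
shift = [p - q for p, q in zip(perturbed, normal)]
print(shift)
```

In this toy example the shock at node 0 also shifts node 1, its parent on the cycle, and node 2 further downstream, so observed outlier magnitudes alone do not single out the root cause.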

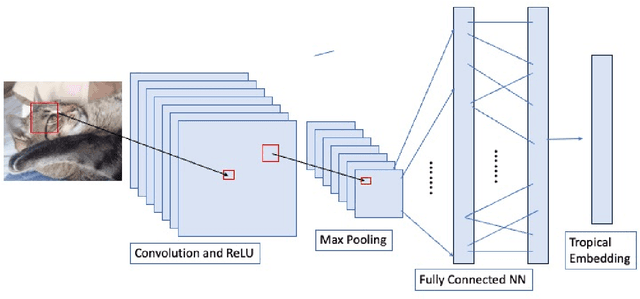

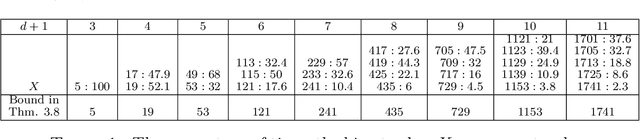

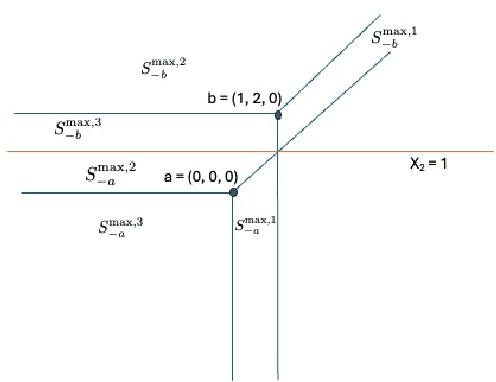

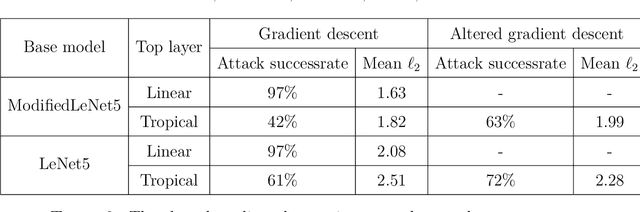

Tropical Bisectors and Carlini-Wagner Attacks

Mar 28, 2025

Abstract: Pasque et al. showed that using a tropical symmetric metric as an activation function in the last layer can improve the robustness of convolutional neural networks (CNNs) against state-of-the-art attacks, including the Carlini-Wagner attack. This improvement occurs when the attacks are not specifically adapted to the non-differentiability of the tropical layer. Moreover, they showed that the decision boundary of a tropical CNN is defined by tropical bisectors. In this paper, we explore the combinatorics of tropical bisectors and analyze how the tropical embedding layer enhances robustness against Carlini-Wagner attacks. We prove an upper bound on the number of linear segments the decision boundary of a tropical CNN can have. We then propose a refined version of the Carlini-Wagner attack, specifically tailored for the tropical architecture. Computational experiments with MNIST and LeNet5 showcase our attack's improved success rate.
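As a small illustration of the objects named above, the sketch below assumes the commonly used definition of the tropical symmetric metric, d(x, y) = max_i (x_i - y_i) - min_i (x_i - y_i), and treats a tropical bisector as the set of points equidistant from two sites. The sites, the test points, and the function names are hypothetical; the exact layer used in the paper may differ.

```python
def tropical_distance(x, y):
    """Tropical symmetric distance, assumed to be max(x - y) - min(x - y)."""
    diffs = [a - b for a, b in zip(x, y)]
    return max(diffs) - min(diffs)


def nearest_site(point, sites):
    """Index of the site closest to `point` in the tropical distance."""
    distances = [tropical_distance(point, s) for s in sites]
    return distances.index(min(distances))


# Two hypothetical class prototypes ("sites") in three coordinates.
sites = [(0, 0, 0), (0, 4, 2)]

# A point equidistant from both sites lies on their tropical bisector.
on_bisector = (0, 2, 1)
d0 = tropical_distance(on_bisector, sites[0])
d1 = tropical_distance(on_bisector, sites[1])
print(d0, d1)

# Points off the bisector are assigned to the nearer site.
print(nearest_site((0, 1, 0), sites))
```

Because the distance is a maximum minus a minimum of coordinate differences, it is piecewise linear and non-differentiable where the maximizing or minimizing coordinate changes, which is the property that gradient-based attacks need to account for.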

Cross-validating causal discovery via Leave-One-Variable-Out

Nov 08, 2024

Abstract: We propose a new approach to falsify causal discovery algorithms without ground truth, which is based on testing the causal model on a pair of variables that has been dropped when learning the causal model. To this end, we use the "Leave-One-Variable-Out (LOVO)" prediction where $Y$ is inferred from $X$ without any joint observations of $X$ and $Y$, given only training data from $X,Z_1,\dots,Z_k$ and from $Z_1,\dots,Z_k,Y$. We demonstrate that causal models on the two subsets, in the form of Acyclic Directed Mixed Graphs (ADMGs), often entail conclusions on the dependencies between $X$ and $Y$, enabling this type of prediction. The prediction error can then be estimated since the joint distribution $P(X, Y)$ is assumed to be available, and $X$ and $Y$ have only been omitted for the purpose of falsification. After presenting this graphical method, which is applicable to general causal discovery algorithms, we illustrate how to construct a LOVO predictor tailored towards algorithms relying on specific a priori assumptions, such as linear additive noise models. Simulations indicate that the LOVO prediction error is indeed correlated with the accuracy of the causal outputs, affirming the method's effectiveness.
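The idea of predicting the dependence between $X$ and $Y$ from two marginal datasets can be sketched in the simplest case of a single $Z$. The example assumes, hypothetically, that the models learned on $(X, Z)$ and $(Z, Y)$ together imply $X \perp Y \mid Z$ in a linear Gaussian setting, in which case the correlations multiply: $\rho_{XY} = \rho_{XZ}\,\rho_{ZY}$. The chain $X \to Z \to Y$, the coefficients, and the function names are illustrative and are not taken from the paper.

```python
import random


def correlation(a, b):
    """Pearson correlation of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / (var_a * var_b) ** 0.5


def sample_chain(n, rng):
    """Hypothetical ground truth X -> Z -> Y with linear Gaussian mechanisms."""
    xs, zs, ys = [], [], []
    for _ in range(n):
        x = rng.gauss(0, 1)
        z = 0.8 * x + rng.gauss(0, 1)
        y = 0.6 * z + rng.gauss(0, 1)
        xs.append(x)
        zs.append(z)
        ys.append(y)
    return xs, zs, ys


rng = random.Random(0)
x1, z1, _ = sample_chain(20000, rng)  # dataset 1: only (X, Z) is used
_, z2, y2 = sample_chain(20000, rng)  # dataset 2: only (Z, Y) is used

# LOVO-style prediction under the assumed conditional independence.
predicted = correlation(x1, z1) * correlation(z2, y2)

# Held-out joint sample of (X, Y), used only to evaluate the prediction.
x3, _, y3 = sample_chain(20000, rng)
observed = correlation(x3, y3)
print(predicted, observed)
```

If the assumed structure were wrong, for instance if $X$ also affected $Y$ directly, the predicted and observed correlations would generally disagree, which is the kind of discrepancy a falsification test can pick up.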
