Daniela Cuza

Deep ensembles in bioimage segmentation

Dec 24, 2021

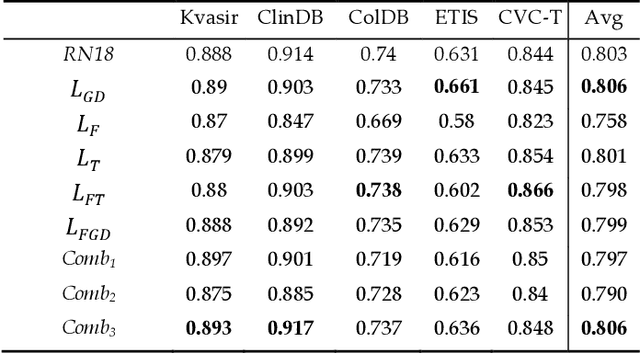

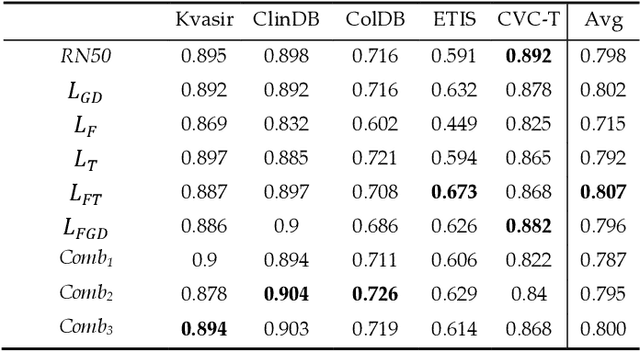

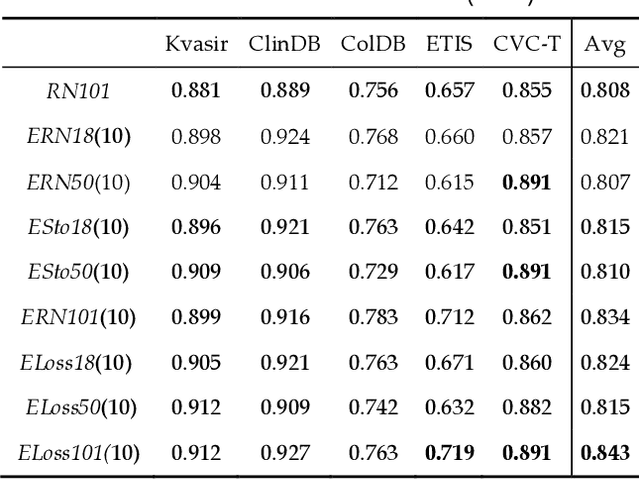

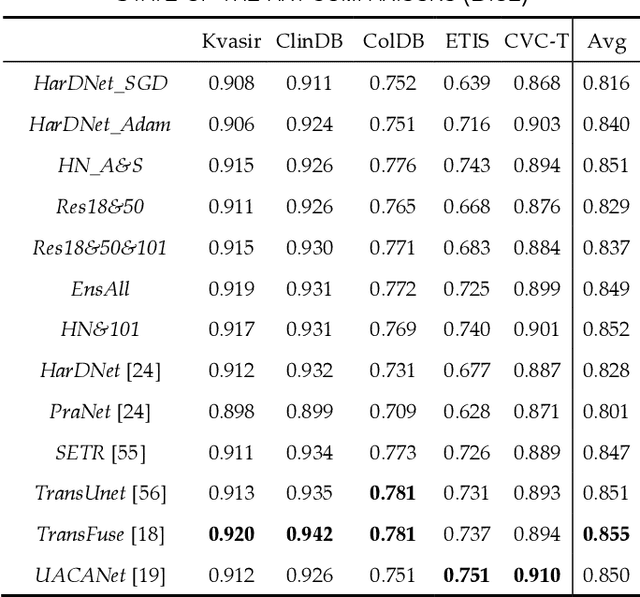

Abstract: Semantic segmentation consists of classifying each pixel of an image by assigning it a label chosen from a predefined set. Over the last few years, this task has attracted considerable attention. Many computer vision researchers have applied autoencoder structures to develop models that learn both the semantics of an image and a low-level representation of it. In an autoencoder architecture, an encoder computes a low-dimensional representation of the input, which a decoder then uses to reconstruct the original data. In this work, we propose an ensemble of convolutional neural networks (CNNs). In ensemble methods, many different models are trained and then used for classification; the ensemble aggregates the outputs of the individual classifiers. The approach leverages the differences among the classifiers to improve the performance of the whole system. Diversity among the individual classifiers is enforced by using different loss functions. In particular, we present a new loss function that combines Dice and the Structural Similarity Index (SSIM). The proposed ensemble is implemented by combining different backbone networks using the DeepLabV3+ and HarDNet frameworks. The proposal is assessed through an extensive empirical evaluation on two real-world scenarios: polyp and skin segmentation. All the code is available online at https://github.com/LorisNanni.
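The two ideas named in the abstract, a loss that mixes Dice with SSIM and an ensemble that aggregates per-model outputs, can be illustrated with a minimal NumPy sketch. Everything here is an assumption made for illustration: the abstract does not give the exact formula, so the weighted sum in `dice_ssim_loss`, the single-window (global) SSIM, the `weight` parameter, and the averaging rule in `ensemble_average` are hypothetical choices, not the authors' implementation.

```python
import numpy as np


def dice_loss(pred, target, eps=1e-7):
    """Soft Dice loss between a probability map and a binary mask."""
    pred = np.asarray(pred, dtype=np.float64).ravel()
    target = np.asarray(target, dtype=np.float64).ravel()
    intersection = np.sum(pred * target)
    return 1.0 - (2.0 * intersection + eps) / (np.sum(pred) + np.sum(target) + eps)


def global_ssim(x, y, data_range=1.0):
    """SSIM computed over the whole image as a single window.

    Assumption: a simplification of the usual sliding-window SSIM,
    used here only to keep the sketch short.
    """
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx**2 + my**2 + c1) * (vx + vy + c2))


def dice_ssim_loss(pred, target, weight=0.5):
    """Hypothetical combination: weighted sum of Dice loss and (1 - SSIM)."""
    return weight * dice_loss(pred, target) + (1.0 - weight) * (1.0 - global_ssim(pred, target))


def ensemble_average(prob_maps, threshold=0.5):
    """Average per-model probability maps, then threshold to a binary mask.

    Assumption: plain averaging; the abstract only says outputs are aggregated.
    """
    mean_prob = np.mean(np.stack(prob_maps, axis=0), axis=0)
    return mean_prob, (mean_prob >= threshold).astype(np.uint8)
```

In this sketch each ensemble member would be trained with a different loss (for example, `dice_loss` alone for one network and `dice_ssim_loss` for another), and `ensemble_average` would be applied to their per-pixel foreground probabilities at inference time.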
