Daniel Peterson

Self-Supervised Learning and Opportunistic Inference for Continuous Monitoring of Freezing of Gait in Parkinson's Disease

Oct 27, 2024

Abstract: Parkinson's disease (PD) is a progressive neurological disorder that significantly impacts quality of life, making in-home monitoring of motor symptoms such as Freezing of Gait (FoG) critical. However, existing symptom monitoring technologies are power-hungry, rely on large amounts of labeled data, and operate in controlled settings. These shortcomings limit real-world deployment of the technology. This work presents LIFT-PD, a computationally efficient self-supervised learning framework for real-time FoG detection. Our method combines self-supervised pre-training on unlabeled data with a novel differential hopping windowing technique to learn from limited labeled instances. An opportunistic model activation module further minimizes power consumption by activating the deep learning module only during active periods. Extensive experimental results show that LIFT-PD achieves a 7.25% increase in precision and a 4.4% improvement in accuracy compared to supervised models while using as little as 40% of the labeled training data used for supervised learning. Additionally, the model activation module reduces inference time by up to 67% compared to continuous inference. LIFT-PD paves the way for practical, energy-efficient, and unobtrusive in-home monitoring of PD patients with minimal labeling requirements.
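The opportunistic activation idea in the abstract can be illustrated with a minimal sketch. The abstract does not say how active periods are detected, so the variance threshold used here is an assumption, and the names `is_active` and `opportunistic_inference` are hypothetical rather than part of LIFT-PD.

```python
from statistics import pvariance
from typing import Callable, List, Optional, Sequence


def is_active(window: Sequence[float], threshold: float) -> bool:
    """Cheap activity check (assumed): population variance of the window."""
    return pvariance(window) > threshold


def opportunistic_inference(
    windows: Sequence[Sequence[float]],
    model: Callable[[Sequence[float]], int],
    threshold: float = 0.05,
) -> List[Optional[int]]:
    """Call the expensive model only on windows flagged as active.

    Skipped windows are reported as None so the output stays aligned
    with the input windows.
    """
    results: List[Optional[int]] = []
    for window in windows:
        if is_active(window, threshold):
            results.append(model(window))
        else:
            results.append(None)
    return results
```

With a flat window and an oscillating window as input, only the oscillating one reaches the model; the flat one is skipped, which is where the saving in inference time would come from.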

Private Cross-Silo Federated Learning for Extracting Vaccine Adverse Event Mentions

Mar 12, 2021

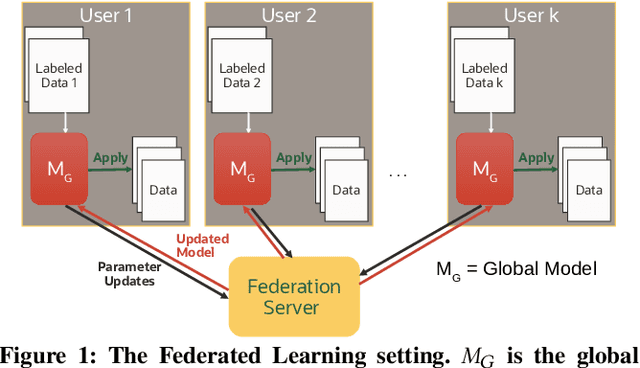

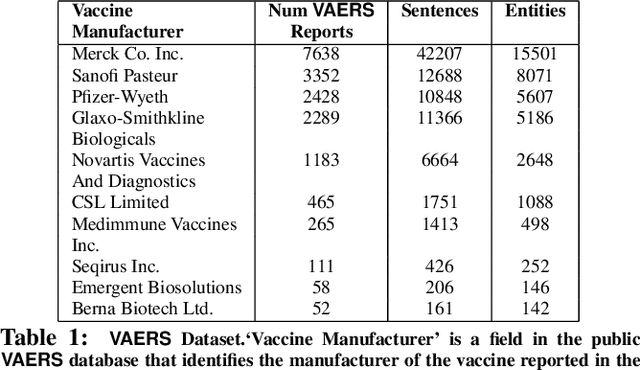

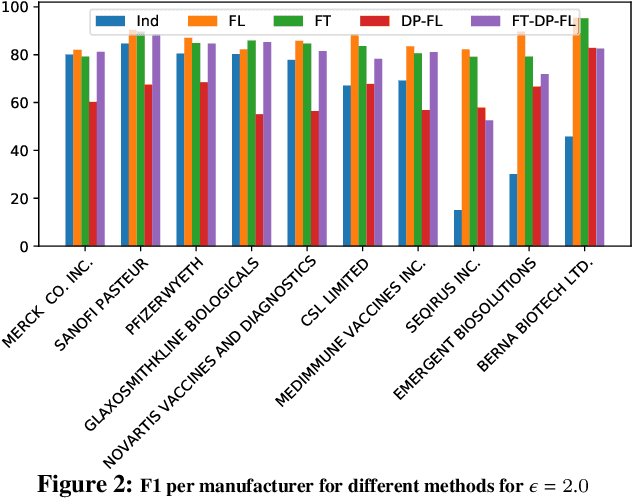

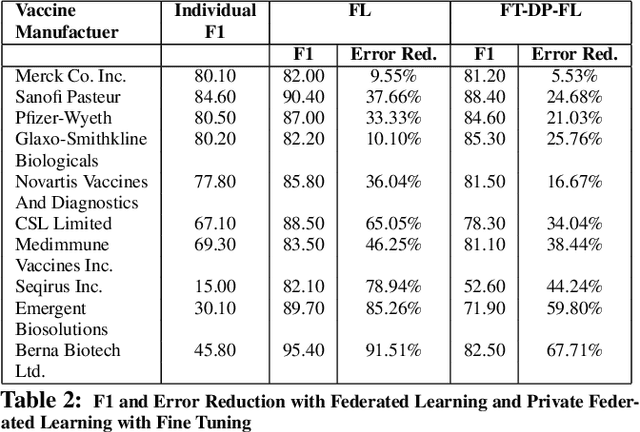

Abstract: Federated Learning (FL) is quickly becoming a go-to distributed training paradigm for users to jointly train a global model without physically sharing their data. Users can indirectly contribute to, and directly benefit from, a much larger aggregate data corpus used to train the global model. However, literature on successful application of FL in real-world problem settings is somewhat sparse. In this paper, we describe our experience applying an FL-based solution to the Named Entity Recognition (NER) task for an adverse event detection application in the context of mass-scale vaccination programs. We present a comprehensive empirical analysis of various dimensions of benefits gained with FL-based training. Furthermore, we investigate the effects of tighter Differential Privacy (DP) constraints in highly sensitive settings where federation users must enforce Local DP to ensure strict privacy guarantees. We show that local DP can severely cripple the global model's prediction accuracy, thus disincentivizing users from participating in the federation. In response, we demonstrate how recent innovations in personalization methods can help recover a significant part of the lost accuracy. We focus our analysis on the Federated Fine-Tuning algorithm, FedFT, and prove that it is not PAC Identifiable, thus making it even more attractive for FL-based training.
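To make the Local DP setting concrete, here is a minimal sketch of one common way a client can privatize a model update before sending it: clip its L2 norm, then add Gaussian noise. The abstract does not specify the mechanism used in the paper, so this is an assumption; `clip_l2`, `privatize_update`, and `noise_multiplier` are hypothetical names, and the sketch does not compute the resulting privacy budget.

```python
import math
import random
from typing import List, Optional, Sequence


def clip_l2(update: Sequence[float], max_norm: float) -> List[float]:
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    if norm == 0.0 or norm <= max_norm:
        return list(update)
    scale = max_norm / norm
    return [x * scale for x in update]


def privatize_update(
    update: Sequence[float],
    max_norm: float,
    noise_multiplier: float,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Clip on the client, then add Gaussian noise to every coordinate.

    The noise standard deviation is noise_multiplier * max_norm (assumed
    calibration); larger values mean stronger privacy and a noisier update.
    """
    rng = rng or random.Random()
    clipped = clip_l2(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]
```

Because every client adds noise before aggregation, the averaged global model inherits that noise, which is consistent with the accuracy loss the abstract reports under local DP.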

Private Federated Learning with Domain Adaptation

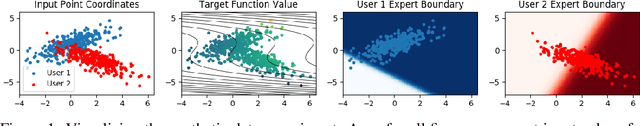

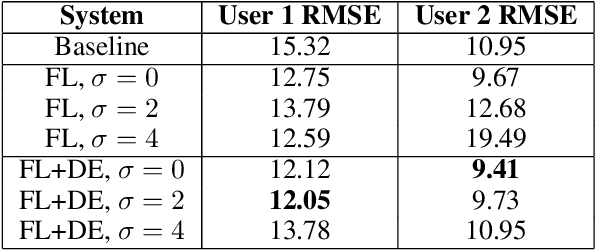

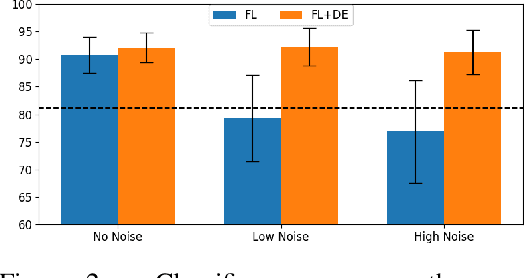

Dec 13, 2019

Abstract: Federated Learning (FL) is a distributed machine learning (ML) paradigm that enables multiple parties to jointly re-train a shared model without sharing their data with any other parties, offering advantages in both scale and privacy. We propose a framework to augment this collaborative model-building with per-user domain adaptation. We show that this technique improves model accuracy for all users, using both real and synthetic data, and that this improvement is much more pronounced when differential privacy bounds are imposed on the FL model.
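One simple way to picture per-user domain adaptation is to blend the shared FL model with a model trained only on the user's own data. The abstract does not describe the adaptation mechanism, so the fixed-weight blend below is an illustrative assumption and not necessarily the paper's method; `blend_predictions` and `alpha` are hypothetical names.

```python
from typing import Callable


def blend_predictions(
    general: Callable[[float], float],
    private: Callable[[float], float],
    alpha: float,
) -> Callable[[float], float]:
    """Return a predictor mixing a private and a general model.

    alpha = 0 uses only the shared (general) model; alpha = 1 uses only
    the user's private model. The weight is fixed here for simplicity.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")

    def predict(x: float) -> float:
        return alpha * private(x) + (1.0 - alpha) * general(x)

    return predict
```

In this picture, a user whose shared model has been degraded by differential privacy noise could lean more heavily on the private model by raising `alpha`.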
