Pallika Kanani

Subject Granular Differential Privacy in Federated Learning

Jun 07, 2022

Abstract: This paper introduces subject granular privacy in the Federated Learning (FL) setting, where a subject is an individual whose private information is embodied by several data items either confined within a single federation user or distributed across multiple federation users. We formally define the notion of subject level differential privacy for FL. We propose three new algorithms that enforce subject level DP. Two of these algorithms are based on notions of user level local differential privacy (LDP) and group differential privacy respectively. The third algorithm is based on a novel idea of hierarchical gradient averaging (HiGradAvgDP) for subjects participating in a training mini-batch. We also introduce horizontal composition of privacy loss for a subject across multiple federation users. We show that horizontal composition is equivalent to sequential composition in the worst case. We prove the subject level DP guarantee for all our algorithms and empirically analyze them using the FEMNIST and Shakespeare datasets. Our evaluation shows that, of our three algorithms, HiGradAvgDP delivers the best model performance, approaching that of a model trained using a DP-SGD based algorithm that provides a weaker item level privacy guarantee.
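The abstract names hierarchical gradient averaging but does not spell out its steps. The following is a minimal sketch of one plausible reading, not the authors' HiGradAvgDP algorithm: per-item gradients are clipped, averaged within each subject, averaged across the subjects in the mini-batch, and then perturbed with Gaussian noise. The function name, its parameters, and the noise scale (`noise_multiplier * clip_norm / num_subjects`) are all assumptions made for illustration, and no privacy guarantee is claimed for this sketch.

```python
import numpy as np


def hierarchical_gradient_average(per_item_grads, subject_ids, clip_norm,
                                  noise_multiplier, rng):
    """Illustrative only: clip per item, average per subject, average across
    subjects, then add Gaussian noise. Not the paper's reference algorithm."""
    per_item_grads = np.asarray(per_item_grads, dtype=float)
    subject_ids = np.asarray(subject_ids)

    # Step 1: clip each item's gradient so its L2 norm is at most clip_norm.
    norms = np.linalg.norm(per_item_grads, axis=1, keepdims=True)
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = per_item_grads * scale

    # Step 2: average within each subject, so a subject's contribution stays
    # bounded by clip_norm no matter how many items the subject has.
    subjects = np.unique(subject_ids)
    subject_means = np.stack(
        [clipped[subject_ids == s].mean(axis=0) for s in subjects]
    )

    # Step 3: average the per-subject means across the mini-batch.
    batch_mean = subject_means.mean(axis=0)

    # Step 4: add Gaussian noise (the noise scale here is an assumption).
    sigma = noise_multiplier * clip_norm / len(subjects)
    return batch_mean + rng.normal(0.0, sigma, size=batch_mean.shape)


# Toy mini-batch: subject "a" owns two items, subject "b" owns one.
grads = [[3.0, 4.0], [0.0, 0.0], [1.0, 0.0]]
owners = ["a", "a", "b"]
noisy = hierarchical_gradient_average(
    grads, owners, clip_norm=1.0, noise_multiplier=1.0,
    rng=np.random.default_rng(0),
)
```

Averaging within a subject before averaging across subjects is what keeps a subject with many items from dominating the update; flat per-item averaging would weight subject "a" twice as heavily as subject "b" in the toy batch above.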

Subject Membership Inference Attacks in Federated Learning

Jun 07, 2022

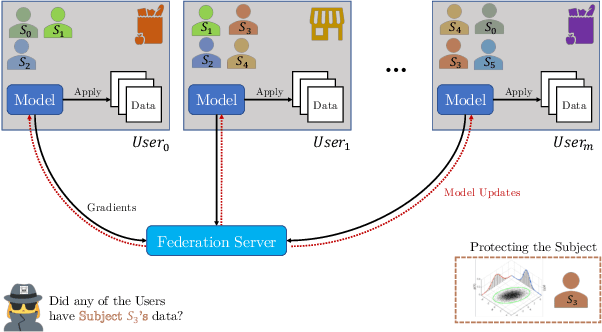

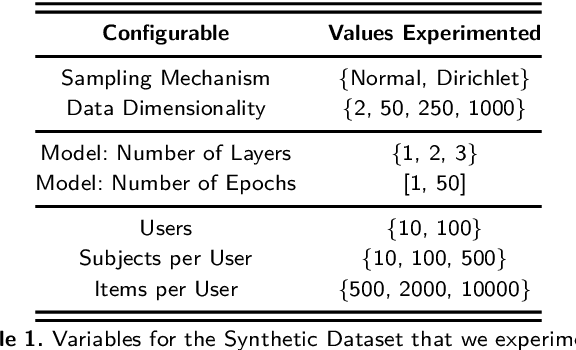

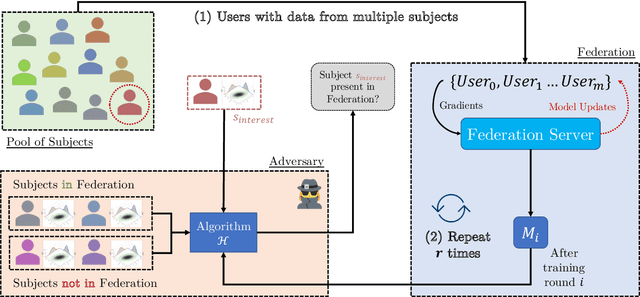

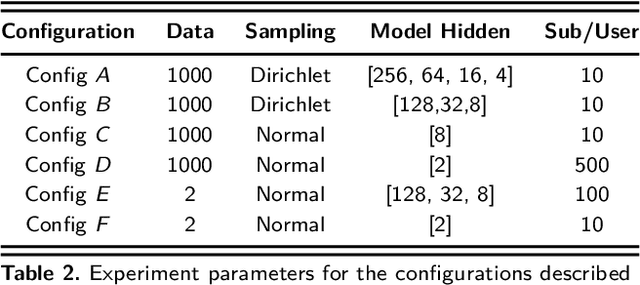

Abstract: Privacy in Federated Learning (FL) is studied at two different granularities: item-level, which protects individual data points, and user-level, which protects each user (participant) in the federation. Nearly all of the private FL literature is dedicated to studying privacy attacks and defenses at these two granularities. Recently, subject-level privacy has emerged as an alternative privacy granularity to protect the privacy of individuals (data subjects) whose data is spread across multiple (organizational) users in cross-silo FL settings. An adversary might be interested in recovering private information about these individuals (a.k.a. *data subjects*) by attacking the trained model. A systematic study of these patterns requires complete control over the federation, which is impossible with real-world datasets. We design a simulator for generating various synthetic federation configurations, enabling us to study how properties of the data, model design and training, and the federation itself impact subject privacy risk. We propose three attacks for *subject membership inference* and examine the interplay between all factors within a federation that affect the attacks' efficacy. We also investigate the effectiveness of Differential Privacy in mitigating this threat. Our takeaways generalize to real-world datasets like FEMNIST, giving credence to our findings.

Private Cross-Silo Federated Learning for Extracting Vaccine Adverse Event Mentions

Mar 12, 2021

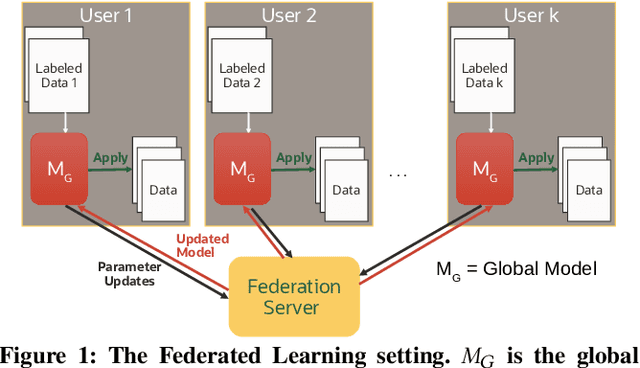

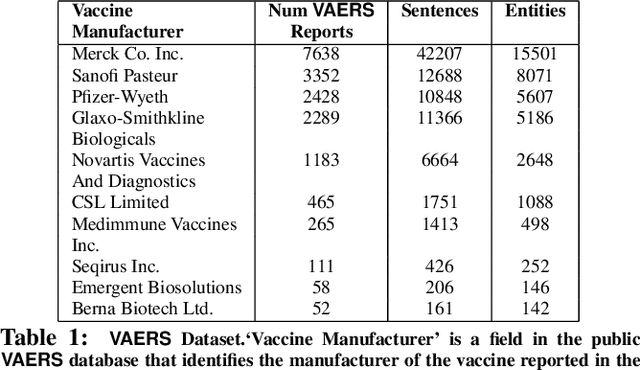

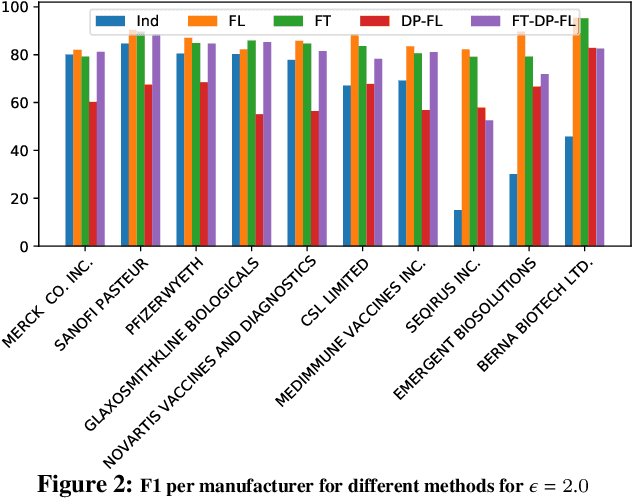

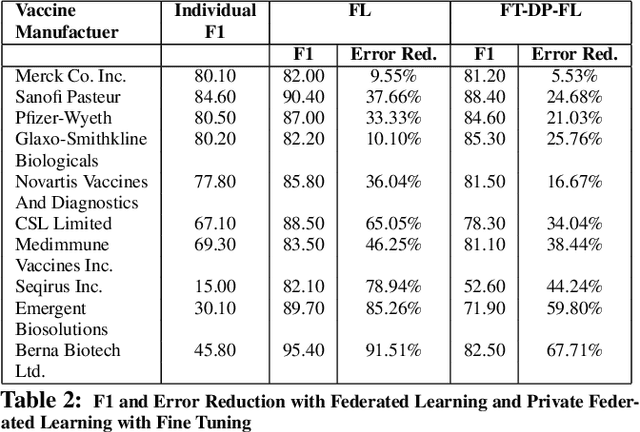

Abstract: Federated Learning (FL) is quickly becoming a go-to distributed training paradigm for users to jointly train a global model without physically sharing their data. Users can indirectly contribute to, and directly benefit from, a much larger aggregate data corpus used to train the global model. However, literature on successful applications of FL in real-world problem settings is somewhat sparse. In this paper, we describe our experience applying an FL-based solution to the Named Entity Recognition (NER) task for an adverse event detection application in the context of mass-scale vaccination programs. We present a comprehensive empirical analysis of various dimensions of benefits gained with FL-based training. Furthermore, we investigate the effects of tighter Differential Privacy (DP) constraints in highly sensitive settings where federation users must enforce Local DP to ensure strict privacy guarantees. We show that Local DP can severely cripple the global model's prediction accuracy, thus disincentivizing users from participating in the federation. In response, we demonstrate how recent innovations in personalization methods can help significantly recover the lost accuracy. We focus our analysis on the Federated Fine-Tuning algorithm, FedFT, and prove that it is not PAC Identifiable, thus making it even more attractive for FL-based training.

Private Federated Learning with Domain Adaptation

Dec 13, 2019

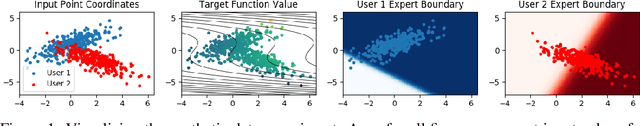

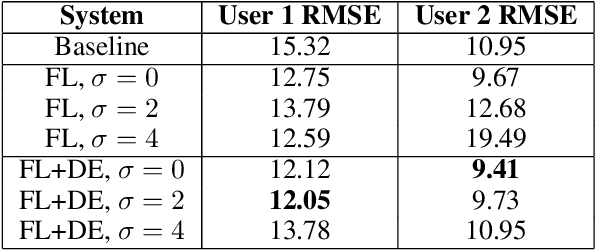

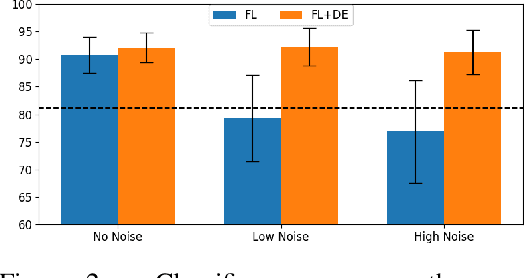

Abstract: Federated Learning (FL) is a distributed machine learning (ML) paradigm that enables multiple parties to jointly re-train a shared model without sharing their data with any other parties, offering advantages in both scale and privacy. We propose a framework to augment this collaborative model-building with per-user domain adaptation. We show that this technique improves model accuracy for all users, using both real and synthetic data, and that this improvement is much more pronounced when differential privacy bounds are imposed on the FL model.
