Daniel Gutiérrez Reina

Optimizing Plastic Waste Collection in Water Bodies Using Heterogeneous Autonomous Surface Vehicles with Deep Reinforcement Learning

Dec 03, 2024

Abstract: This paper presents a model-free deep reinforcement learning framework for informative path planning with heterogeneous fleets of autonomous surface vehicles to locate and collect plastic waste. The system employs two teams of vehicles: scouts and cleaners. Coordination between these teams is achieved through a deep reinforcement learning approach, allowing agents to learn strategies that maximize cleaning efficiency. The primary objective is for the scout team to provide an up-to-date contamination model, while the cleaner team collects as much waste as possible following this model. This strategy leads to heterogeneous teams that optimize fleet efficiency through inter-team cooperation supported by a tailored reward function. Different trainings of the proposed algorithm are compared with other state-of-the-art heuristics in two distinct scenarios, one with high convexity and another with narrow corridors and challenging access. The results show that deep reinforcement learning based algorithms outperform the benchmark heuristics and exhibit superior adaptability. In addition, training with greedy actions further enhances performance, particularly in scenarios with intricate layouts.

Deep Reinforcement Multi-agent Learning framework for Information Gathering with Local Gaussian Processes for Water Monitoring

Jan 09, 2024

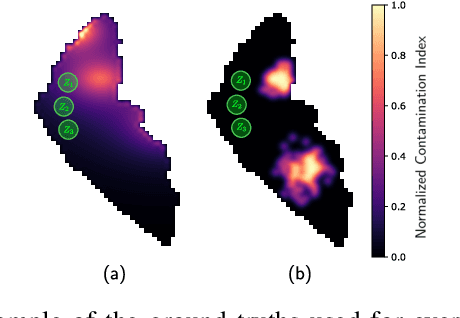

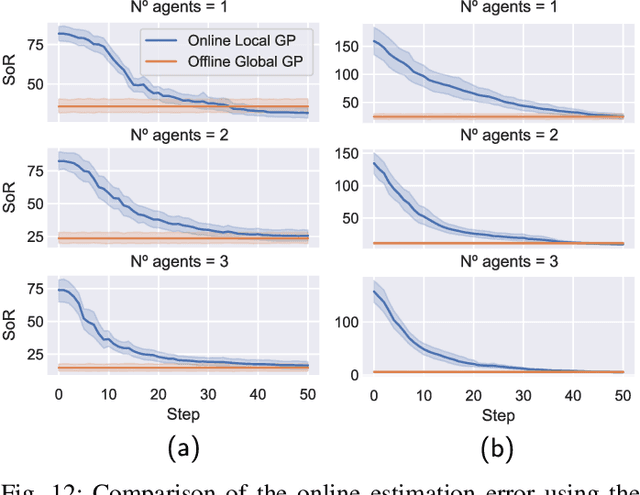

Abstract: The conservation of hydrological resources involves continuously monitoring their contamination. This paper proposes a multi-agent system composed of autonomous surface vehicles to monitor water quality efficiently. To achieve safe control of the fleet, the fleet policy should be able to act based on the measurements and on the fleet state. Local Gaussian Processes and Deep Reinforcement Learning are used jointly to obtain effective monitoring policies. Local Gaussian processes, unlike classical global Gaussian processes, can model information with dissimilar spatial correlations, which captures the water quality information more accurately. A deep convolutional policy is proposed that bases its decisions on observations of the mean and variance of this model, by means of an information gain reward. Using a Double Deep Q-Learning algorithm, agents are trained to minimize the estimation error in a safe manner thanks to a consensus-based heuristic. Simulation results indicate an improvement of up to 24% in terms of the mean absolute error with the proposed models. Also, training results with 1-3 agents indicate that the proposed approach returns 20% and 24% smaller average estimation errors for, respectively, monitoring water quality variables and monitoring algae blooms, as compared to state-of-the-art approaches.

Censored Deep Reinforcement Patrolling with Information Criterion for Monitoring Large Water Resources using Autonomous Surface Vehicles

Oct 12, 2022

Abstract: Monitoring and patrolling large water resources is a major challenge for conservation. The problem of acquiring data from an underlying environment that usually changes over time requires a proper formulation of the information. Autonomous Surface Vehicles equipped with water quality sensor modules can serve as early-warning system agents for contamination peak detection, algae bloom monitoring, or oil-spill scenarios. In addition to information gathering, the vehicle must plan routes that are free of obstacles on non-convex maps. This work proposes a framework to obtain a collision-free policy that addresses the patrolling task for static and dynamic scenarios. Using information gain as a measure of the uncertainty reduction over data, a Deep Q-Learning algorithm improved by a Q-Censoring mechanism for model-based obstacle avoidance is proposed. The obtained results demonstrate the usefulness of the proposed algorithm for water resource monitoring in static and dynamic scenarios. Simulations showed that the use of noise-networks is a good choice for enhanced exploration, with 3 times less redundancy in the paths. Previous coverage strategies are also outperformed, both in the accuracy of the obtained contamination model, by 13% on average, and in the detection of dangerous contamination peaks, by 37%. Finally, these results indicate the appropriateness of the proposed framework for monitoring scenarios with autonomous vehicles.
