Optimizing Plastic Waste Collection in Water Bodies Using Heterogeneous Autonomous Surface Vehicles with Deep Reinforcement Learning

Paper and Code

Dec 03, 2024

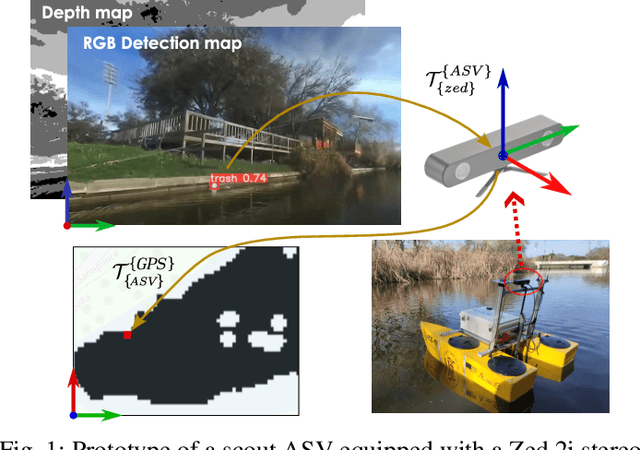

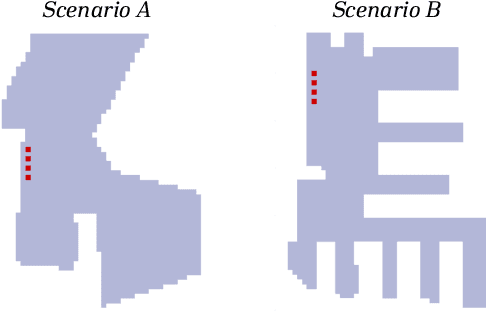

This paper presents a model-free deep reinforcement learning framework for informative path planning with heterogeneous fleets of autonomous surface vehicles to locate and collect plastic waste. The system employs two teams of vehicles: scouts and cleaners. Coordination between these teams is achieved through a deep reinforcement approach, allowing agents to learn strategies to maximize cleaning efficiency. The primary objective is for the scout team to provide an up-to-date contamination model, while the cleaner team collects as much waste as possible following this model. This strategy leads to heterogeneous teams that optimize fleet efficiency through inter-team cooperation supported by a tailored reward function. Different trainings of the proposed algorithm are compared with other state-of-the-art heuristics in two distinct scenarios, one with high convexity and another with narrow corridors and challenging access. According to the obtained results, it is demonstrated that deep reinforcement learning based algorithms outperform other benchmark heuristics, exhibiting superior adaptability. In addition, training with greedy actions further enhances performance, particularly in scenarios with intricate layouts.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge