Dani Korpi

Refining Neural Activation Patterns for Layer-Level Concept Discovery in Neural Network-Based Receivers

May 21, 2025

Abstract: Concept discovery in neural networks often targets individual neurons or human-interpretable features, overlooking distributed layer-wide patterns. We study the Neural Activation Pattern (NAP) methodology, which clusters full-layer activation distributions to identify such layer-level concepts. Applied to visual object recognition and radio receiver models, we propose improved normalization, distribution estimation, distance metrics, and varied cluster selection. In the radio receiver model, distinct concepts did not emerge; instead, a continuous activation manifold shaped by Signal-to-Noise Ratio (SNR) was observed -- highlighting SNR as a key learned factor, consistent with classical receiver behavior and supporting physical plausibility. Our enhancements to NAP improved in-distribution vs. out-of-distribution separation, suggesting better generalization and indirectly validating clustering quality. These results underscore the importance of clustering design and activation manifolds in interpreting and troubleshooting neural network behavior.

Interpreting Deep Neural Network-Based Receiver Under Varying Signal-To-Noise Ratios

Sep 25, 2024

Abstract: We propose a novel method for interpreting neural networks, focusing on a convolutional neural network-based receiver model. The method identifies which unit or units of the model contain the most (or least) information about the channel parameter(s) of interest, providing insights at both global and local levels -- with global explanations aggregating local ones. Experiments on link-level simulations demonstrate the method's effectiveness in identifying units that contribute most (and least) to signal-to-noise ratio processing. Although we focus on a radio receiver model, the method generalizes to other neural network architectures and applications, offering robust estimation even in high-dimensional settings.

Adapting to Reality: Over-the-Air Validation of AI-Based Receivers Trained with Simulated Channels

Aug 08, 2024

Abstract: Recent research has shown that integrating artificial intelligence (AI) into wireless communication systems can significantly improve spectral efficiency. However, the prevalent use of simulated radio channel data for training and validating neural network-based radios raises concerns about their generalization capability to diverse real-world environments. To address this, we conducted empirical over-the-air (OTA) experiments using software-defined radio (SDR) technology to test the performance of an NN-based orthogonal frequency division multiplexing (OFDM) receiver in a real-world small cell scenario. Our assessment reveals that the performance of receivers trained on diverse 3GPP TS38.901 channel models and broad parameter ranges significantly surpasses conventional receivers in our testing environment, demonstrating strong generalization to a new environment. Conversely, setting simulation parameters to narrowly reflect the actual measurement environment led to suboptimal OTA performance, highlighting the crucial role of rich and randomized training data in improving the NN-based receiver's performance. While our empirical test results are promising, they also suggest that developing new channel models tailored for training these learned receivers would enhance their generalization capability and reduce training time. Our testing was limited to a relatively narrow environment, and we encourage further testing in more complex environments.

Deep Learning-Based Pilotless Spatial Multiplexing

Dec 08, 2023

Abstract: This paper investigates the feasibility of machine learning (ML)-based pilotless spatial multiplexing in multiple-input and multiple-output (MIMO) communication systems. In particular, it is shown that by training the transmitter and receiver jointly, the transmitter can learn constellation shapes for the spatial streams that facilitate completely blind separation and detection by the simultaneously learned receiver. To the best of our knowledge, this is the first time ML-based spatial multiplexing without channel estimation pilots has been demonstrated. The results show that the learned pilotless scheme can outperform a conventional pilot-based system by as much as 15-20% in terms of spectral efficiency, depending on the modulation order and signal-to-noise ratio.

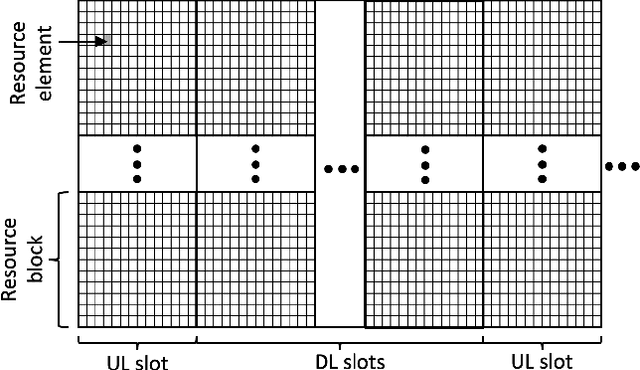

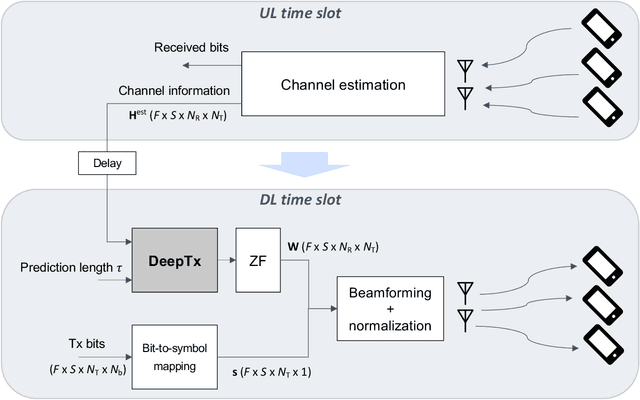

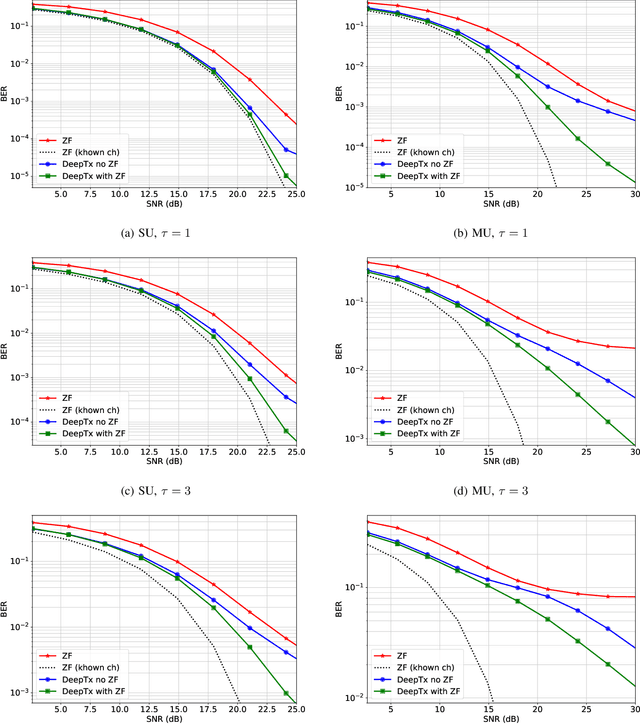

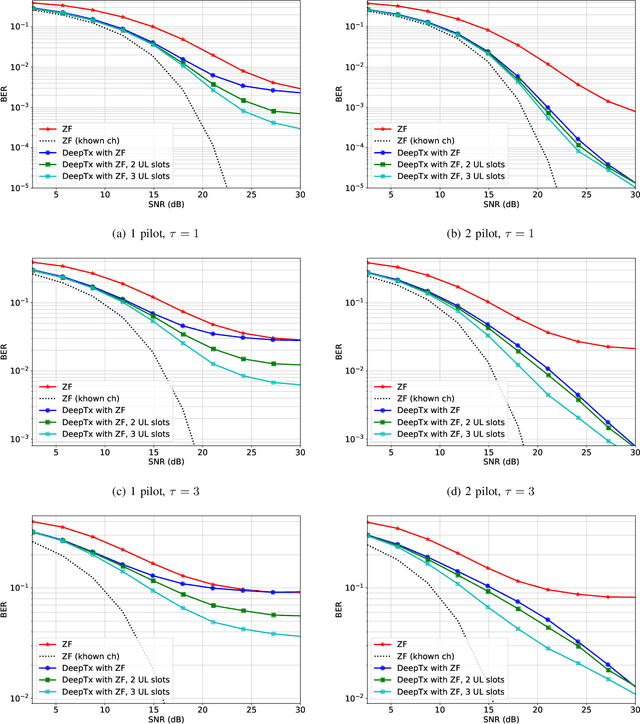

DeepTx: Deep Learning Beamforming with Channel Prediction

Feb 21, 2022

Abstract: Machine learning algorithms have recently been considered for many tasks in the field of wireless communications. Previously, we proposed the use of a deep fully convolutional neural network (CNN) for receiver processing and showed it to provide considerable performance gains. In this study, we focus on machine learning algorithms for the transmitter. In particular, we consider beamforming and propose a CNN which, for a given uplink channel estimate as input, outputs downlink channel information to be used for beamforming. The CNN is trained in a supervised manner considering both uplink and downlink transmissions with a loss function that is based on UE receiver performance. The main task of the neural network is to predict the channel evolution between uplink and downlink slots, but it can also learn to handle inefficiencies and errors in the whole chain, including the actual beamforming phase. The provided numerical experiments demonstrate the improved beamforming performance.

Waveform Learning for Reduced Out-of-Band Emissions Under a Nonlinear Power Amplifier

Jan 14, 2022

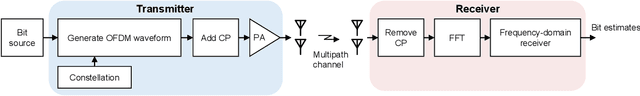

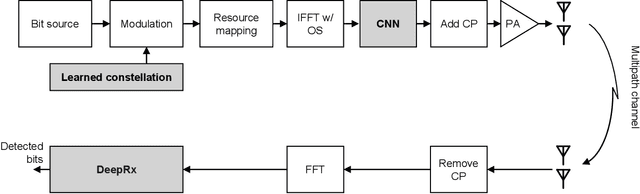

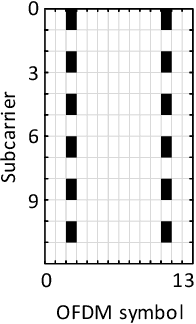

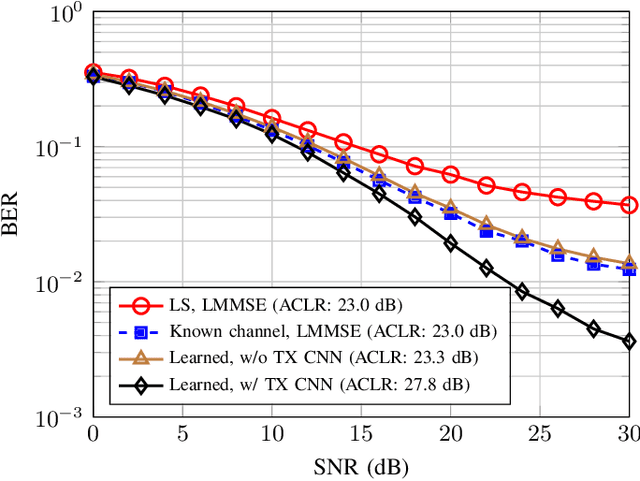

Abstract: Machine learning (ML) has shown great promise in optimizing various aspects of the physical layer processing in wireless communication systems. In this paper, we use ML to jointly learn the transmit waveform and the frequency-domain receiver. In particular, we consider a scenario where the transmitter power amplifier is operating in a nonlinear manner, and ML is used to optimize the waveform to minimize the out-of-band emissions. The system also learns a constellation shape that facilitates pilotless detection by the simultaneously learned receiver. The simulation results show that such an end-to-end optimized system can communicate data more accurately and with lower out-of-band emissions than conventional systems, thereby demonstrating the potential of ML in optimizing the air interface. To the best of our knowledge, there are no prior works considering the power amplifier-induced emissions in an end-to-end learned system. These findings pave the way towards an ML-native air interface, which could be one of the building blocks of 6G.

HybridDeepRx: Deep Learning Receiver for High-EVM Signals

Jun 30, 2021

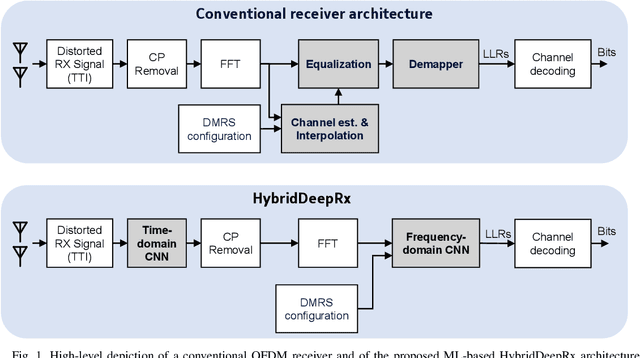

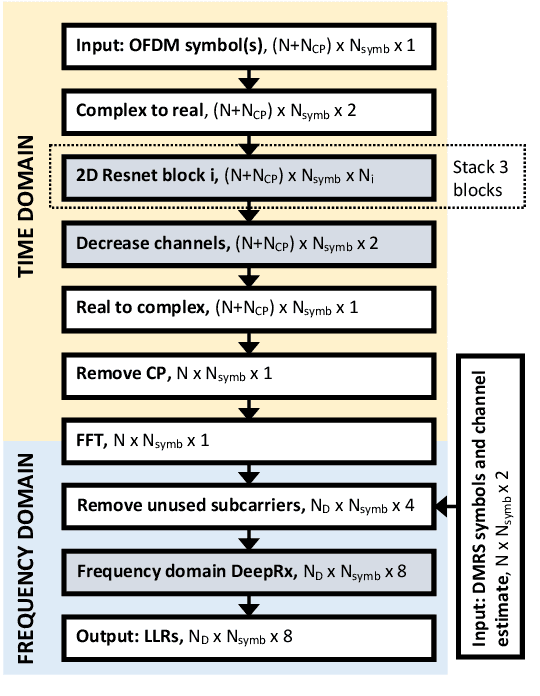

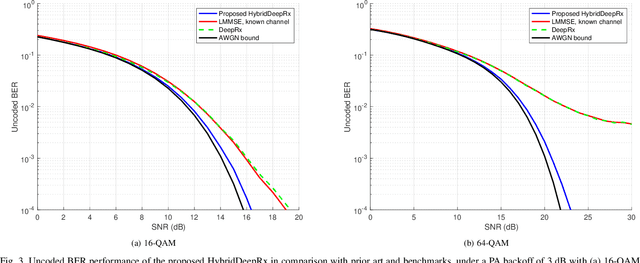

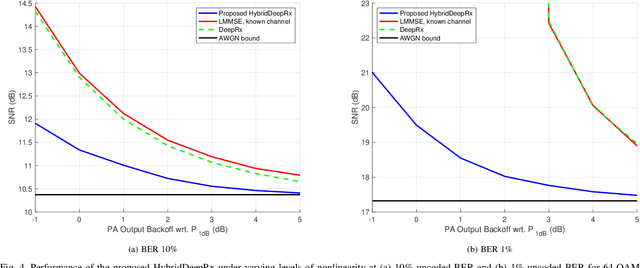

Abstract: In this paper, we propose a machine learning (ML) based physical layer receiver solution for demodulating OFDM signals that are subject to a high level of nonlinear distortion. Specifically, a novel deep learning based convolutional neural network receiver is devised, containing layers in both the time and frequency domains, allowing it to demodulate and decode the transmitted bits reliably despite the high error vector magnitude (EVM) in the transmit signal. An extensive set of numerical results is provided in the context of 5G NR uplink, also incorporating measured terminal power amplifier characteristics. The obtained results show that the proposed receiver system is able to clearly outperform classical linear receivers as well as existing ML receiver approaches, especially when the EVM is high in comparison with modulation order. The proposed ML receiver can thus facilitate pushing the terminal power amplifier (PA) systems deeper into saturation, and thereby improve the terminal power-efficiency, radiated power and network coverage.
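For readers unfamiliar with the EVM metric mentioned in the abstract, the following is a minimal sketch of the common RMS definition: the square root of the error power divided by the reference power. It is not taken from the paper. The function name `evm_percent` and the QPSK sample values are hypothetical, and normalizing by the mean reference power is an assumption (some specifications normalize differently).

```python
import math


def evm_percent(received, reference):
    """RMS error vector magnitude, in percent of the RMS reference amplitude."""
    if len(received) != len(reference) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Total power of the error vectors (received minus ideal symbol).
    error_power = sum(abs(r - s) ** 2 for r, s in zip(received, reference))
    # Total power of the ideal reference symbols (normalization assumption).
    reference_power = sum(abs(s) ** 2 for s in reference)
    return 100.0 * math.sqrt(error_power / reference_power)


# Hypothetical QPSK symbols, each shifted by a small constant distortion.
reference = [1 + 1j, -1 + 1j, -1 - 1j, 1 - 1j]
received = [s + 0.1 for s in reference]
print(f"EVM = {evm_percent(received, reference):.2f}%")
```

A higher-order constellation packs its points closer together, so the same EVM percentage is more harmful there, which is why the abstract relates EVM to modulation order.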

DeepRx MIMO: Convolutional MIMO Detection with Learned Multiplicative Transformations

Oct 30, 2020

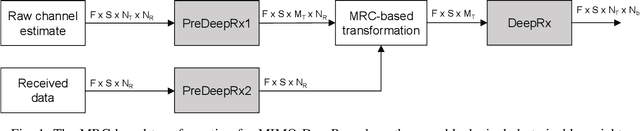

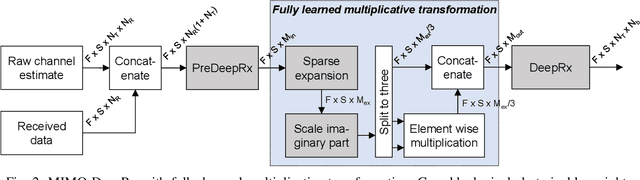

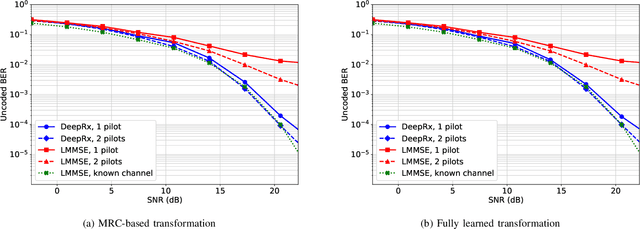

Abstract: Recently, deep learning has been proposed as a potential technique for improving the physical layer performance of radio receivers. Despite the large number of encouraging results, most works have not considered spatial multiplexing in the context of multiple-input and multiple-output (MIMO) receivers. In this paper, we present a deep learning-based MIMO receiver architecture that consists of a ResNet-based convolutional neural network, also known as DeepRx, combined with a so-called transformation layer, all trained together. We propose two novel alternatives for the transformation layer: a maximal ratio combining-based transformation, or a fully learned transformation. The former relies more on expert knowledge, while the latter utilizes learned multiplicative layers. Both proposed transformation layers are shown to clearly outperform the conventional baseline receiver, especially with sparse pilot configurations. To the best of our knowledge, these are some of the first results showing such high performance for a fully learned MIMO receiver.
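As background for the maximal ratio combining (MRC) mentioned in the abstract, here is a minimal sketch of textbook single-stream MRC, which weights each antenna's observation by the conjugate of its channel coefficient and normalizes by the total channel gain. It is not the paper's transformation layer, which is trained together with the network. The function name `mrc_combine` and all numeric values are hypothetical, and the channel is assumed to be known exactly.

```python
def mrc_combine(received, channel):
    """Textbook MRC for one symbol seen on several antennas: h^H y / ||h||^2."""
    gain = sum(abs(h) ** 2 for h in channel)
    if gain == 0:
        raise ValueError("channel has zero gain")
    # Matched filtering: conjugate channel weights align the phases and
    # emphasize the antennas with the strongest channel.
    matched = sum(h.conjugate() * y for h, y in zip(channel, received))
    return matched / gain


# Hypothetical example: one QPSK symbol received on two antennas.
symbol = 1 - 1j
channel = [0.8 + 0.3j, -0.2 + 1.1j]    # assumed perfectly known
noise = [0.05 - 0.02j, -0.03 + 0.04j]  # illustrative noise samples
received = [h * symbol + n for h, n in zip(channel, noise)]
estimate = mrc_combine(received, channel)
print(f"estimate = {estimate:.3f}")
```

Without noise, the combiner returns the transmitted symbol exactly. With noise, the residual error is the noise projected onto the channel direction and scaled by the inverse of the channel gain.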

DeepRx: Fully Convolutional Deep Learning Receiver

May 04, 2020

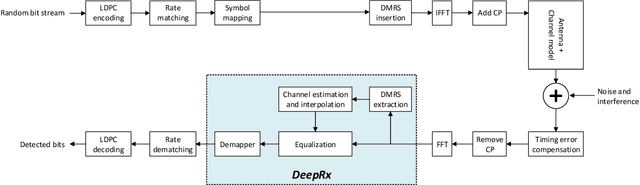

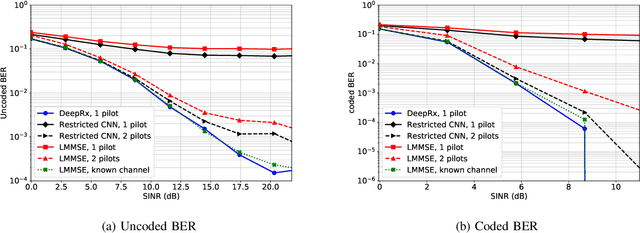

Abstract: Deep learning has solved many problems that are out of reach of heuristic algorithms. It has also been successfully applied in wireless communications, even though the current radio systems are well-understood and optimal algorithms exist for many tasks. While some gains have been obtained by learning individual parts of a receiver, a better approach is to jointly learn the whole receiver. This, however, often results in a challenging nonlinear problem, for which the optimal solution is infeasible to implement. To this end, we propose a deep fully convolutional neural network, DeepRx, which executes the whole receiver pipeline from frequency domain signal stream to uncoded bits in a 5G-compliant fashion. We facilitate accurate channel estimation by constructing the input of the convolutional neural network in a very specific manner using both the data and pilot symbols. Also, DeepRx outputs soft bits that are compatible with the channel coding used in 5G systems. Using 3GPP-defined channel models, we demonstrate that DeepRx outperforms traditional methods. We also show that the high performance can likely be attributed to DeepRx learning to utilize the known constellation points of the unknown data symbols, together with the local symbol distribution, for improved detection accuracy.
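To illustrate what "soft bits" usually means, the sketch below computes a log-likelihood ratio (LLR) for the simplest textbook case: BPSK over a real-valued AWGN channel with equally likely bits. It is not DeepRx's output layer. The function names, the bit mapping (bit 0 to +1, bit 1 to -1), and the sign convention LLR = ln(P(b=0|y) / P(b=1|y)) are all assumptions, and conventions differ between decoders.

```python
import math


def bpsk_llr(y, noise_variance):
    """LLR ln(P(b=0|y) / P(b=1|y)) for BPSK in real AWGN with equal priors."""
    # The ratio of the two Gaussian likelihoods reduces to 2*y / sigma^2.
    return 2.0 * y / noise_variance


def prob_bit_is_one(llr):
    """Convert an LLR under the convention above to P(b=1|y)."""
    return 1.0 / (1.0 + math.exp(llr))


# Hypothetical observation: a sample near +1 strongly suggests bit 0.
llr = bpsk_llr(0.9, noise_variance=0.5)
print(f"LLR = {llr:.2f}, P(bit=1) = {prob_bit_is_one(llr):.4f}")
```

The sign of the LLR gives the hard decision and its magnitude gives the confidence, which is the information a soft-input channel decoder uses.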
