Dan Povey

Efficient MDI Adaptation for n-gram Language Models

Aug 05, 2020

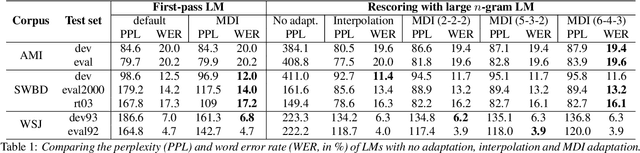

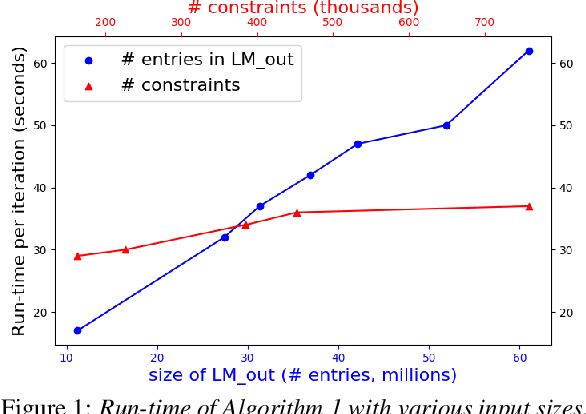

Abstract: This paper presents an efficient algorithm for n-gram language model adaptation under the minimum discrimination information (MDI) principle, where an out-of-domain language model is adapted to satisfy marginal probability constraints derived from in-domain data. The main challenge of MDI language model adaptation is its computational complexity. By exploiting the backoff structure of n-gram models and the hierarchical training method originally proposed for maximum entropy (ME) language models, we show that each iteration of MDI adaptation can be computed in time linear in the size of the inputs. The complexity is the same as for ME models, even though MDI is more general than ME. This makes MDI adaptation practical for large corpora and vocabularies. Experimental results confirm the scalability of our algorithm on very large datasets. Compared to simple linear interpolation, MDI adaptation yields slightly worse perplexity but better word error rate.
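To make the MDI principle concrete, here is a minimal toy sketch in Python. It is not the paper's algorithm: it ignores backoff structure and hierarchical training, and works on a tiny joint distribution over (history, word) pairs rather than a conditional n-gram model. Under that assumption, and with a single constraint on the word marginal, the distribution closest to the out-of-domain model in KL divergence is obtained by rescaling each entry by the ratio of the target marginal to the current marginal. The function name `mdi_adapt_joint` and the example probabilities are hypothetical.

```python
from collections import defaultdict


def mdi_adapt_joint(q_joint, target_marginal):
    """Toy MDI adaptation of a joint distribution (hypothetical helper).

    q_joint: dict mapping (history, word) -> out-of-domain probability.
    target_marginal: dict mapping word -> in-domain marginal probability.

    Returns the distribution p minimizing KL(p || q) subject to
    sum over histories of p(history, word) == target_marginal[word].
    With this single marginal constraint the solution is closed form:
    p(h, w) = q(h, w) * target(w) / q_marginal(w).
    """
    # Word marginal of the out-of-domain model.
    q_marginal = defaultdict(float)
    for (_, word), prob in q_joint.items():
        q_marginal[word] += prob

    # Rescale every entry so the word marginal matches the target.
    return {
        (history, word): prob * target_marginal[word] / q_marginal[word]
        for (history, word), prob in q_joint.items()
    }


# Made-up out-of-domain joint probabilities and in-domain word marginals.
q = {
    ("the", "cat"): 0.3,
    ("the", "dog"): 0.2,
    ("a", "cat"): 0.1,
    ("a", "dog"): 0.4,
}
target = {"cat": 0.7, "dog": 0.3}

adapted = mdi_adapt_joint(q, target)
for pair, prob in sorted(adapted.items()):
    print(pair, round(prob, 3))
```

The adapted distribution keeps the out-of-domain model's preferences among histories for each word while matching the in-domain word marginal. With several overlapping constraints no closed form exists in general, and iterative scaling is needed, which is where the per-iteration cost discussed in the abstract matters.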

Multistream CNN for Robust Acoustic Modeling

May 21, 2020

Abstract: This paper presents multistream CNN, a novel neural network architecture for robust acoustic modeling in speech recognition tasks. The proposed architecture accommodates diverse temporal resolutions in multiple streams to achieve robustness in acoustic modeling. To obtain this diversity of temporal resolution in embedding processing, we apply dilation to TDNN-F, a variant of 1D-CNN. Each stream stacks narrower TDNN-F layers whose kernels have a unique, stream-specific dilation rate, and the streams process input speech frames in parallel. The architecture can therefore better represent acoustic events without increasing model complexity. We validate the effectiveness of the proposed multistream CNN architecture by showing consistent improvement across various data sets. Trained with data augmentation methods, multistream CNN improves the WER on the test-other set of the LibriSpeech corpus by 12% (relative). On custom data from ASAPP's production system for a contact center, it achieves a relative WER improvement of 11% on customer channel audio (10% on average over agent and customer channel recordings), demonstrating the advantage of the proposed architecture in the wild. In terms of real-time factor (RTF), multistream CNN outperforms the normal TDNN-F by 15%, which also suggests its practicality for production systems and applications.
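The idea of parallel streams with stream-specific dilation rates can be illustrated with a small pure-Python sketch. This is only an assumption-laden illustration of receptive fields, not the paper's model: real TDNN-F layers are factorized, stacked, and trained, whereas here each stream is a single fixed 3-tap filter. The function names, the kernel, and the dilation rates (1, 2, 3) are hypothetical choices for the example.

```python
def dilated_conv1d(frames, kernel, dilation):
    """1D cross-correlation over time with a 3-tap kernel and zero padding.

    Padding by `dilation` on each side keeps the output the same length
    as the input, so streams with different dilations stay frame-aligned.
    """
    assert len(kernel) == 3, "this sketch assumes a 3-tap kernel"
    padded = [0] * dilation + list(frames) + [0] * dilation
    return [
        sum(weight * padded[t + i * dilation] for i, weight in enumerate(kernel))
        for t in range(len(frames))
    ]


def multistream_features(frames, kernel, dilations):
    """Run one dilated filter per stream in parallel, then concatenate
    the per-frame outputs of all streams (hypothetical helper)."""
    streams = [dilated_conv1d(frames, kernel, d) for d in dilations]
    return [list(per_frame) for per_frame in zip(*streams)]


# A single impulse at frame 4 shows how far each stream looks in time.
impulse = [0, 0, 0, 0, 1, 0, 0, 0, 0]
features = multistream_features(impulse, kernel=[1, 1, 1], dilations=(1, 2, 3))
for t, row in enumerate(features):
    print(t, row)
```

Each stream responds to the impulse at a different temporal offset: the stream with dilation 3 already reacts at frame 1, while the stream with dilation 1 only reacts at frames 3 to 5. All streams use the same number of kernel weights, which is the sense in which wider temporal context comes without extra parameters per filter.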
