Dan Pagendam

Gaussian Ensemble Belief Propagation for Efficient Inference in High-Dimensional Systems

Feb 13, 2024

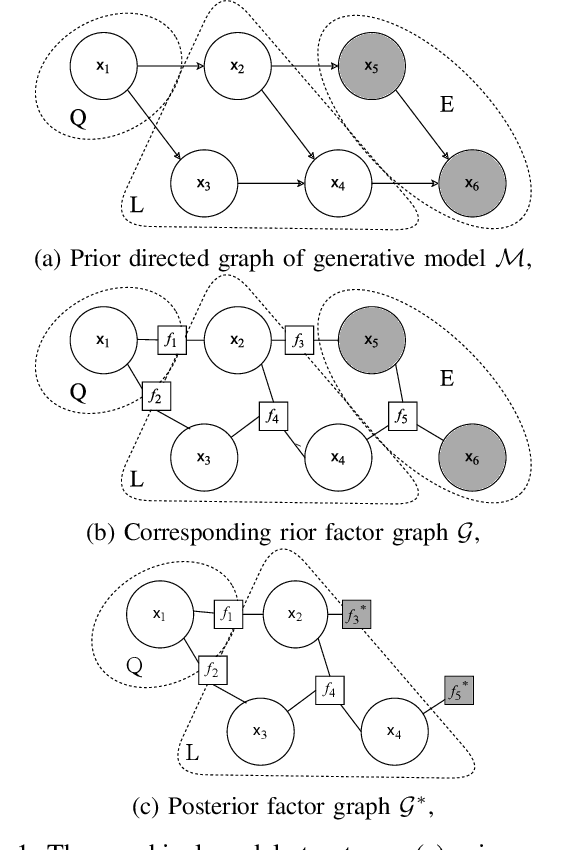

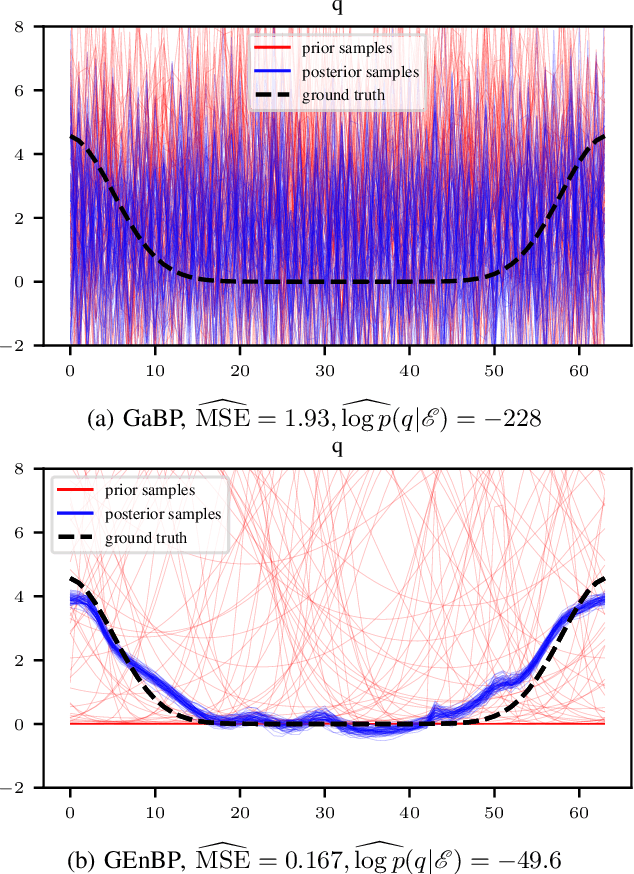

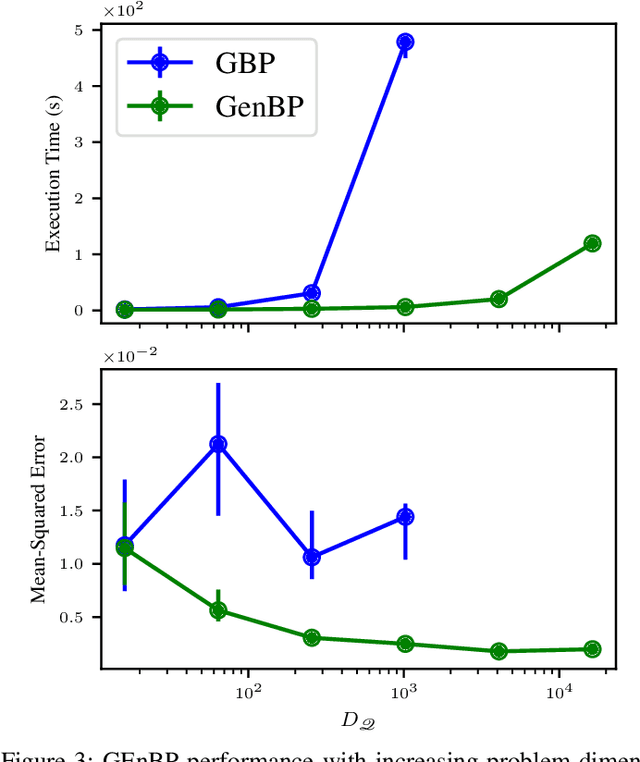

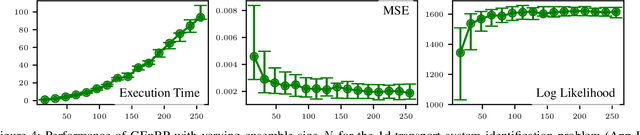

Abstract: Efficient inference in high-dimensional models remains a central challenge in machine learning. This paper introduces the Gaussian Ensemble Belief Propagation (GEnBP) algorithm, a fusion of the Ensemble Kalman filter and Gaussian belief propagation (GaBP) methods. GEnBP updates ensembles by passing low-rank local messages in a graphical model structure. This combination inherits favourable qualities from each method. Ensemble techniques allow GEnBP to handle high-dimensional states, parameters and intricate, noisy, black-box generation processes. The use of local messages in a graphical model structure ensures that the approach is suited to distributed computing and can efficiently handle complex dependence structures. GEnBP is particularly advantageous when the ensemble size is considerably smaller than the inference dimension. This scenario often arises in fields such as spatiotemporal modelling, image processing and physical model inversion. GEnBP can be applied to general problem structures, including jointly learning system parameters, observation parameters, and latent state variables.
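To make the ensemble ingredient concrete, here is a minimal sketch of a stochastic ensemble Kalman update for a single scalar observation. It is not GEnBP itself and shows no message passing. It only illustrates how an ensemble much smaller than the state dimension can be updated without forming a full covariance matrix. The function name `enkf_update`, the direct observation of one state component, and the toy data are all assumptions made for illustration.

```python
import random


def enkf_update(ensemble, obs_index, y, obs_var, rng):
    """Stochastic ensemble Kalman update for one scalar observation.

    ensemble  : list of N state vectors, each a list of D floats
    obs_index : index of the state component observed directly (assumed)
    y         : observed value
    obs_var   : observation noise variance
    rng       : random.Random instance used to perturb the observation
    """
    n = len(ensemble)
    d = len(ensemble[0])
    # Ensemble mean of every state component.
    mean = [sum(member[j] for member in ensemble) / n for j in range(d)]
    # Predicted observations and their sample variance.
    hx = [member[obs_index] for member in ensemble]
    hx_mean = mean[obs_index]
    hx_var = sum((h - hx_mean) ** 2 for h in hx) / (n - 1)
    # Sample cross-covariance between each component and the prediction.
    # This is a length-D vector; no D x D matrix is ever built.
    cross = [
        sum((m[j] - mean[j]) * (h - hx_mean) for m, h in zip(ensemble, hx))
        / (n - 1)
        for j in range(d)
    ]
    gain = [c / (hx_var + obs_var) for c in cross]
    updated = []
    for member, h in zip(ensemble, hx):
        # Each member sees its own noisy copy of the observation.
        perturbed = y + rng.gauss(0.0, obs_var ** 0.5)
        innovation = perturbed - h
        updated.append([member[j] + gain[j] * innovation for j in range(d)])
    return updated


# Toy problem: 20 members, 50-dimensional state, components strongly
# correlated through a shared offset.
rng = random.Random(0)
ensemble = []
for _ in range(20):
    shared = rng.gauss(0.0, 1.0)
    ensemble.append([shared + rng.gauss(0.0, 0.1) for _ in range(50)])

posterior = enkf_update(ensemble, obs_index=0, y=2.0, obs_var=0.01, rng=rng)
```

Because the components are correlated in the prior ensemble, observing component 0 also shifts the unobserved components. The cost per observation is linear in the state dimension and the ensemble size.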

Opportunistic Emulation of Computationally Expensive Simulations via Deep Learning

Aug 25, 2021

Abstract: With the underlying aim of increasing the efficiency of computational modelling pertinent to managing and protecting the Great Barrier Reef, we investigate the use of deep neural networks for opportunistic model emulation of APSIM models by repurposing an existing large dataset containing the outputs of APSIM model runs. The dataset has not been specifically tailored for the model emulation task. We employ two neural network architectures for the emulation task: a densely connected feed-forward neural network (FFNN), and a gated recurrent unit feeding into an FFNN (GRU-FFNN), a type of recurrent neural network. Various configurations of the architectures are trialled. A minimum correlation statistic is employed to identify clusters of APSIM scenarios that can be aggregated to form training sets for model emulation. We focus on emulating four important outputs of the APSIM model: runoff, soil_loss, DINrunoff, Nleached. The GRU-FFNN architecture with three hidden layers and 128 units per layer provides good emulation of runoff and DINrunoff. However, soil_loss and Nleached were emulated relatively poorly under a wide range of the considered architectures; the emulators failed to capture variability at higher values of these two outputs. While the opportunistic data available from past modelling activities provides a large and useful dataset for exploring APSIM emulation, it may not be sufficiently rich for successful deep learning of more complex model dynamics. Design of Computer Experiments may be required to generate more informative data to emulate all output variables of interest. We also suggest the use of synthetic meteorology settings to allow the model to be fed a wide range of inputs. These need not all be representative of normal conditions, but can provide a denser, more informative dataset from which complex relationships between input and outputs can be learned.

Modern strategies for time series regression

Oct 29, 2020

Abstract: This paper discusses several modern approaches to regression analysis involving time series data where some of the predictor variables are also indexed by time. We discuss classical statistical approaches as well as methods that have been proposed recently in the machine learning literature. The approaches are compared and contrasted, and it will be seen that there are advantages and disadvantages to most currently available approaches. There is ample room for methodological developments in this area. The work is motivated by an application involving the prediction of water levels as a function of rainfall and other climate variables in an aquifer in eastern Australia.
